Achieving Cross-Lab Reproducibility in Immunological Assays: A Framework for Standardization, Validation, and Best Practices

Abstract

This article provides a comprehensive framework for researchers, scientists, and drug development professionals aiming to improve the reproducibility of immunological assays across different laboratories. It explores the foundational challenges and sources of variability, details methodological best practices and standardized protocols for key assays like the DC maturation assay and flow cytometry, offers troubleshooting strategies for critical parameters such as cell fitness and reagent validation, and establishes a rigorous approach for assay validation and comparative analysis. By synthesizing current research and multi-institutional efforts, this guide aims to equip scientists with the knowledge to generate reliable, comparable, and clinically translatable immunological data.

Understanding the Reproducibility Crisis in Immunological Testing

Reproducibility forms the cornerstone of scientific validity, particularly in biomedical research where immunological assays provide critical data for vaccine development and therapeutic interventions. The consistency of experimental results—whether within a single laboratory, across multiple facilities, or when different methodologies are applied—directly impacts the reliability of scientific conclusions and the success of clinical translation. In immunological research, the challenges of achieving reproducible data are compounded by complex assay requirements, reagent variability, and the need for standardized protocols. This guide examines the multifaceted nature of reproducibility through the lens of recent interlaboratory studies and methodological validations, providing researchers with comparative data and frameworks to enhance the reliability of their experimental findings.

The Three Dimensions of Reproducibility

Intra-laboratory Precision

Intra-laboratory precision, also known as intermediate precision, measures the consistency of results within a single laboratory under varying conditions such as different analysts, equipment, or days. This dimension of reproducibility captures the inherent variability of an assay when performed within one facility.

A study evaluating a multiplex immunoassay for Group B streptococcus (GBS) capsular polysaccharide antibodies demonstrated strong within-laboratory precision. Across the five participating laboratories, the relative standard deviation (RSD) was generally below 20% for all six GBS serotypes when factoring in variables like bead lots, analysts, and testing days [1]. By comparison, a microneutralization assay for detecting anti-AAV9 neutralizing antibodies reported intra-assay variation of 7-35% for low positive quality controls [2].

Table 1: Intra-laboratory Precision Metrics Across Assay Types

| Assay Type | Target | Precision Metric | Reported Value | Key Variables Tested |
|---|---|---|---|---|
| Multiplex Immunoassay | GBS CPS Serotypes | Relative Standard Deviation (RSD) | <20% | Bead lot, analyst, day [1] |
| Microneutralization Assay | Anti-AAV9 NAbs | Intra-assay Variation | 7-35% | Low positive QC samples [2] |
| Microneutralization Assay | Anti-AAV9 NAbs | Inter-assay Variation | 22-41% | Low positive QC samples [2] |
| MEASURE Assay | fHbp Surface Expression | Total RSD | ≤30% | Multiple operators/runs [3] |
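As a rough illustration of the precision metric reported in the table, the sketch below computes a relative standard deviation from repeated measurements of one quality control sample. The run values and the function name `rsd_percent` are hypothetical, and the cited studies may have derived their RSDs differently (for example, from variance components on log-transformed data), so this should be read as a minimal example rather than the published method.

```python
from statistics import mean, stdev


def rsd_percent(values):
    """Relative standard deviation: 100 * sample SD / mean."""
    return 100 * stdev(values) / mean(values)


# Hypothetical IgG concentrations (ug/mL) for one QC sample,
# measured across different analysts, bead lots, and days.
qc_runs = [10.2, 9.6, 11.1, 10.4, 9.9, 10.8]

rsd = rsd_percent(qc_runs)
print(f"RSD = {rsd:.1f}%, below 20% target: {rsd < 20}")
```

A laboratory could apply the same calculation per serotype and compare each result against whatever acceptance limit its validation plan specifies.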

Inter-laboratory Reproducibility

Inter-laboratory reproducibility represents the ability of different laboratories to produce consistent results using the same method. This is particularly crucial for multi-center clinical trials and global health initiatives where data must be comparable across sites.

The GBS multiplex immunoassay study demonstrated remarkable cross-laboratory reproducibility, with RSD values below 25% for all six serotypes across five different laboratories [1]. This consistency was achieved despite the participating facilities being located in different countries (USA, England, and South Africa), highlighting the effectiveness of standardized protocols and reagents.

In the validation of a meningococcal MEASURE assay, three independent laboratories achieved >97% agreement when classifying 42 MenB test strains based on a predetermined fluorescence intensity threshold [3]. This high level of concordance is significant as the MEASURE assay predicts strain susceptibility to vaccine-induced antibodies, a critical determination for vaccine efficacy assessment.

Table 2: Inter-laboratory Reproducibility in Recent Studies

| Assay Type | Participating Laboratories | Reproducibility Metric | Performance | Significance |
|---|---|---|---|---|
| GBS Multiplex Immunoassay | 5 (Pfizer, UKHSA, CDC, St. George's, Witwatersrand) | Cross-lab RSD | <25% all serotypes | Enables data comparison across studies [1] |
| MEASURE Assay | 3 (Pfizer, UKHSA, CDC) | Classification Agreement | >97% | Consistent prediction of vaccine susceptibility [3] |
| Microneutralization Assay | 3 (Beijing laboratories) | % Geometric Coefficient of Variation | 23-46% | Supports clinical trial application [2] |
| Malaria Multiplex Immunoassay | 2 (MSD, Jenner Institute) | Correlation of Clinical Samples | Statistically significant (all antigens) | Validated for Phase 3 clinical trial use [4] |

Methodological and Analytical Challenges

Methodological challenges encompass issues related to protocol standardization, reagent characterization, and data analysis approaches that impact reproducibility regardless of where an assay is performed.

The antibody characterization crisis represents a significant methodological challenge, with an estimated 50% of commercial antibodies failing to meet basic characterization standards [5]. This deficiency costs the U.S. research community an estimated $0.4-1.8 billion annually in irreproducible research [5].

In artificial intelligence applications for biomedical data science, reproducibility faces unique challenges from inherent non-determinism in AI models, data preprocessing variations, and substantial computational requirements that hinder independent verification [6]. For complex models like AlphaFold3, the computational cost alone presents a significant barrier to reproducibility, with the original AlphaFold requiring 264 hours of training on specialized Tensor Processing Units [6].

Standardized Experimental Protocols for Enhanced Reproducibility

GBS Multiplex Immunoassay Protocol

The standardized GBS multiplex immunoassay (MIA), adopted by the GASTON consortium, exemplifies a robust protocol designed for cross-laboratory reproducibility [1]:

- Bead Coupling: MagPlex microspheres are coupled with GBS capsular polysaccharide Poly-L-Lysine conjugates following standardized coating procedures

- Assay Setup: Each 96-well plate includes an 11-point human serum reference standard dilution series, quality control samples, and test serum samples diluted in assay buffer (0.5% BSA in 10 mM PBS/0.05% Tween-20/0.02% NaN3, pH 7.2)

- Incubation: Test serum samples are tested in duplicate at 1:500, 1:5,000, and 1:50,000 dilutions with overnight incubation

- Detection: Plates are washed using a standardized protocol, followed by addition of R-Phycoerythrin-conjugated goat anti-human IgG secondary antibody

- Analysis: Plates are read on a Luminex 200 reader using Bio-Plex Manager, with signal output expressed as mean fluorescence intensity (MFI)

Microneutralization Assay for Anti-AAV9 Antibodies

The optimized microneutralization assay for detecting anti-AAV9 neutralizing antibodies incorporates critical quality controls [2]:

- Sample Pre-treatment: Serum or plasma samples are heat-inactivated at 56°C for 30 minutes

- Virus Neutralization: 50 μL of 2-fold serially diluted serum (starting at 1:20) is incubated with 2×10^8 vg of rAAV9-EGFP-2A-Gluc in 50 μL DMEM with 0.1% BSA for 1 hour at 37°C

- Cell Infection: The mixture is added to HEK293-C340 cells (20,000 cells/well) and incubated for 48-72 hours

- Detection: Gaussia luciferase (Gluc) activity is measured using a luciferase assay system with coelenterazine substrate

- Quality Control: System QC requires inter-assay titer variation of <4-fold difference or geometric coefficient of variation <50%
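The system QC rule in the last step can be sketched as a simple check on the titers obtained for a control sample across runs. The function names, the example titers, and the choice to compute fold difference as maximum over minimum are assumptions made for illustration; the source states only the two thresholds (<4-fold or %GCV <50%).

```python
import math
from statistics import stdev


def fold_difference(titers):
    """Largest titer divided by smallest titer across runs."""
    return max(titers) / min(titers)


def percent_gcv(titers):
    """Geometric CV: 100 * (exp(SD of natural-log titers) - 1)."""
    sd_ln = stdev([math.log(t) for t in titers])
    return 100 * (math.exp(sd_ln) - 1)


def system_qc_passes(titers, max_fold=4.0, max_gcv=50.0):
    """Pass if either criterion quoted in the protocol is met."""
    return fold_difference(titers) < max_fold or percent_gcv(titers) < max_gcv


# Hypothetical control titers from four independent runs
control_titers = [160, 320, 160, 320]
print(fold_difference(control_titers), round(percent_gcv(control_titers), 1))
print("System QC passed:", system_qc_passes(control_titers))
```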

Essential Research Reagent Solutions

Table 3: Key Research Reagents and Their Functions in Reproducible Immunoassays

| Reagent/Material | Function | Reproducibility Considerations | Examples from Literature |
|---|---|---|---|
| Qualified Bead Lots | Solid phase for antigen immobilization | Lot-to-lot variability must be <20% RSD; qualification against reference lot required [1] | GBS CPS-PLL coated beads [1] |
| Human Serum Reference Standard | Quantification standard for IgG antibodies | Enables comparison across laboratories and studies; weight-based IgG assignments [1] | GBS human serum reference standard [1] |
| Quality Control Samples (QCS) | Monitoring assay performance | Pools of immune human serum samples; tested in each run [1] | GBS QCS from immune human serum pools [1] |
| rAAV Vectors with Reporter Genes | Virus neutralization target | Empty and full virus particles separated; <10% empty capsids [2] | rAAV9-EGFP-2A-Gluc [2] |
| Anti-AAV Neutralizing Monoclonal Antibody | System suitability control | Used for quality control; defines acceptable variation thresholds [2] | Mouse neutralizing monoclonal antibody in human negative serum [2] |
| Secondary Antibodies | Detection | Conjugated for specific detection methods; lot consistency critical [1] [4] | R-Phycoerythrin-conjugated goat anti-human IgG [1] |

Factors Influencing Reproducibility Across Dimensions

The pursuit of reproducibility in immunological assays requires systematic attention to intra-laboratory precision, inter-laboratory consistency, and methodological rigor. The case studies examined demonstrate that carefully standardized protocols, qualified reagents, and appropriate statistical approaches can achieve remarkable reproducibility across multiple laboratories, with relative standard deviations frequently below 25% and classification agreements exceeding 97%. The continued development of standardized assays like the GBS GASTON assay and the MEASURE assay, coupled with increased attention to antibody characterization and computational reproducibility, provides a roadmap for enhancing reliability in immunological research. As the field progresses, adherence to these principles will be essential for generating translatable findings that successfully bridge basic research and clinical application.

Reproducibility is a cornerstone of scientific research, yet immunological assays are particularly prone to variability that can compromise data reliability and cross-study comparisons. This guide objectively compares sources of variability and their impact on assay performance across different laboratory settings. Evidence from multi-site proficiency testing and methodological comparisons reveals that variability arises at every stage of the experimental workflow, from sample collection to final data interpretation [7]. Understanding and managing these sources is crucial for researchers, scientists, and drug development professionals who rely on precise and reproducible immunological data for critical decisions in therapeutic development and clinical applications.

Sample Collection and Handling

The pre-analytical phase introduces significant variability before formal testing begins. Sample stability is profoundly affected by handling conditions. Multi-site studies demonstrate that cytokine measurements in serum can vary by 10-25% based solely on freeze-thaw cycles or duration of sample storage at room temperature [7]. The matrix effect—where samples are diluted in serum, plasma, or artificial buffers—also substantially impacts recovery rates, particularly in immunoassays where sample composition interferes with antibody binding [8].

Reagents and Biological Materials

Reagent quality and consistency are fundamental to assay reproducibility. Critical reagents such as capture antibodies, detection antibodies, and analyte standards exhibit lot-to-lot variations that directly impact assay performance. Table 1 summarizes the effects of key reagent-related variables.

Table 1: Impact of Reagent Variability on Assay Performance

| Variable | Impact on Assay | Evidence |
|---|---|---|
| Antibody affinity/specificity | Alters sensitivity, dynamic range | Affinity-purified antibodies reduce non-specific binding [8] |
| Coating buffer composition | Affects immobilization efficiency | Comparison of carbonate-bicarbonate vs. PBS buffers [8] |
| Blocking buffer formulation | Changes background signal, noise | Casein-based blockers reduce non-specific binding vs. BSA [8] |
| Conjugate enzyme stability | Impacts detection sensitivity | HRP vs. alkaline phosphatase substrate kinetics [8] |

Biological materials present additional challenges. Use of misidentified, cross-contaminated, or over-passaged cell lines compromises experimental validity and reproducibility [9]. Long-term serial passaging can alter gene expression, growth rates, and physiological responses, generating significantly different results across laboratories using supposedly identical cellular models [9].

Assay Execution and Platform Differences

Technical execution contributes substantially to variability. In bead-based cytokine assays, procedural differences in washing steps, incubation timing, and temperature control account for approximately 15-30% of inter-laboratory variation [7]. Instrument selection introduces another layer of variability, with different plate readers and flow cytometers producing systematically different readouts despite identical samples [10] [7].

Substantial inter-assay differences emerge even when measuring the same analyte. For example, two different pseudovirus-based SARS-CoV-2 neutralization assays (Duke and Monogram) showed statistically significant differences in measured antibody titers when testing identical samples, with the Monogram assay consistently reporting higher values [11]. These differences necessitate statistical bridging methods to compare or combine data across platforms.

Quantitative Evidence from Multi-Laboratory Studies

Multi-site proficiency testing provides the most compelling evidence of variability in real-world conditions. The External Quality Assurance Program Oversight Laboratory (EQAPOL) multiplex program, which ran 22 rounds of proficiency testing over 12 years with more than 40 laboratories, offers comprehensive data on inter-laboratory variability [7].

Table 2: Inter-Laboratory Variability in Cytokine Measurements from EQAPOL Program

| Cytokine | Concentration (pg/mL) | Inter-lab CV (%) | Major Source of Variability |
|---|---|---|---|
| IL-2 | 50 | 15-25% | Bead type, detection antibody |
| IL-6 | 100 | 12-20% | Standard curve fitting |
| IL-10 | 75 | 18-30% | Matrix effects, sample dilution |
| TNF-α | 50 | 10-22% | Instrument calibration |
| IFN-γ | 100 | 20-35% | Bead type, sample handling |

The data reveal that variability is analyte-dependent, with some cytokines exhibiting consistently higher coefficients of variation (CV) across laboratories. The switch from polystyrene to paramagnetic beads early in the program significantly reduced average inter-laboratory CVs by approximately 8-12%, highlighting how single technological improvements can enhance reproducibility [7]. However, proficiency scores stabilized after initial improvements, suggesting fundamental limits to technical standardization.

Similar variability was observed in T-cell immunophenotyping across five laboratories, where interlaboratory differences were statistically significant for all T-cell subsets except CD4+ cells, ranging from minor to eightfold for CD25+ subsets [10]. Notably, the date of analysis was significantly associated with values for all cellular activation markers within laboratories, emphasizing the impact of temporal drift even in established assays [10].

Experimental Protocols for Assessing Variability

Variance Component Analysis

Formal statistical approaches are essential for quantifying variability. Variance component analysis, consistent with USP 〈1033〉, partitions total variability into its constituent sources [12]. The recommended practice involves:

- Logarithmic Transformation: Apply the natural (base e) logarithm to relative potency results, because variance tends to increase with potency on the original scale and the transformation reduces this heterogeneity [12].

- Experimental Design: Execute studies with multiple analysts over several days, with two assays performed by each analyst daily.

- Variance Calculation: Use statistical software to estimate variance components for each source (e.g., analyst, day, inter-assay).

- Percentage Calculation: Convert variance components to the more interpretable %CV or %GCV using the following formulas, where $V_c$ is the variance component estimate [12]:

- $\%\mathrm{CV} = 100\sqrt{\exp(V_c) - 1}$

- $\%\mathrm{GCV} = 100\left(\exp\left(\sqrt{V_c}\right) - 1\right)$
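To make the two conversion formulas concrete, the following sketch applies them to a set of variance components on the natural-log scale. The component values and the dictionary layout are hypothetical; in practice the estimates would come from the statistical software mentioned in the variance calculation step.

```python
import math


def pct_cv(vc):
    """%CV = 100 * sqrt(exp(Vc) - 1), with Vc a variance on the ln scale."""
    return 100 * math.sqrt(math.exp(vc) - 1)


def pct_gcv(vc):
    """%GCV = 100 * (exp(sqrt(Vc)) - 1), with Vc a variance on the ln scale."""
    return 100 * (math.exp(math.sqrt(vc)) - 1)


# Hypothetical variance component estimates (ln scale)
components = {"analyst": 0.004, "day": 0.010, "inter-assay": 0.020}
total_vc = sum(components.values())

for source, vc in components.items():
    share = 100 * vc / total_vc  # share of total variance from this source
    print(f"{source:12s} %CV={pct_cv(vc):5.1f} %GCV={pct_gcv(vc):5.1f} share={share:4.1f}%")
print(f"{'total':12s} %CV={pct_cv(total_vc):5.1f} %GCV={pct_gcv(total_vc):5.1f}")
```

Because variances (not CVs) are additive, the components are summed on the log scale first and converted to a percentage only at the end.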

This approach enables practitioners to identify whether variability stems predominantly from analyst-to-analyst differences, day-to-day variation, or inter-assay effects, allowing targeted improvement efforts.

Bridging Different Assay Platforms

When combining data from different assays, statistical bridging methods are essential. The left-censored multivariate normal model accommodates differences in both measurement error and lower limits of detection (LOD) between assays [11]. The protocol involves:

- Paired Sample Collection: Obtain samples measured by both assays (e.g., Duke and Monogram nAb assays).

- Model Establishment: Assume (X₁, X₂) follow a bivariate normal distribution, where X₁ and X₂ represent measurements from two different assays.

- Parameter Estimation: Account for left-censoring due to different LODs in each assay.

- Calibration: Use established relationships to convert measurements between assays or combine data for meta-analysis [11].

This method prevents misleading conclusions when comparing immunogenicity between vaccine regimens or evaluating correlates of risk using data from different assays [11].
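The calibration step can be illustrated with a deliberately simplified stand-in. The sketch below is not the left-censored multivariate normal model from [11]: it fits an ordinary least-squares line on log10 titers using only paired samples above both LODs, which ignores censoring and can bias the fit when many samples fall below an LOD. All data, LOD values, and function names are hypothetical.

```python
import math


def fit_log_calibration(pairs, lod_a, lod_b):
    """OLS of log10(assay B) on log10(assay A), keeping pairs above both LODs.

    Simplification: censored pairs are dropped rather than modeled.
    Returns (slope, intercept, number of pairs used).
    """
    kept = [(math.log10(a), math.log10(b))
            for a, b in pairs if a > lod_a and b > lod_b]
    n = len(kept)
    mean_x = sum(x for x, _ in kept) / n
    mean_y = sum(y for _, y in kept) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in kept)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in kept)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x, n


def convert_a_to_b(titer_a, slope, intercept):
    """Map an assay A titer onto the assay B scale using the fitted line."""
    return 10 ** (intercept + slope * math.log10(titer_a))


# Hypothetical paired titers (assay A, assay B); the last pair is below assay A's LOD
paired = [(20, 40), (40, 80), (80, 160), (160, 320), (5, 40)]
slope, intercept, n_used = fit_log_calibration(paired, lod_a=10, lod_b=20)
print(n_used, round(slope, 3), round(intercept, 3))
print(round(convert_a_to_b(100, slope, intercept), 1))
```

A full implementation of the censored model would instead maximize a likelihood in which values below an LOD contribute a cumulative probability rather than a density.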

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Research Reagents and Their Functions in Immunoassays

| Reagent Category | Specific Examples | Function & Importance |
|---|---|---|
| Solid Surfaces | Greiner high-binding plates, Nunc plates | Optimal antigen/antibody immobilization with minimal lot-to-lot variability [8] |
| Coating Buffers | 50 mM sodium bicarbonate (pH 9.6), PBS (pH 8.0) | Maximize binding efficiency of capture antibodies or antigens to solid phase [8] |
| Blocking Buffers | 1% BSA in TBS, Casein-based blockers, Heterophilic blocking reagents | Reduce non-specific binding to minimize background signal [8] |
| Wash Buffers | PBST (0.05% Tween-20), TBST | Remove unbound reagents while maintaining assay integrity [8] |
| Detection Systems | HRP/TMB, Alkaline phosphatase/pNPP | Generate measurable signal with optimal signal-to-noise ratio [8] |
| Reference Materials | Authenticated, low-passage cell banks, Characterized serum pools | Provide standardization across laboratories and over time [13] [9] |


Strategies for Managing Variability

Measurement Assurance Framework

A systematic measurement assurance framework identifies, minimizes, and monitors variability throughout the experimental process [13]. This approach includes:

- Clearly Defined Measurands: Precisely specify what physical property is being measured (e.g., "number of cells fluorescently labeled with a membrane-impermeant dye" for viability counting) [13].

- Fit-for-Purpose Validation: Qualify assays for sensitivity, limits of detection, linearity, specificity, accuracy, precision, and robustness [13].

- In-Process Controls: Implement controls at each step to monitor variability during sample preparation, data collection, and analysis [13].

- Reference Materials: Use authenticated biological reference materials with documented performance characteristics to calibrate measurements across instruments and laboratories [13].

Statistical and Design Approaches

Robust experimental design significantly reduces variability. Design of Experiment (DOE) methodologies systematically evaluate the sensitivity of assays to changes in experimental parameters, establishing acceptable performance ranges for critical factors such as enzymatic treatment times, reagent concentrations, and incubation conditions [13]. Pre-registering studies, including detailed methodologies, helps standardize approaches across laboratories and reduces selective reporting [9]. Publishing negative data is equally valuable, as it helps interpret positive results and prevents resource waste on irreproducible findings [9].

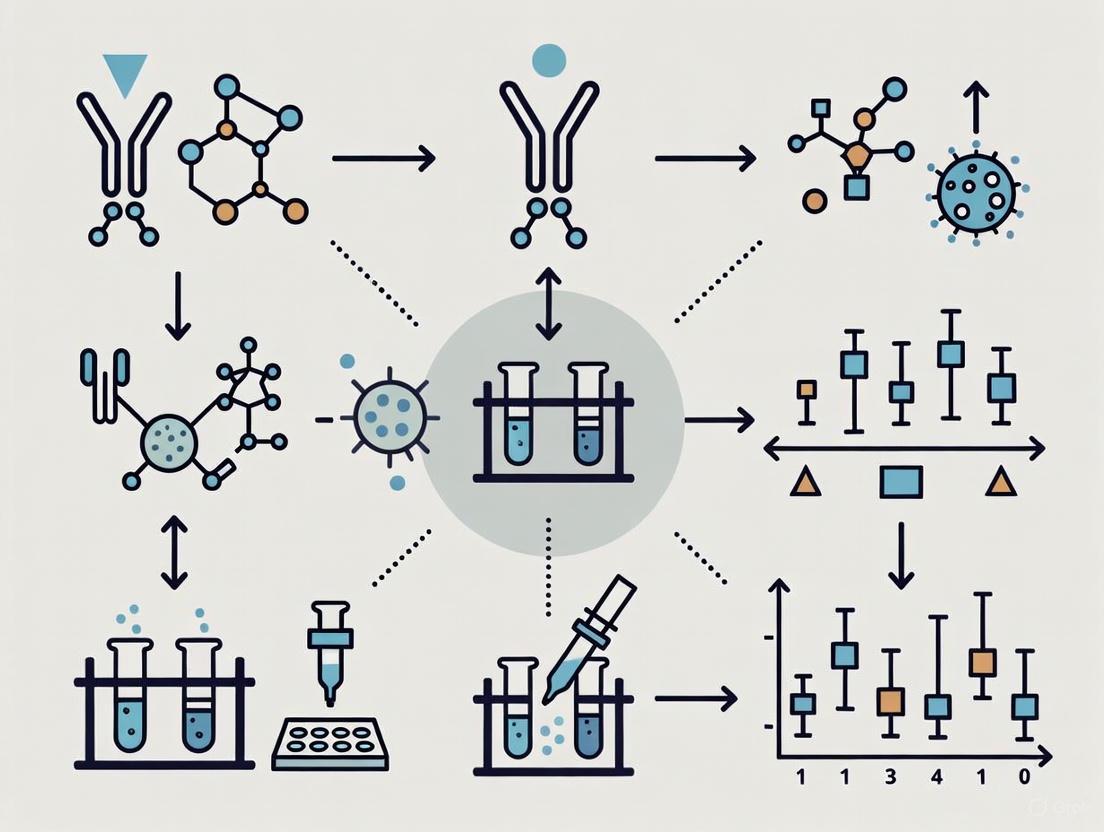

The above diagram illustrates a systematic framework for managing variability throughout the measurement process, incorporating specific assurance tools at each experimental stage.

Variability in immunological assays arises from interconnected technical and biological sources spanning the entire experimental workflow. Evidence from multi-laboratory studies demonstrates that consistent implementation of measurement assurance strategies—including standardized protocols, validated reagents, appropriate statistical bridging, and reference materials—significantly improves reproducibility. While some variability is inherent to complex biological systems, systematic approaches to its identification and management enable more reliable data interpretation and cross-study comparisons, ultimately accelerating drug development and scientific discovery.

Immunogenicity—the unwanted immune response to therapeutic biologics or vaccines—poses a significant challenge throughout the drug development pipeline. For protein-based therapeutics, immunogenicity can trigger the development of anti-drug antibodies (ADAs) that reduce efficacy, alter pharmacokinetics, and potentially cause severe adverse events [14]. Similarly, vaccine development requires careful assessment of immunogenicity to ensure consistent protection against targeted pathogens. The reproducibility of immunological assays across different laboratories is therefore paramount for accurately evaluating product performance, enabling meaningful comparisons between platforms, and ensuring regulatory compliance.

This guide objectively compares experimental approaches for immunogenicity and vaccine assessment, focusing on interlaboratory reproducibility data. We examine case studies across therapeutic classes, provide detailed methodological protocols, and present quantitative comparisons of assay performance to support scientific and regulatory decision-making.

Comparative Analysis of Immunological Assay Reproducibility

Reproducibility of the Meningococcal MEASURE Assay

The Meningococcal Antigen Surface Expression (MEASURE) assay was developed by Pfizer as a flow-cytometry-based method to quantify surface-expressed factor H binding protein (fHbp) on intact meningococci. This assay addresses limitations of the traditional serum bactericidal antibody assay using human complement (hSBA), which is constrained by its requirement for human sera and complement [15].

Table 1: Interlaboratory Reproducibility of the MEASURE Assay

| Performance Metric | Pfizer Laboratory | UKHSA Laboratory | CDC Laboratory | Overall Agreement |
|---|---|---|---|---|
| Strain Classification Agreement | Reference | >97% concordance | >97% concordance | >97% across all sites |
| Precision (Total RSD) | ≤30% | ≤30% | ≤30% | All sites met criteria |
| Key Threshold | Mean fluorescence intensity above 1000 indicates predicted susceptibility to MenB-fHbp-induced antibodies | | | |
| Number of MenB Strains Tested | 42 strains encoding sequence-diverse fHbp variants | | | |
| Study Design | Intermediate precision within each laboratory; pairwise comparisons between laboratories | | | |

This interlaboratory study demonstrated that MEASURE assay results were highly consistent across three independent laboratories (Pfizer, UKHSA, and CDC), with >97% agreement in classifying strains above or below the critical threshold for predicting susceptibility to vaccine-induced antibodies [15]. Each laboratory met precision criteria of ≤30% total relative standard deviation, establishing the MEASURE assay as a robust and reproducible platform for meningococcal vaccine assessment.
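The pairwise agreement figure can be illustrated with a short sketch that classifies each strain as at or above versus below the 1000 MFI threshold and counts how often two laboratories give the same call. The MFI values, the laboratory labels, and the treatment of a value exactly equal to the threshold are hypothetical assumptions, not data or rules from the study.

```python
from itertools import combinations

THRESHOLD_MFI = 1000  # threshold quoted in the text


def at_or_above_threshold(mfi):
    """Binary call for one strain (handling of exact ties is an assumption)."""
    return mfi >= THRESHOLD_MFI


def pairwise_agreement(results):
    """results: {lab: [MFI per strain, same strain order in every lab]}.

    Returns {(lab_a, lab_b): percent of strains given the same call}.
    """
    agreement = {}
    for lab_a, lab_b in combinations(sorted(results), 2):
        calls = zip(results[lab_a], results[lab_b])
        same = sum(at_or_above_threshold(x) == at_or_above_threshold(y) for x, y in calls)
        agreement[(lab_a, lab_b)] = 100 * same / len(results[lab_a])
    return agreement


# Hypothetical MFI values for five strains measured in three laboratories
mfi = {
    "lab_a": [250, 980, 1500, 4200, 12000],
    "lab_b": [300, 1020, 1400, 3900, 11000],
    "lab_c": [280, 950, 1600, 4500, 12500],
}
agreement = pairwise_agreement(mfi)
for pair, pct in agreement.items():
    print(pair, f"{pct:.0f}%")
```

In this toy data set, disagreement comes only from the strain whose MFI sits near the threshold, which is typically where between-laboratory precision matters most for a binary classification.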

Reproducibility of Anti-HPV ELISA Isotype Detection

A comprehensive study evaluated the reproducibility of enzyme-linked immunosorbent assays (ELISAs) for detecting different anti-human papillomavirus (HPV) immunoglobulin isotypes in samples from the Costa Rica HPV Vaccine Trial [16].

Table 2: Reproducibility Performance of Anti-HPV16 L1 Isotype ELISAs

| Assay Isotype | Inter-Technician CV (%) | Inter-Day CV (%) | Overall CV (%) | Detectability in Vaccinated Participants | Intraclass Correlation Coefficient (ICC) |
|---|---|---|---|---|---|
| IgG1 | 12.8 | 6.2 | 7.7 | >86.3% | >98.7% |
| IgG3 | 22.7 | 30.6 | 31.1 | 100% | >98.7% |
| IgA | 16.2 | 19.4 | 19.8 | >86.3% | >98.7% |
| IgM | 15.8 | 25.3 | 26.4 | 62.1% | >98.7% |
| Assay Cut-off (EU/mL) | IgG1: 12; IgG3: 1.25; IgA: 0.48; IgM: 4.79 | | | | |

The data revealed that IgG1 exhibited the highest precision (lowest coefficients of variation), while IgG3 showed the greatest variability (overall CV 31.1%), followed by IgM (26.4%). IgG3 was detected in all vaccinated participants, whereas IgM had limited detectability (62.1%). All assays demonstrated excellent reliability, with ICC values exceeding 98.7% [16]. Correlation analyses showed significant relationships between IgG subclasses and IgA, but not with IgM, which informs interpretation of humoral immune responses to HPV vaccination.

Experimental Protocols for Immunological Assays

Three-Tiered Immunogenicity Testing Approach for Biologics

The FDA recommends a three-tiered testing approach for detecting anti-drug antibodies (ADAs) against therapeutic proteins during drug development [14]:

Tier 1: Screening Assay

- Purpose: Maximize sensitivity to minimize false-negatives

- Methodology: Ligand-binding immunoassay format

- Procedure:

- Dilute patient samples in appropriate buffer

- Incubate with immobilized therapeutic protein

- Wash to remove unbound components

- Detect bound antibodies using labeled secondary antibodies

- Compare signals to predetermined cut point (typically based on negative control population)

Tier 2: Confirmatory Assay

- Purpose: Establish specificity and minimize false-positives

- Methodology: Competitive inhibition format

- Procedure:

- Split positive samples from Tier 1 into two aliquots

- Pre-incubate one aliquot with excess soluble therapeutic protein

- Pre-incubate second aliquot with buffer only

- Process both aliquots through Tier 1 method

- Calculate percentage inhibition: [1 - (Signal with inhibitor/Signal without inhibitor)] × 100%

- Samples exceeding predetermined inhibition cut point (e.g., >50%) are confirmed positive

Tier 3: Characterization Assays

- Purpose: Determine ADA titer, isotype, and neutralizing capacity

- Methodologies:

- Titer Determination: Serial dilution of confirmed positive samples until signal falls below cut point

- Isotyping: Use of isotype-specific secondary antibodies

- Neutralizing Antibody Assays: Cell-based or competitive ligand-binding formats to assess interference with therapeutic protein function

A significant limitation of this approach is the reliance on positive controls created in non-human species, which may not accurately represent human ADA responses [14].
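The Tier 2 and Tier 3 calculations described above can be sketched as follows. The percent inhibition formula and the 50% example cut point come from the text; the function names, the signal values, and the convention of reporting the titer as the last dilution factor still at or above the screening cut point are assumptions for illustration, since laboratories may define titers differently (for example, by interpolation).

```python
def percent_inhibition(signal_with_drug, signal_buffer_only):
    """Tier 2: [1 - (signal with inhibitor / signal without inhibitor)] x 100."""
    return (1 - signal_with_drug / signal_buffer_only) * 100


def is_confirmed_positive(signal_with_drug, signal_buffer_only, inhibition_cut_point=50.0):
    """Confirmed when inhibition exceeds the cut point (50% is the text's example)."""
    return percent_inhibition(signal_with_drug, signal_buffer_only) > inhibition_cut_point


def titer(signal_by_dilution, screening_cut_point):
    """Tier 3: last dilution factor in the series with signal at or above the cut point.

    Returns None if even the lowest dilution is below the cut point.
    """
    last_positive = None
    for dilution in sorted(signal_by_dilution):
        if signal_by_dilution[dilution] < screening_cut_point:
            break
        last_positive = dilution
    return last_positive


# Hypothetical signals for one sample that screened positive in Tier 1
print(percent_inhibition(200.0, 1000.0))
print(is_confirmed_positive(200.0, 1000.0))
print(titer({10: 2.0, 100: 1.2, 1000: 0.4, 10000: 0.1}, screening_cut_point=0.5))
```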

MEASURE Assay Protocol

The MEASURE assay protocol for quantifying fHbp expression on meningococcal surfaces consists of the following key steps [15]:

Bacterial Culture Preparation:

- Grow MenB strains to mid-logarithmic phase in appropriate media

- Standardize bacterial density using optical density measurements

Antibody Staining:

- Incubate bacteria with primary anti-fHbp antibody

- Wash to remove unbound antibody

- Incubate with fluorochrome-conjugated secondary antibody

- Include isotype controls for background determination

Flow Cytometry Analysis:

- Acquire data on flow cytometer using standardized instrument settings

- Gate on bacterial population based on light scatter properties

- Analyze minimum of 10,000 events per sample

- Report results as mean fluorescence intensity (MFI)

Data Interpretation:

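- Compare MFI values to the established threshold of 1000

- Strains with MFI above 1000 are considered susceptible to MenB-fHbp-induced antibodies, because higher fHbp surface expression provides more target for vaccine-induced antibody binding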

HPV Isotype ELISA Protocol

The detailed protocol for anti-HPV16 L1 isotype ELISAs includes these critical steps [16]:

Plate Coating:

- Coat 96-well plates with HPV16 L1 virus-like particles (VLPs) at 50 ng/well in PBS

- Incubate overnight at 4°C

- Block with PBS containing 1% bovine serum albumin and 0.05% Tween-20

Sample and Standard Preparation:

- Prepare serum standard pools from vaccinated individuals at predetermined dilutions (IgG1: 1:4200; IgG3: 1:3150; IgA: 1:200; IgM: 1:300)

- Create standard curves using 2-fold serial dilutions

- Include quality control samples at low, medium, and high concentrations

- Test clinical samples at optimal dilutions determined during assay development

Assay Procedure:

- Add standards and samples to coated plates in duplicate

- Incubate for 2 hours at room temperature

- Wash plates extensively

- Add isotype-specific horseradish peroxidase-conjugated detection antibodies at optimized concentrations (IgG1: 150 ng/mL; IgG3: 233 ng/mL; IgA: 67 ng/mL; IgM: 40 ng/mL)

- Incubate for 1 hour at room temperature

- Develop with tetramethylbenzidine substrate

- Stop reaction with sulfuric acid

- Read optical density at 450 nm with reference wavelength at 620 nm

Data Analysis:

- Generate standard curves using 4-parameter logistic regression

- Apply cut-off values determined from naive samples (3 standard deviations above geometric mean)

- Calculate intra- and inter-assay coefficients of variation

- Determine intraclass correlation coefficients for reproducibility assessment
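Two of the data analysis steps above lend themselves to a short sketch: reading a concentration back from a fitted 4-parameter logistic curve, and deriving a cut-off from naive samples. The curve parameters and sample values are hypothetical, the curve is assumed to be already fitted (no fitting routine is shown), and "3 standard deviations above geometric mean" is interpreted here on the natural-log scale, which is one plausible reading rather than the documented procedure.

```python
import math
from statistics import mean, stdev


def four_pl(x, a, b, c, d):
    """4PL curve: a = response at zero dose, d = response at infinite dose,
    c = inflection point, b = slope factor."""
    return d + (a - d) / (1 + (x / c) ** b)


def inverse_four_pl(y, a, b, c, d):
    """Back-calculate concentration from a response between a and d."""
    return c * ((a - d) / (y - d) - 1) ** (1 / b)


def cutoff_from_naive(values, n_sd=3):
    """Geometric mean plus n_sd standard deviations, computed on ln values."""
    logs = [math.log(v) for v in values]
    return math.exp(mean(logs) + n_sd * stdev(logs))


# Hypothetical fitted parameters (optical density versus EU/mL)
params = dict(a=0.05, b=1.2, c=10.0, d=3.0)

od = four_pl(5.0, **params)
print(round(od, 3), round(inverse_four_pl(od, **params), 3))

# Hypothetical naive-sample results (EU/mL)
naive = [0.8, 1.1, 0.9, 1.3, 1.0, 0.7]
print(round(cutoff_from_naive(naive), 2))
```

Back-calculation is only meaningful for responses strictly between the two asymptotes; values outside that range would normally be reported as below or above the quantifiable range.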

Immunogenicity Pathways and Testing Workflows

Figure 1: Immunogenicity Cascade Pathway. This diagram illustrates the sequential immune events following biologic administration, from initial innate immune activation to potential clinical consequences of anti-drug antibody production. Route of administration influences immunogenicity risk [14]. ADA: anti-drug antibody; PK: pharmacokinetics.

Figure 2: Three-Tiered Immunogenicity Testing Workflow. This workflow depicts the sequential approach for anti-drug antibody detection and characterization, as recommended by FDA guidance [14]. Each tier serves a distinct purpose in ensuring accurate immunogenicity assessment.

The Scientist's Toolkit: Essential Research Reagents

Table 3: Key Research Reagent Solutions for Immunogenicity Assessment

| Reagent/Category | Function/Application | Examples/Specifications |
|---|---|---|
| Positive Controls | Semiquantitative assay calibration; quality control | Polyclonal ADAs from immunized non-human species; critical for assay standardization [14] |
| Isotype-Specific Detection Antibodies | Differentiation of immune response profiles | HRP-conjugated anti-human IgG1, IgG3, IgA, IgM; optimized concentrations for each assay [16] |
| Virus-Like Particles (VLPs) | Antigen source for vaccine immunogenicity assays | HPV16 L1 VLPs for plate coating in ELISA; maintain conformational epitopes [16] |
| Flow Cytometry Reagents | Surface antigen quantification | Anti-fHbp antibodies, fluorochrome-conjugated secondaries; standardized for bacterial staining [15] |
| Assay Standards | Quantitative comparison across laboratories | Pooled immune sera with assigned arbitrary units (EU/mL); enables normalization [16] |
| Cell-Based Reporter Systems | Innate immune response profiling | THP-1 and RAW-Blue reporter cell lines; detect immunogenicity-risk impurities [17] |

The case studies presented demonstrate that robust, reproducible immunological assays are achievable across multiple laboratories when standardized protocols, calibrated reagents, and validated analysis methods are implemented. The MEASURE and HPV isotype ELISA platforms show how precise quantification of vaccine antigens and immune responses enables reliable product characterization and comparison.

Reproducibility challenges persist, particularly regarding positive control preparation for ADA assays and interpretation of results across different assay platforms [14]. Emerging approaches, including quantitative systems pharmacology models and computational prediction of immunogenic epitopes, show promise for enhancing immunogenicity risk assessment during early drug development [14] [18]. As biologic therapeutics and novel vaccine platforms continue to evolve, standardized assessment of immunogenicity will remain crucial for ensuring product safety, efficacy, and comparability.

The Role of International Consortia and Standardization Initiatives

The reproducibility of immunological assays across different laboratories is a cornerstone of reliable biomedical research and drug development. Variability in assay protocols, reagents, and interpretation criteria can significantly compromise data comparability, potentially delaying diagnostic advancements and therapeutic innovations. International consortia and standardization initiatives have emerged as essential forces in addressing these challenges by establishing harmonized protocols, developing reference materials, and implementing quality assurance programs. These collaborative efforts provide the critical framework needed to ensure that experimental results are consistent, comparable, and transferable from research settings to clinical applications, ultimately strengthening the scientific foundation upon which diagnostic and therapeutic decisions are made.

The absence of analytical standards can lead to startling discrepancies in critical diagnostic tests. For example, a study of estrogen receptor (ER) testing across accredited laboratories revealed that while one laboratory's assay could detect 7,310 target molecules per cell, another required 74,790 molecules—a tenfold difference in analytical sensitivity—to produce a visible result, despite both laboratories passing national proficiency testing [19]. Such inconsistencies underscore the vital role that standardization bodies play in aligning methodological sensitivity and ensuring that assays performed in different settings yield clinically equivalent results.

Major International Standardization Initiatives

Several prominent organizations and consortia have established frameworks to improve the accuracy and reproducibility of immunological assays across laboratories worldwide. These initiatives range from broad regulatory standards to focused technical consortia targeting specific methodological challenges.

Consortium for Analytic Standardization in Immunohistochemistry (CASI)

Established with funding from the National Cancer Institute, CASI addresses a fundamental gap in immunohistochemistry (IHC) testing—the lack of analytical standards. Its mission centers on integrating analytical standards into routine IHC practice to improve test accuracy and reproducibility [19]. CASI operates under two primary mandates: experimentally determining analytical sensitivity thresholds (lower and upper limits of detection) for selected IHC assays, and educating IHC stakeholders about what analytical standards are, why they matter, and how they should be used [19].

CASI promotes the use of quantitative IHC calibrators composed of purified analytes conjugated to solid-phase microbeads at defined concentrations traceable to the National Institute of Standards and Technology (NIST) Standard Reference Material 1934 [19]. This approach allows laboratories to objectively measure their assay's lower limit of detection (LOD) and align it with the analytical sensitivity of original clinical trial assays, thereby creating a crucial link between research validation and diagnostic implementation.

External Quality Assurance Programs

External quality assurance (EQA) programs, also known as proficiency testing, serve as practical tools for assessing and improving interlaboratory consistency. The Spanish Society for Immunology's GECLID program represents a comprehensive example, running 13 distinct EQA schemes for histocompatibility and immunogenetics testing [20]. Between 2011 and 2024, this program collected and evaluated over 1.69 million results across various assay types, including anti-HLA antibody detection, molecular typing, chimerism analyses, and crossmatching [20].

These programs enable ongoing performance monitoring and harmonization across participating laboratories. The success rates reported by GECLID demonstrate the effectiveness of such initiatives, with molecular typing schemes achieving 99.2% success, serological typing at 98.9%, crossmatches at 96.7%, and chimerism analyses at 94.8% [20]. Importantly, in 2022, 61.3% of participating laboratories successfully passed every HLA EQA scheme, while 87.9% of annual reports were rated satisfactory, indicating generally strong performance with targeted areas for improvement [20].

International Assay Comparison Studies

Collaborative studies across multiple laboratories have played a pivotal role in understanding sources of variability and establishing standardized approaches. A landmark international collaborative study published in 1990, in which 11 laboratories compared 14 different methods for detecting HIV-neutralizing antibodies, demonstrated that excellent between-laboratory consistency was achievable [21]. The study identified the virus strain as the most important variable, whereas cell line, culture conditions, and endpoint determination method had less impact [21].

Similar approaches have been applied to influenza serology. A 2020 comparison of influenza-specific neutralizing antibody assays found that while different microneutralization (MN) assay readouts (cytopathic effect, hemagglutination, ELISA, RT-qPCR) showed good correlation, the agreement of nominal titers varied significantly depending on the readouts compared and the virus strain used [22]. The study identified the MN assay with ELISA readout as having the highest potential for standardization due to its reproducibility, cost-effectiveness, and unbiased assessment of results [22].

Comparative Analysis of Standardization Initiatives

The table below summarizes key international standardization initiatives, their focal areas, and their documented impacts on assay reproducibility.

Table 1: Comparison of Major International Standardization Initiatives in Immunological Assays

| Initiative/Program | Primary Focus | Key Metrics | Impact on Reproducibility |

|---|---|---|---|

| Consortium for Analytic Standardization in Immunohistochemistry (CASI) [19] | Developing analytical standards for IHC assays | Lower/upper limits of detection; quantitative calibrators traceable to NIST | Addresses 10-30% discordance rates in IHC testing; enables standardized method transfer from clinical trials to diagnostics |

| GECLID External Quality Assurance [20] | Proficiency testing for immunogenetics laboratories | More than 1.69 million results evaluated; 13 specialized schemes; 99.2% success rate for molecular typing | Identifies error sources (nomenclature, risk interpretation); ensures homogeneous results across different methods and laboratories |

| International HIV Neutralization Assay Comparison [21] | Method comparison for HIV antibody detection | 11 laboratories; 14 methods compared; virus strain identified as key variable | Demonstrated excellent between-laboratory consistency; established that standardization is readily achievable |

| Influenza MN Assay Standardization Study [22] | Identifying optimal readout for influenza neutralization assays | 4 MN readouts compared; ELISA readout showed highest reproducibility; correlation with HAI titers | Recommended standardized MN protocol with ELISA readout to minimize interlaboratory variability |

Experimental Protocols and Methodologies

Standardization initiatives rely on rigorous experimental approaches to evaluate and harmonize assay performance. The following section details key methodologies employed by these programs.

IHC Calibrator Protocol for Determining Limits of Detection

The CASI consortium has pioneered the use of calibrators to determine the analytical sensitivity of IHC assays [19]. The experimental workflow proceeds through several critical stages:

Figure 1: IHC Calibrator Workflow for Detection Limits

- Calibrator Preparation: Purified analytes are conjugated to clear cell-sized (7-8 µm) glass microbeads at up to 10 defined concentration levels, with values traceable to NIST Standard Reference Material 1934 [19].

- Parallel Processing: Calibrators undergo the same processing as tissue samples, including deparaffinization, hydration, and antigen retrieval [19].

- Visual Detection Threshold Determination: The lowest calibrator level that produces visible color after IHC staining establishes the lower limit of detection (LOD) [19].

- Analytic Response Characterization: Higher analyte concentrations typically show an initial linear increase in stain intensity followed by a plateau at maximum response [19].

- Quantitative Reporting: The LOD is expressed as the number of analyte molecules per cell equivalent, providing a standardized metric for comparing assay sensitivity across laboratories [19].
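
The threshold and reporting steps above can be expressed as a short Python sketch. It assumes each calibrator level has been scored as visibly stained or not; the function names and the calibrator ladder are hypothetical, while the two LOD values compared at the end are the ones reported for the ER example earlier in this section [19].

```python
def lower_limit_of_detection(calibrator_results):
    """Return the lowest calibrator level that stained visibly.

    calibrator_results: iterable of (molecules_per_cell_equivalent, visibly_stained).
    Returns None when no level produced visible color.
    """
    visible = [level for level, stained in calibrator_results if stained]
    return min(visible) if visible else None


def fold_difference(lod_a, lod_b):
    """Ratio of the less sensitive assay's LOD to the more sensitive assay's LOD."""
    return max(lod_a, lod_b) / min(lod_a, lod_b)


if __name__ == "__main__":
    # Hypothetical calibrator ladder (molecules per cell equivalent, visible?).
    ladder = [
        (2_000, False),
        (5_000, False),
        (12_000, True),
        (40_000, True),
        (150_000, True),
    ]
    print("LOD:", lower_limit_of_detection(ladder))

    # LOD values from the ER example cited in the text [19].
    print("Fold difference:", round(fold_difference(7_310, 74_790), 1))
```

Expressing the LOD in molecules per cell equivalent is what makes the fold difference between two laboratories directly comparable.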

External Quality Assurance (EQA) Assessment Methodology

The GECLID program follows a rigorous protocol for administering and evaluating EQA schemes [20]:

Figure 2: External Quality Assurance Assessment Process

- Sample Selection and Distribution: Programs obtain peripheral blood (buffy coats) and sera from biobanks, with minimal processing to maintain samples representative of routine clinical specimens [20].

- Participant Analysis: Laboratories process samples using their standard protocols, including both commercial kits and laboratory-developed tests, reflecting real-world practice [20].

- Result Collection: Participants report results through standardized web forms, capturing data on methodology, reagents, and interpretation criteria [20].

- Consensus Value Assignment: Using algorithms specified in ISO 13528, the program analyzes all reported results to determine assigned values for each parameter [20].

- Performance Evaluation: Individual laboratory results are compared against consensus values using scoring systems aligned with European Federation for Immunogenetics (EFI) standards [20].

- Reporting: Comprehensive reports detail overall performance metrics alongside individualized feedback, identifying specific areas requiring improvement [20].
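
For quantitative parameters, consensus assignment and performance scoring can be sketched as follows. The text does not say which ISO 13528 algorithm GECLID applies, so this example assumes the iterative robust mean and standard deviation commonly referred to as Algorithm A, followed by conventional z-score bands (|z| ≤ 2 satisfactory, 2 < |z| < 3 questionable, |z| ≥ 3 unsatisfactory). Function names and data are illustrative, and qualitative results such as HLA typings would need a different consensus rule.

```python
import statistics


def robust_mean_sd(values, tol=1e-6, max_iter=100):
    """Robust mean and SD by iterative winsorization (Algorithm A style)."""
    x = statistics.median(values)
    s = 1.483 * statistics.median([abs(v - x) for v in values])
    for _ in range(max_iter):
        delta = 1.5 * s
        # Pull extreme results in to x +/- delta before re-estimating.
        clipped = [min(max(v, x - delta), x + delta) for v in values]
        new_x = statistics.mean(clipped)
        new_s = 1.134 * statistics.stdev(clipped)
        converged = abs(new_x - x) < tol and abs(new_s - s) < tol
        x, s = new_x, new_s
        if converged:
            break
    return x, s


def z_score(result, assigned_value, sigma):
    """Standardized deviation of one laboratory's result from the assigned value."""
    return (result - assigned_value) / sigma


def classify(z):
    """Map a z-score to the conventional proficiency-testing bands."""
    if abs(z) <= 2.0:
        return "satisfactory"
    if abs(z) < 3.0:
        return "questionable"
    return "unsatisfactory"


if __name__ == "__main__":
    # Illustrative results reported by seven laboratories for one parameter.
    reported = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 14.5]
    assigned, sigma = robust_mean_sd(reported)
    for value in reported:
        print(value, classify(z_score(value, assigned, sigma)))
```

A robust estimator is used so that a single outlying laboratory does not shift the assigned value against which every participant is scored.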

International Method Comparison Protocol

The international HIV neutralization assay study established a model for multi-laboratory method comparisons [21]:

- Panel Design: A blinded panel of 10 coded sera plus positive and negative controls was distributed to all participating laboratories [21].

- Parallel Testing: Each laboratory tested the panel using its own established neutralization assay methods (14 different methods across 11 laboratories) [21].

- Data Collection: Laboratories reported both methodological details (virus strain, cell line, culture conditions, endpoint determination) and experimental results [21].

- Correlation Analysis: Results were analyzed for within-laboratory and between-laboratory consistency, identifying key variables contributing to variability [21].

- Recommendation Development: Based on findings, the study identified the virus strain as the most critical variable requiring standardization for improved reproducibility [21].
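
One common way to quantify between-laboratory consistency in a study of this design is a one-way intraclass correlation coefficient on log-transformed titers. The sketch below is an assumption about how such an analysis could be done, not a description of the statistics used in the 1990 study; the function name and the titers are illustrative.

```python
import math
import statistics


def icc_oneway(table):
    """ICC(1,1) from a one-way random-effects ANOVA.

    table: one row per serum, each row holding that serum's log titers as
    measured by k laboratories (same k for every row).
    """
    n = len(table)
    k = len(table[0])
    grand_mean = statistics.mean(v for row in table for v in row)
    row_means = [statistics.mean(row) for row in table]
    ms_between = k * sum((m - grand_mean) ** 2 for m in row_means) / (n - 1)
    ms_within = sum(
        (v - m) ** 2 for row, m in zip(table, row_means) for v in row
    ) / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


if __name__ == "__main__":
    # Illustrative reciprocal titers: four sera, each tested by three laboratories.
    titers = [
        [40, 80, 40],
        [320, 640, 320],
        [1280, 1280, 2560],
        [20, 20, 40],
    ]
    log_titers = [[math.log2(t) for t in row] for row in titers]
    print("ICC:", round(icc_oneway(log_titers), 3))
```

Values close to 1 indicate that most of the variance comes from true differences between sera rather than from disagreement between laboratories.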

The Scientist's Toolkit: Essential Research Reagent Solutions

Successful implementation of standardized immunological assays requires specific reagent solutions and reference materials. The following table outlines key components used in standardization initiatives.

Table 2: Essential Research Reagent Solutions for Assay Standardization

| Reagent/Resource | Function in Standardization | Application Examples |

|---|---|---|

| Primary Reference Standards [19] | Fully characterized materials with known analyte concentrations from accredited agencies (NIST, WHO) | Serve as metrological foundation for traceability; used by companies preparing secondary reference standards |

| Secondary Reference Standards (Calibrators) [19] | Materials with assigned analyte concentrations derived from primary standards | IHC calibrators with defined molecules/cell equivalent; enable quantitative sensitivity measurements |

| International Standard Sera [21] [22] | Well-characterized antibody preparations for interlaboratory normalization | WHO reference anti-HIV-1 serum; standard sera for influenza neutralizing antibody comparisons |

| Stable Control Materials [20] | Quality control samples mimicking clinical specimens | Peripheral blood and serum samples distributed in EQA schemes; ensure representative testing conditions |

| Matched Antibody Pairs [23] [24] | Optimized antibody combinations for specific capture and detection | Sandwich ELISA kits; ensure consistent recognition of target epitopes across laboratories |

Impact Assessment and Future Directions

Standardization initiatives have demonstrated measurable benefits for assay reproducibility and reliability. The adoption of analytical standards in clinical chemistry offers instructive precedents: the National Glycohemoglobin Standardization Program dramatically improved hemoglobin A1c testing, while standardization of cholesterol testing reduced error rates from 18% to less than 5%, with estimated healthcare savings exceeding $100 million annually [19].

For immunohistochemistry, the integration of calibrators and analytical standards is expected to enable three key advancements: (1) harmonization and standardization of IHC assays across laboratories; (2) improved test accuracy and reproducibility; and (3) dramatically simplified method transfer of new IHC protocols from published literature or clinical trials to diagnostic laboratories [19].

The evolving regulatory landscape, including the EU In Vitro Diagnostic Device Regulation, now requires appropriateness evaluation for laboratory-developed tests, further emphasizing the importance of standardized approaches and participation in proficiency testing schemes [20]. Future directions will likely include expanded reference material availability, harmonized reporting standards, and the integration of new technologies such as digital pathology and artificial intelligence for more objective result interpretation.

International consortia and standardization initiatives provide indispensable frameworks for ensuring the reproducibility and reliability of immunological assays across research laboratories and clinical diagnostics. Through the development of reference materials, establishment of standardized protocols, and implementation of quality assurance programs, these collaborative efforts address critical sources of variability and create the foundation for robust, comparable data generation. As biomarker discovery advances and personalized medicine evolves, the role of these standardization bodies will become increasingly vital in translating research findings into clinically actionable information that improves patient care and therapeutic outcomes.

Implementing Standardized Protocols for Common Immunoassays

Best Practices for the Dendritic Cell (DC) Maturation Assay

The dendritic cell (DC) maturation assay is a critical tool in the non-clinical immunogenicity risk assessment toolkit during drug development. It evaluates the ability of a therapeutic candidate to induce maturation of immature monocyte-derived DCs (moDCs), serving as an indicator of factors that may initiate an innate immune response and contribute to an adaptive immune response [25]. As therapeutic modalities increase in structural and functional complexity, ensuring the reproducibility and robustness of this assay across different laboratories has become paramount for meaningful data comparison and candidate selection [25] [26]. This guide outlines best practices, standardized protocols, and comparative data to achieve reliable and reproducible DC maturation assays.

Purpose and Application of the DC Maturation Assay

The primary objective of the DC maturation assay is to assess the adjuvanticity potential of biotherapeutics, which can contribute to the risk of developing anti-drug antibodies (ADA) [25]. The assay enables the ranking of different drug candidates based on their capacity to trigger DC maturation.

Key applications include:

- Candidate Screening: Comparing and ranking variants of molecules during development processes [25].

- Impact Assessment of Product Attributes: Investigating the effects of critical quality attributes (CQAs) such as protein aggregates, host cell proteins, or formulation components [25] [27].

- Mechanistic Studies: Understanding stimulatory effects mediated by target engagement, candidate payloads, or specific impurities under defined conditions [25].

It is crucial to note that the absence of observed DC maturation does not imply the absence of T-cell epitopes in the therapeutic product. Therefore, this assay should be used alongside other preclinical immunogenicity assays, such as MHC-associated peptide proteomics (MAPPs) and T-cell activation assays, to obtain a comprehensive risk assessment [25].

Core Signaling Pathways in DC Maturation

The maturation of DCs is a fundamental process linking innate and adaptive immunity. The following diagram illustrates the key signaling pathways involved in DC maturation and subsequent T-cell activation.

Figure 1: Signaling Pathway in DC Maturation and T-Cell Activation. Immature DCs (iDCs) recognize pathogenic stimuli or drug product impurities via Pattern Recognition Receptors (PRRs). This triggers a maturation process, leading to upregulated surface expression of costimulatory molecules (CD80, CD86, CD83, CD40) and HLA class II molecules. The mature DC (mDC) then activates naive CD4+ T cells by providing two essential signals: Signal 1 (TCR engagement with HLA-peptide complexes) and Signal 2 (co-stimulation via CD80/CD86 binding to CD28) [25].

Best Practices for a Reproducible Assay

Achieving inter-laboratory reproducibility requires standardization of key parameters. The European Immunogenicity Platform Non-Clinical Immunogenicity Risk Assessment working group (EIP-NCIRA) has provided recommendations to improve assay robustness and comparability [25].

Cell Source and Culture Standardization

- Cell Source: Use peripheral blood mononuclear cells (PBMCs) from healthy donors as the starting material. PBMCs should be obtained from reputable blood donation centers with appropriate ethical consent [25] [27].

- Monocyte Isolation: Isolate CD14+ monocytes from PBMCs by immunomagnetic positive selection, using systems such as CliniMACS or MiniMACS [28] [27].

- DC Differentiation and Maturation: Differentiate monocytes into immature DCs (iDCs) by culturing them for 5-6 days in the presence of GM-CSF (50 ng/mL) and IL-4 (50 ng/mL) [28] [27]. Induce maturation by adding a pro-inflammatory cytokine cocktail, typically including TNF-α, IL-1β, IL-6, and PGE2 [28].

Critical Assay Controls

Including the appropriate controls is vital for meaningful data interpretation. The table below summarizes the essential controls and their acceptance criteria.

Table 1: Essential Controls for the DC Maturation Assay

| Control Type | Purpose | Examples | Acceptance Criteria |

|---|---|---|---|

| Negative Control | Defines baseline maturation of iDCs. | Cell culture medium alone [25]. | Low expression of maturation markers (e.g., CD80, CD83, CD86). |

| Positive Control | Verifies DCs' capacity to mature. | 100 ng/mL Lipopolysaccharide (LPS) [27]. | Significant upregulation of maturation markers and cytokine production. |

| Reference Control | Provides a benchmark for comparison. | A clinically validated benchmark molecule or a known immunogenic antibody (e.g., aggregated infliximab) [25] [27]. | Consistent response profile across multiple assay runs. |

Key Readouts and Quality Control

A robust assay employs multiple readouts to comprehensively assess the maturation state.

- Phenotypic Analysis via Flow Cytometry: This is the primary readout. Measure the increased surface expression of CD80, CD83, CD86, CD40, and HLA-DR on mature DCs compared to immature DCs [25] [28] [27]. Adherence to flow cytometry standardization efforts, such as those of the EuroFlow consortium, is recommended to minimize inter-laboratory variation [26].

- Cytokine Secretion Profile: Quantify the production of pro-inflammatory cytokines such as IL-1β, IL-6, IL-8, IL-12, TNF-α, CCL3, and CCL4 in the culture supernatant using techniques like cytometric bead array (CBA) or ELISA [27].

- Quality Control (QC) of Cells: Prior to assay setup, iDCs should undergo QC. Viability must be maintained above 90% to ensure functional competence [28] [29]. The immature state should be confirmed by low expression of maturation markers.
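
The controls and QC criteria above can be combined into a simple run-acceptance check. The sketch below is illustrative: the 90% viability limit comes from the text, whereas the minimum fold change for the positive control (`min_fold`) is a hypothetical threshold that each laboratory would need to define and justify for itself. Function and variable names are assumptions.

```python
MATURATION_MARKERS = ("CD80", "CD83", "CD86")


def check_run(viability_pct, negative_mfi, positive_mfi,
              min_viability=90.0, min_fold=2.0):
    """Return (passed, problems) for one assay run.

    negative_mfi / positive_mfi map marker name -> MFI for the medium-only
    and positive-control (e.g. LPS) wells. min_fold is a hypothetical
    acceptance threshold, not a published criterion.
    """
    problems = []
    if viability_pct <= min_viability:
        problems.append(
            f"viability {viability_pct:.1f}% is not above {min_viability:.0f}%"
        )
    for marker in MATURATION_MARKERS:
        fold = positive_mfi[marker] / negative_mfi[marker]
        if fold < min_fold:
            problems.append(
                f"{marker} fold change {fold:.2f} is below {min_fold:.1f}"
            )
    return (not problems), problems


if __name__ == "__main__":
    # Illustrative MFI values only.
    medium = {"CD80": 400.0, "CD83": 150.0, "CD86": 900.0}
    lps = {"CD80": 2100.0, "CD83": 1300.0, "CD86": 6200.0}
    passed, problems = check_run(94.5, medium, lps)
    print("Run accepted:", passed, problems)
```

Returning the list of failed criteria, rather than only a pass/fail flag, makes it easier to document why a run was rejected.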

Experimental Data and Protocol Comparison

To illustrate the practical application and outcomes of the DC maturation assay, the following table summarizes experimental data generated using different therapeutic antibodies.

Table 2: Comparative DC Maturation Response to Therapeutic Antibodies and Aggregates

| Therapeutic Antibody | Humanization Status | Stress Condition | Phenotypic Changes (CD83/CD86) | Cytokine Signature | Phospho-Signaling |

|---|---|---|---|---|---|

| Infliximab | Chimeric | Heat stress (aggregates) | Marked increase | IL-1β, IL-6, IL-8, IL-12, TNF-α, CCL3, CCL4 ↑ | Syk, ERK1/2, Akt ↑ |

| Natalizumab | Humanized | Native / Stressed (non-aggregating) | No activation | No significant change | No significant change |

| Adalimumab | Fully Human | Heat stress (aggregates) | Slight variation | Slight parameter variation | Slight parameter variation |

| Rituximab | Chimeric | Heat stress (aggregates) | Slight variation | Slight parameter variation | Slight parameter variation |

Data adapted from a multi-laboratory study [27]. The results demonstrate that the propensity to activate DCs is molecule-dependent and influenced by factors like aggregation state.

Detailed Experimental Protocol

The following workflow outlines the core steps for performing a standardized DC maturation assay, integrating recommendations from multiple sources [25] [28] [27].

Figure 2: DC Maturation Assay Workflow. The process begins with the isolation and differentiation of immature DCs (iDCs), followed by a critical quality control step. iDCs are then exposed to the test articles and controls before being harvested for multiparameter analysis.

The Scientist's Toolkit: Essential Research Reagents

The table below lists key reagents and materials required to establish a reproducible DC maturation assay.

Table 3: Essential Reagent Solutions for the DC Maturation Assay

| Reagent / Material | Function / Purpose | Examples / Notes |

|---|---|---|

| CD14+ Microbeads | Immunomagnetic isolation of monocytes from PBMCs. | Clinical-grade kits (e.g., Miltenyi Biotec CliniMACS) ensure reproducibility [28]. |

| Cytokines (GM-CSF, IL-4) | Induce monocyte differentiation into immature DCs (iDCs). | Use pharmaceutical-grade cytokines and replenish on day 3 of culture [28] [27]. |

| Maturation Cocktail | Induces final maturation of antigen-loaded DCs. | Typically includes TNF-α, IL-1β, IL-6, and PGE2 [28]. |

| Flow Cytometry Antibodies | Phenotypic analysis of maturation markers. | Antibodies against CD80, CD83, CD86, CD40, and HLA-DR. Standardized panels improve cross-lab comparability [26]. |

| Cytokine Detection Kit | Quantification of secreted cytokines in supernatant. | Cytometric Bead Array (CBA) flex sets or ELISA kits for IL-1β, IL-6, IL-12, TNF-α, etc. [29] [27]. |

| Positive Control | Assay validation and system suitability. | LPS (100 ng/mL) or a known immunogenic aggregated antibody (e.g., infliximab) [27]. |

The DC maturation assay is a powerful predictive tool for assessing the innate immunogenicity risk of biotherapeutics. Its successful implementation and the ability to compare data across different projects and laboratories hinge on the adoption of standardized best practices. This includes careful attention to cell source, culture conditions, a defined set of controls, and multiple readout parameters. By adhering to these guidelines and utilizing the essential reagent toolkit, researchers can generate robust, reproducible, and meaningful data to inform candidate selection and de-risk drug development.

Flow cytometry remains a powerful, high-throughput methodology for multiparameter single-cell analysis, but its utility in multi-center research and drug development is heavily dependent on standardized procedures. The reproducibility crisis in immunological assays stems from multiple variables, including instrument configuration, antibody reagent performance, sample preparation protocols, and data analysis approaches. Recent studies have demonstrated considerable variability in flow cytometric measurements between different laboratories analyzing identical samples, limiting the comparability of data in large-scale clinical trials [30]. This comparison guide evaluates current standardization methodologies across technological platforms, antibody panel development, and procedural workflows to provide researchers and drug development professionals with evidence-based strategies for enhancing reproducibility.

Comparative Performance of Flow Cytometry Platforms

Technology Benchmarking and Detection Limits

Different flow cytometry platforms offer varying capabilities for detection sensitivity, multiparameter analysis, and reproducibility. A 2021 benchmark study systematically compared conventional, high-resolution, and imaging flow cytometry platforms using nanospheres and extracellular vesicles (EVs) to characterize detection abilities [31].

Table 1: Performance Comparison of Flow Cytometry Platforms

| Platform Type | Lower Detection Limit | Key Technological Features | Reported Applications | Sensitivity Limitations |

|---|---|---|---|---|

| Conventional Flow Cytometry (e.g., BD FACSAria III) | 300-500 nm (best case: ~150 nm) | Standard photomultiplier tubes (PMTs), fluidics optimized for cells (2-30 µm) | Immunophenotyping, cell cycle analysis | Unable to detect abundantly present smaller EVs; swarm detection of multiple particles as single events |

| High-Resolution Flow Cytometry (e.g., Apogee A60 Micro-PLUS) | <150 nm | PMTs on scatter channels, reduced wide-angle forward scatter/medium-angle light scatter, higher power lasers, decreased flow rates | EV characterization, nanoscale particle analysis | Improved but limited for smallest biological particles |

| Imaging Flow Cytometry (e.g., ImageStream X Mk II) | ~20 nm | Charge-coupled device (CCD) cameras with larger dynamic range, time delay integration (TDI), slow sheath/sample flow rates | EV characterization, submicron particle analysis | Longer acquisition times, complex data analysis |

The study found that conventional flow cytometers have a lower detection limit of 300-500 nm, improving to approximately 150 nm when optimized, which excludes the abundant smaller extracellular vesicles from analysis [31]. Conventional instruments also suffer from "swarm detection", in which multiple EVs are recorded as a single event because the fluidics are optimized for cell-sized particles (2-30 µm) [31].

High-resolution flow cytometers incorporate modifications such as changing photodiodes to PMTs on light scatter channels, adding reduced wide-angle forward scatter collection, installing higher-power lasers, and decreasing sample and sheath flow rates [31]. These modifications enable detection limits below those of conventional flow cytometers, making them increasingly prevalent in extracellular vesicle research.

Imaging flow cytometers demonstrate significantly enhanced sensitivity, detecting synthetic nanospheres as small as 20 nm, largely due to CCD cameras with greater dynamic range and lower noise than PMTs, combined with time delay integration that allows longer signal integration times for each particle [31].

Inter-Instrument Variability in Multicenter Studies

A 2020 multicenter study of standardization procedures for flow cytometry data harmonization revealed significant variability across eleven instruments from different manufacturers (models included Navios, Gallios, Canto II, Fortessa, Verse, and Aria) [30]. When the same blood control sample was analyzed on all platforms, the coefficient of variation (CV) for population frequencies ranged from 2.3% for neutrophils to 17.7% for monocytes, and the CV for mean fluorescence intensity (MFI) ranged from 10.9% for CD3 to 30.9% for CD15, despite initial harmonization procedures [30].

Table 2: Inter-Instrument Variability in Multicenter Flow Cytometry Study

| Measurement Parameter | Cell Population/Marker | Coefficient of Variation | Impact on Data Interpretation |

|---|---|---|---|

| Population Frequencies | Neutrophils | 2.3% | Minimal impact |

| Population Frequencies | Monocytes | 17.7% | Substantial impact for precise immunomonitoring |

| Marker Expression (MFI) | CD3 | 10.9% | Moderate impact for low-expression markers |

| Marker Expression (MFI) | CD15 | 30.9% | Substantial impact for quantitative comparisons |

The study further identified that lot-to-lot variations in reagents represented a significant source of variability, with three different antibody lots used during the 4-year study period showing marked variations in MFI when the same samples were analyzed [30].

Standardization of Antibody Panels and Reagents

Antibody Titration and Validation

Proper antibody titration is fundamental for generating reproducible flow cytometry data. Using incorrect antibody concentrations leads to either non-specific binding (with excess antibody) or weak signals (with insufficient antibody) [32]. The optimal concentration must be determined for each new antibody lot and specific cell type through systematic titration.

A recommended titration protocol involves preparing a series of antibody dilutions and staining identical control samples [32]. The optimal concentration provides the maximum signal-to-noise ratio—the brightest specific signal with the lowest background. This process ensures both scientific rigor and cost-effective reagent use [32].
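
Selecting the optimal dilution from a titration series can be sketched as below. The text specifies only "maximum signal-to-noise ratio"; this example assumes the stain index, a widely used metric defined as the separation between positive and negative populations divided by twice the spread of the negative population. The function names and titration data are illustrative.

```python
def stain_index(mfi_pos, mfi_neg, sd_neg):
    """Separation of positive and negative populations relative to negative spread."""
    return (mfi_pos - mfi_neg) / (2.0 * sd_neg)


def best_dilution(titration):
    """Return the titration point with the highest stain index.

    titration: list of dicts with keys 'dilution', 'mfi_pos', 'mfi_neg', 'sd_neg'.
    """
    return max(
        titration,
        key=lambda p: stain_index(p["mfi_pos"], p["mfi_neg"], p["sd_neg"]),
    )


if __name__ == "__main__":
    # Illustrative titration of one antibody lot (dilution given as 1:N).
    series = [
        {"dilution": 25, "mfi_pos": 52000, "mfi_neg": 900, "sd_neg": 300},
        {"dilution": 50, "mfi_pos": 50000, "mfi_neg": 600, "sd_neg": 220},
        {"dilution": 100, "mfi_pos": 46000, "mfi_neg": 400, "sd_neg": 160},
        {"dilution": 200, "mfi_pos": 30000, "mfi_neg": 350, "sd_neg": 150},
        {"dilution": 400, "mfi_pos": 16000, "mfi_neg": 330, "sd_neg": 145},
    ]
    for point in series:
        si = stain_index(point["mfi_pos"], point["mfi_neg"], point["sd_neg"])
        print(f"1:{point['dilution']}  stain index = {si:.1f}")
    print("Selected dilution: 1:%d" % best_dilution(series)["dilution"])
```

In this made-up series the most concentrated antibody is not the best choice, because background rises faster than the specific signal.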

For complex panels, particularly in spectral flow cytometry, careful antibody selection and staining optimization are crucial. As panel complexity increases, so does the potential for data variability from non-biological factors [33]. During the development of a 30-color spectral flow cytometry panel, researchers refined the panel iteratively, paying close attention to antibody selection, staining optimization, and stability analyses to minimize non-biological variability [33].

Ad Hoc Antibody Panel Modifications

In clinical laboratories, unforeseen situations may necessitate ad hoc modifications to validated antibody panels. The 2025 guidance from the European Immunogenicity Platform recommends keeping such modifications limited, for example to substituting or adding one or two antibodies, while maintaining assay integrity [34]. These modifications are intended for rare clinical situations and do not replace full validation protocols.

Key considerations for ad hoc modifications include assessing impacts on fluorescence compensation, antibody binding, assay sensitivity, and overall performance [34]. Proper documentation with review and approval by laboratory medical directors is essential to mitigate risks associated with these modifications. The guidance emphasizes that these are temporary adaptations, not permanent changes to validated assays [34].

Instrument Setup and Quality Control Procedures

Daily Quality Control and Standardization

Robust quality control procedures are essential for instrument stability. The PRECISESADS study developed a standard operating procedure that used 8-peak beads for daily QC to preserve intra-instrument stability throughout the 4-year project period [30]. Targets were established during initial harmonization, and instrument performance was monitored against them regularly.
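A daily check of bead MFIs against harmonization targets can be sketched as below. The ±5% tolerance, the peak labels, and the function name `qc_flags` are hypothetical choices for illustration. The source does not state the acceptance limits used in the study.

```python
def qc_flags(targets, measured, tolerance_pct=5.0):
    """Return {peak: deviation_pct} for bead peaks outside the tolerance.

    targets:  {peak_name: target MFI set at initial harmonization}
    measured: {peak_name: MFI from today's bead run}
    """
    flags = {}
    for peak, target in targets.items():
        deviation = 100.0 * (measured[peak] - target) / target
        if abs(deviation) > tolerance_pct:
            flags[peak] = round(deviation, 1)
    return flags


# Hypothetical targets and one day's bead run for a single detector.
targets = {"peak3": 1000.0, "peak8": 200000.0}
today = {"peak3": 1020.0, "peak8": 176000.0}

out_of_range = qc_flags(targets, today)
if out_of_range:
    print("QC deviation, investigate before acquiring samples:", out_of_range)
else:
    print("All bead peaks within tolerance")
```

Logging the deviation for every run, not only the failures, makes slow drift visible before it crosses the tolerance.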

To correct the variations observed in daily QC, the researchers developed an R script that normalized each center's results over the study period against the initial harmonization targets [30]. The script normalized by linear regression, taking the MFI values of the 8-peak beads obtained during initial calibration as the reference. Validation experiments demonstrated that this approach could correct intentionally introduced PMT variations of 10-15%, reducing coefficients of variation to less than 5% [30].
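The idea of regression-based normalization can be illustrated with a short sketch. This is not the PRECISESADS R script, which is not reproduced here. It is a minimal Python example that assumes an ordinary least-squares fit on untransformed bead MFIs, with the hypothetical helpers `fit_line` and `normalize` and made-up bead values. A real implementation might fit on log-transformed values, because bead peaks span several decades.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def normalize(mfi, slope, intercept):
    """Map an MFI measured today onto the reference (calibration) scale."""
    return slope * mfi + intercept


# Hypothetical bead MFIs: reference values from initial calibration and the
# same peaks measured on a day when the detector reads about 10% low.
reference_beads = [100.0, 1000.0, 10000.0, 100000.0]
todays_beads = [90.0, 900.0, 9000.0, 90000.0]

# Regress reference on today's values so the fit converts today -> reference.
slope, intercept = fit_line(todays_beads, reference_beads)

sample_mfi_today = 4500.0
print(f"Normalized sample MFI: {normalize(sample_mfi_today, slope, intercept):.0f}")
```

The regression is fitted on beads only and then applied to sample MFIs from the same day and detector, so biological differences between samples do not influence the correction.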

Standardized Startup and Shutdown Procedures

Core facilities typically implement strict protocols for instrument operation to ensure consistency and maintenance. The Houston Methodist Flow Cytometry Core provides detailed SOPs for startup and shutdown procedures [35]:

Start-up Procedure:

- Check sheath level and ensure sufficient supply for the experiment

- If sheath tank needs filling, empty waste tank

- Turn on the instrument and computer

- Set up data-acquisition protocols

- Prime instrument once

- Let instrument warm up 15 minutes prior to use [35]

Shut-down Procedure:

- Run a tube with 2 mL of 10% bleach

- Move swing arm to aspirate 1 mL of bleach

- Return swing arm to center, put instrument on RUN and HIGH for 5 minutes

- Run a tube with 2 mL of distilled water

- Aspirate 1 mL of water, return arm to center

- Put instrument on STANDBY for 5 minutes for laser cooldown

- After 5 minutes, the instrument may be turned off [35]

The Yale Research Flow Cytometry Facility adds that users must empty waste tanks and add 100 mL of bleach after each use, and refill sheath fluid tanks, unless the next user has agreed to perform these tasks [36].

Sample Preparation and Experimental Protocols

Sample Processing Standardization

Proper sample preparation is foundational for reproducible flow cytometry results. Key considerations include:

Single-Cell Suspension: Creating a monodispersed, viable cell suspension is critical. Clumps of cells obstruct the flow path, cause instrument errors, and lead to inaccurate counts [32]. For solid tissues, proper mechanical and enzymatic disaggregation must balance releasing individual cells without compromising viability or altering surface antigen expression [32].

Filtration: Filtering cell suspensions through fine mesh filters (typically 40-70 μm) removes cell clumps, debris, and tissue fragments, preventing clogs in the fluidic system and ensuring a uniform sample stream [32]. The Yale Facility requires all samples to be filtered at the machine just before running, with specific protocols using Falcon Mesh Top tubes [36].

Viability Assessment: Dead and dying cells pose significant problems through non-specific antibody binding, creating background noise [32]. Viability dyes like propidium iodide or 7-AAD allow differentiation between live and dead cells for subsequent exclusion during analysis. Facilities often mandate fixation of potentially infectious materials before analysis on shared instruments [36].

Controlling for Background and Non-Specific Binding

Background noise from non-specific antibody binding or cellular autofluorescence can obscure true positive signals:

Blocking Reagents: Cells with Fc receptors can bind antibodies non-specifically. Blocking these receptors with FcR blocking solution before adding antibodies is crucial for accurate data [32].

Appropriate Controls: Isotype controls (antibodies with the same host species, isotype, and fluorophore but specific to irrelevant antigens) help distinguish true positive staining from background [32]. Unstained samples measure autofluorescence, while fluorescence minus one (FMO) controls, which are stained with every antibody in the panel except one, are critical for accurate gating in multicolor panels [35].
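One common way to use an FMO control is to place the positive gate at a high percentile of the FMO distribution in the channel of the omitted antibody. The sketch below assumes a 99th-percentile threshold computed with the nearest-rank method. The percentile, the function names, and the intensity values are illustrative assumptions, not a prescription from the cited sources.

```python
import math


def fmo_threshold(fmo_values, percentile=99.0):
    """Nearest-rank percentile of FMO intensities, used as the gate boundary."""
    ordered = sorted(fmo_values)
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    return ordered[rank - 1]


def percent_positive(sample_values, threshold):
    """Percentage of events in the stained sample above the FMO-derived gate."""
    positives = sum(1 for value in sample_values if value > threshold)
    return 100.0 * positives / len(sample_values)


# Hypothetical per-event intensities in the channel of the omitted antibody.
fmo_events = list(range(1, 101))            # FMO control: background spread only
stained_events = [50, 99, 100, 150, 400]    # fully stained sample

gate = fmo_threshold(fmo_events)
print(f"Gate at {gate}; {percent_positive(stained_events, gate):.0f}% positive")
```

Because the threshold comes from the spread of all other fluorophores into the channel, it accounts for spillover that an unstained control alone would miss.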

Data Analysis and Harmonization Approaches

Automated Analysis for Improved Reproducibility

Manual data analysis introduces significant variability in flow cytometry. The PRECISESADS study addressed this by developing supervised machine learning-based automated gating pipelines that replicated manual analysis [30]. Their approach used a two-step workflow: a first step customized for each instrument to address differences in forward and side scatter signals, and a second instrument-independent step for gating remaining populations of interest [30].

Validation comparing automated results with traditional manual analysis on 300 patients across 11 centers showed very good correlation for frequencies, absolute values, and MFIs [30]. This demonstrates that automated analysis provides consistency and reproducibility advantages, especially in large-scale, multi-center studies.
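Agreement between automated and manual gating is typically summarized with a correlation coefficient computed over paired results. The sketch below computes Pearson's r in plain Python. The function name and the paired frequencies are hypothetical, and the source does not specify which correlation statistic the study reported.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical CD3+ T-cell frequencies (%) for the same samples,
# gated manually and by an automated pipeline.
manual = [62.1, 55.4, 70.3, 48.9, 66.0]
automated = [61.5, 56.0, 69.8, 50.1, 65.2]

print(f"Pearson r (manual vs automated): {pearson_r(manual, automated):.3f}")
```

A high correlation shows that the two methods rank samples similarly, but it does not rule out a systematic offset, so the mean difference between methods is worth reporting alongside it.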

Cross-Laboratory Harmonization Protocols

Recent initiatives have focused on comprehensive harmonization strategies. The Curiox Biosystems Commercial Tutorial at CYTO 2025 highlighted advances in antibody preparation and automation for reliable immune monitoring, emphasizing CLSI H62 and NIST standards for assay validation and harmonization across laboratories [37].

Similarly, the European Immunogenicity Platform's working group on non-clinical immunogenicity risk assessment has provided comprehensive recommendations for establishing robust workflows to ensure data quality and meaningful interpretation [38]. While acknowledging the improbability of complete protocol harmonization, they propose measures and controls that support developing high-quality assays with improved reproducibility and reliability [38].

Essential Research Reagent Solutions

Table 3: Key Reagent Solutions for Standardized Flow Cytometry

| Reagent Category | Specific Examples | Function in Standardization | Implementation Considerations |
|---|---|---|---|
| Reference Standard Beads | VersaComp Capture Beads, 8-peak beads | Instrument calibration, PMT standardization, daily QC | Establish baseline MFI targets; monitor drift over time |
| Viability Dyes | Propidium iodide, 7-AAD, Ghost Dye v450 | Distinguish live/dead cells; exclude compromised cells from analysis | Titrate for optimal concentration; include in all experiments |
| Fc Receptor Blocking Reagents | Human FcR Blocking Solution | Reduce non-specific antibody binding | Pre-incubate before antibody staining; particularly important for hematopoietic cells |
| Stain Buffer | Brilliant Stain Buffer | Limit non-specific interactions between polymer-based (Brilliant) dyes in multicolor panels | Use when a panel combines two or more Brilliant polymer dyes, including their tandems |
| Alignment Beads | Commercial alignment beads (manufacturer-specific) | Laser alignment and performance verification | Regular use according to manufacturer schedule |
| Standardized Antibody Panels | DuraClone dried antibody panels | Lot-to-lot consistency, reduced pipetting errors | Provide stability over time, ease of storage |

Standardization of flow cytometry across antibody panels, instrument setup, and SOPs requires a systematic, multifaceted approach. Technological advancements in high-resolution and spectral flow cytometry have expanded detection capabilities but introduced new standardization challenges. Successful multicenter studies implement comprehensive strategies including initial instrument harmonization, daily quality control, standardized sample processing, automated analysis pipelines, and careful reagent validation. As flow cytometry continues to evolve toward higher-parameter applications in both research and clinical trials, the adoption of these standardization practices will be essential for generating reliable, comparable data across laboratories and over time. The scientific community's increasing emphasis on reproducibility, evidenced by new guidelines and standardization initiatives, promises to enhance the robustness of flow cytometric data in immunological research and drug development.

Experimental Workflow Diagrams

Standardization Workflow - This diagram illustrates the comprehensive flow cytometry standardization process from panel design through data analysis, highlighting critical control points.

Multicenter Approach - This diagram shows the key components for achieving reproducible flow cytometry results across multiple research centers.

Multiplexed Immunofluorescence (mIF) and Multi-institutional Verification

Multiplexed immunofluorescence (mIF) has emerged as a transformative technology in spatial biology, enabling the simultaneous visualization and quantification of multiple protein targets within a single formalin-fixed paraffin-embedded (FFPE) tissue section [39] [40]. By preserving critical spatial context within the tumor microenvironment (TME), mIF provides insights into cellular phenotypes, functional states, and cell-to-cell interactions that are lost in dissociated cell analyses [41]. This spatial information has proven particularly valuable in immuno-oncology, where the spatial organization of immune cells within tumors often correlates more strongly with patient response to immunotherapy than other biomarker modalities [42] [43].

Despite its powerful capabilities, the transition of mIF from a research tool to a clinically validated methodology faces significant challenges in reproducibility and standardization across institutions. The Society for Immunotherapy of Cancer (SITC) has highlighted that mIF technologies are "maturing and are routinely included in research studies and moving towards clinical use," but require standardized guidelines for image analysis and data management to ensure comparable results across laboratories [42]. This review examines current verification frameworks, compares analytical pipelines, and provides best practices for achieving robust multi-institutional mIF data.

Comparative Analysis of mIF Analysis Platforms and Their Performance

Multiple platforms and computational pipelines have been developed to address the analytical challenges of mIF data. The table below compares four prominent solutions used in multi-institutional settings.

Table 1: Comparison of mIF Analysis Platforms and Verification Performance

| Platform/Pipeline | Technology Basis | Key Verification Metrics | Multi-institutional Validation | Reference Performance Data |
|---|---|---|---|---|
| SPARTA Framework | Platform-agnostic with AI-enabled segmentation | Standardized processing across imaging systems (Lunaphore Comet, Akoya PhenoImager, Zeiss Axioscan) | Cross-platform consistency in data processing and analysis | Consistent cell segmentation and classification across platforms [41] |
| MARQO Pipeline | Open-source, user-guided automated analysis | Composite segmentation accuracy (>60% centroid detection across stains), validated against pathologist curation | Tested across multiple sites and tissue types; compatible with CIMAC-CIDC networks | 91.3% concordance with manual pathologist segmentation in HCC validation [44] |
| SITC Best Practices | Guidelines for mIHC/IF analysis | Image acquisition standards, cell segmentation verification, batch effect correction | Multi-institutional harmonization efforts across academic centers and pharmaceutical companies | Established framework for cross-site comparability; AUC >0.8 for predictive biomarkers [42] |
| ROSIE (AI-based) | Deep learning (ConvNext CNN) | Pearson R=0.285, Spearman R=0.352 for protein expression prediction from H&E | Trained on 1,300+ samples across multiple institutions and disease types | Sample-level C-index=0.706 for biomarker prediction [45] |