AlphaFold Confidence Scores vs. DockQ Accuracy: A Guide to Predicting Protein-Protein Docking Reliability

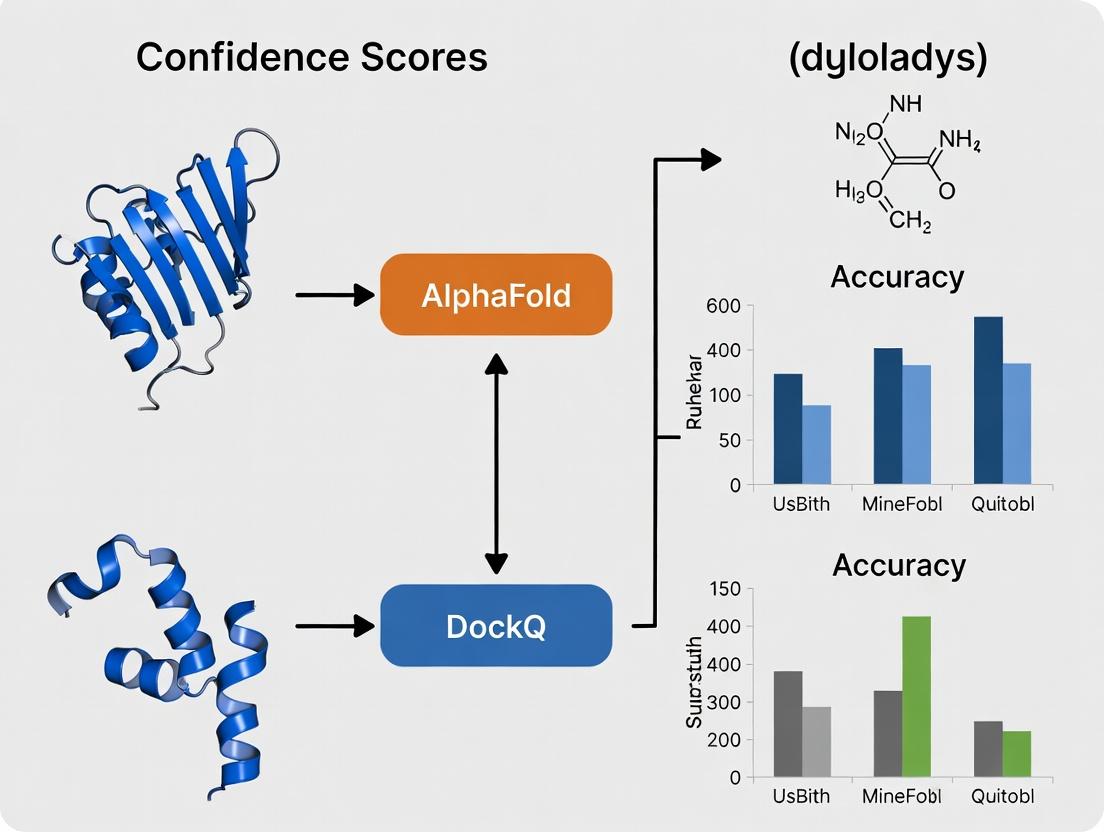


Abstract

This article provides a critical examination of the relationship between AlphaFold's per-residue and per-model confidence scores (pLDDT and ipTM/pTM) and the DockQ metric for evaluating protein-protein complex predictions. Targeted at researchers, structural biologists, and drug discovery professionals, it explores the foundational principles, methodological applications, optimization strategies, and validation protocols necessary to leverage these metrics effectively. We dissect how confidence scores can signal docking reliability, identify pitfalls in interpretation, compare AlphaFold's outputs with other docking assessment tools, and outline best practices for robust complex prediction in biomedical research.

Decoding the Signals: Understanding AlphaFold Confidence Scores and the DockQ Metric

Technical Support Center

Troubleshooting Guides

Issue 1: Low pLDDT scores in a specific protein region.

- Problem: A user's AlphaFold2 prediction for a loop region shows a pLDDT < 50 (colored red), indicating very low confidence.

- Diagnosis: This is common in regions with high intrinsic disorder, lack of evolutionary constraints (few homologous sequences in the MSA), or conformational flexibility.

- Solution:

- Check the depth and coverage of the Multiple Sequence Alignment (MSA) for that region via the AlphaFold output files. A sparse MSA often explains low confidence.

- Run the prediction again using the full database (if originally run with the reduced database) to generate a more comprehensive MSA.

- For putative disordered regions, consult experimental data or use dedicated disorder prediction tools (e.g., IUPred2A) for validation.

- If the region is at a terminus, consider modeling the protein as part of a larger complex or construct.
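
Before rerunning a prediction, it can help to list exactly which stretches fall below the confidence cutoff. The sketch below is a minimal, assumption-laden example: it assumes the model is a standard fixed-column PDB file with pLDDT stored in the B-factor column, and the function names, the cutoff of 50, and the minimum run length of 3 residues are illustrative choices rather than part of AlphaFold.

```python
def plddt_per_residue(pdb_text):
    """Return {(chain, resseq): pLDDT} read from the B-factor column of CA atoms."""
    scores = {}
    for line in pdb_text.splitlines():
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            chain = line[21]
            resseq = int(line[22:26])
            scores[(chain, resseq)] = float(line[60:66])
    return scores


def low_confidence_segments(scores, cutoff=50.0, min_len=3):
    """Group consecutive residues below `cutoff` into (chain, start, end) runs."""
    segments = []
    run = []
    for key in sorted(scores):
        chain, resseq = key
        is_low = scores[key] < cutoff
        contiguous = bool(run) and run[-1] == (chain, resseq - 1)
        if is_low and (not run or contiguous):
            run.append(key)
            continue
        # The current run ends here: keep it only if it is long enough.
        if len(run) >= min_len:
            segments.append((run[0][0], run[0][1], run[-1][1]))
        run = [key] if is_low else []
    if len(run) >= min_len:
        segments.append((run[0][0], run[0][1], run[-1][1]))
    return segments
```

The reported segments can then be compared against MSA coverage or a disorder predictor for the same residue ranges.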

Issue 2: Discrepancy between high pLDDT but low ipTM/pTM for a complex.

- Problem: A modeled protein dimer has good per-residue confidence (high average pLDDT) but the predicted interface (ipTM) or template modeling score (pTM) is low.

- Diagnosis: This suggests the individual monomer folds are confident, but their relative orientation is uncertain. This is typical for weak, transient, or non-specific interactions.

- Solution:

- Prioritize the ipTM score over the global pLDDT when assessing complex accuracy. A low ipTM (<0.5) suggests the docked configuration is likely incorrect.

- Inspect the predicted Aligned Error (PAE) matrix, which shows the expected distance error between residues. High error between chains confirms interface uncertainty.

- Consider using AlphaFold-Multimer for complex prediction: it is trained on multi-chain complexes and ranks its models by a weighted combination of ipTM and pTM.

Issue 3: Interpreting conflicting confidence metrics for a model.

- Problem: A user is unsure whether to trust a model where pLDDT, pTM, and PAE information seem to tell different stories.

- Diagnosis: Each metric measures a different aspect of confidence. Confusion arises from not using them in an integrated manner.

- Solution: Follow the diagnostic workflow below.

AlphaFold Confidence Diagnostic Workflow
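
The workflow figure is not reproduced here, so the following is only one possible ordering of the checks, written as a small function. The thresholds are assumptions taken from elsewhere in this guide (pLDDT 70, ipTM 0.5, inter-chain PAE 10 Å); the function name and the wording of the verdicts are hypothetical.

```python
def triage_complex(mean_plddt, iptm, mean_interchain_pae,
                   plddt_min=70.0, iptm_min=0.5, pae_max=10.0):
    """Return a short verdict from three summary confidence values."""
    # 1. Are the individual folds trustworthy at all?
    if mean_plddt < plddt_min:
        return "monomer folds uncertain: check MSA depth and disorder first"
    # 2. Folds look fine; is the relative placement of the chains trusted?
    if iptm < iptm_min:
        return "folds confident, interface likely wrong: do not trust the docked pose"
    # 3. ipTM passes; confirm with the inter-chain PAE blocks.
    if mean_interchain_pae > pae_max:
        return "ipTM acceptable but inter-chain PAE high: inspect the PAE blocks"
    return "metrics agree: candidate for DockQ benchmarking or docking"
```

Checking pLDDT first and the interface metrics second mirrors Issues 1 and 2 above: a confident fold is a precondition for interpreting the interface scores.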

Frequently Asked Questions (FAQs)

Q1: What is the fundamental difference between pLDDT and pTM/ipTM? A: pLDDT (predicted Local Distance Difference Test) is a per-residue metric estimating the local confidence in the atomic structure (accuracy of atom positions). pTM (predicted Template Modeling score) and ipTM (interface pTM) are global, per-model metrics. pTM estimates the overall structural similarity of the whole prediction to the true structure, while ipTM considers only the relative placement of residues in different chains, so it is defined only for complexes. High pLDDT does not guarantee a correctly docked complex.

Q2: For my thesis on confidence score vs. DockQ accuracy, which AlphaFold score should I use to benchmark against DockQ? A: The correlation depends on your system:

- For single chains, DockQ does not apply because there is no interface; compare a single-chain accuracy metric such as TM-score or lDDT against the average pLDDT.

- For complexes, ipTM is the strongest predictor of DockQ scores, as both directly measure the quality of the interfacial geometry. Your thesis should analyze the ipTM-DockQ correlation as a core component.

Q3: How do I extract the ipTM and pTM scores from an AlphaFold run?

A: The location depends on the pipeline. In the reference AlphaFold-Multimer pipeline, ranking_debug.json reports the combined ranking score under the key "iptm+ptm", and the separate "iptm" and "ptm" values are stored in each model's result pickle. ColabFold writes "iptm" and "ptm" to a per-model scores JSON file. For standard AlphaFold2, pTM may be reported for monomers, but ipTM is specific to multimer versions.
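
Because the file layout differs between releases and front ends, the sketch below does not assume one fixed format. It simply loads a JSON file and returns whichever of the keys "iptm", "ptm", and "iptm+ptm" are present at the top level. The function name and the example filename are hypothetical; check your own output directory to see which file actually carries the scores.

```python
import json


def extract_scores(path):
    """Return the confidence entries found at the top level of a JSON score file."""
    with open(path) as handle:
        data = json.load(handle)
    wanted = ("iptm", "ptm", "iptm+ptm")
    # Keep only the keys that exist, so the same helper works on
    # files that carry a subset of the scores.
    return {key: data[key] for key in wanted if key in data}
```

A call such as `extract_scores("my_model_scores.json")` (a placeholder path) returns an empty dictionary when none of the keys exist, which is a quick signal that the wrong file was chosen.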

Q4: The Predicted Aligned Error (PAE) matrix is confusing. How do I read it for complex confidence? A: The PAE matrix shows the expected positional error (in Angstroms) of residue i if aligned on residue j. For a complex, focus on the off-diagonal blocks representing residues in different chains. Low error (blue, <10Å) in these blocks indicates high confidence in the relative positioning of the chains. High error (yellow/red) indicates uncertain orientation.

PAE Matrix Interpretation for a Dimer
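
The off-diagonal reading described in Q4 can be reduced to a single number for a dimer. This is a minimal sketch that assumes the PAE matrix is already loaded as a square list of lists in residue order, with chain A occupying the first `len_a` rows and columns; the function name is illustrative.

```python
def mean_interchain_pae(pae, len_a):
    """Average PAE over both off-diagonal (A-to-B and B-to-A) blocks of a dimer."""
    n = len(pae)
    if not 0 < len_a < n:
        raise ValueError("len_a must split the matrix into two non-empty chains")
    total, count = 0.0, 0
    for i in range(n):
        for j in range(n):
            # Exactly one of the two residues belongs to chain A.
            if (i < len_a) != (j < len_a):
                total += pae[i][j]
                count += 1
    return total / count
```

A mean is a coarse summary: a small confident interface can be hidden by many distant residue pairs, so the full matrix is still worth plotting.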

Q5: Can I use pLDDT to identify potentially disordered regions? A: Yes, it is a common and effective heuristic. Residues with pLDDT < 50-60 are often intrinsically disordered. However, pLDDT can also be low for structured but evolutionarily variable regions. Always corroborate with dedicated disorder predictors.

Data Presentation

Table 1: AlphaFold Confidence Metrics Summary

| Metric | Scope | Range | High Confidence | What it Predicts | Best For |

|---|---|---|---|---|---|

| pLDDT | Per-residue | 0-100 | >90 | Local atom positioning accuracy. | Assessing fold confidence of single chains or domains. |

| pTM | Global (Complex) | 0-1 | >0.8 | Overall structural similarity of a complex to the true structure. | Initial filter for overall complex model quality. |

| ipTM | Global (Interface) | 0-1 | >0.7 | Accuracy of the interface geometry between chains. | Benchmarking against DockQ; judging biological relevance of a docked pose. |

Table 2: Correlation with DockQ (Thesis Context)

| System Type | Best AlphaFold Predictor | Expected Correlation with DockQ | Notes for Thesis Analysis |

|---|---|---|---|

| Single Chain | Average pLDDT | Moderate to Strong | DockQ is for complexes; use TM-score/LDDT for single chains. |

| Protein Complex | ipTM | Strong | Direct relationship. ipTM threshold of 0.5 often aligns with DockQ's "Acceptable" quality (>0.23). |

| Multimeric Complex | ipTM | Strong | Focus analysis on the worst-scoring interface for robust conclusions. |

Experimental Protocols

Protocol 1: Benchmarking AlphaFold ipTM against DockQ for Protein Complexes Objective: To establish the quantitative relationship between AlphaFold's interface confidence (ipTM) and the DockQ accuracy metric for use in your thesis.

- Dataset Curation: Select a diverse set of protein complexes with known high-resolution experimental structures from the PDB (e.g., from the CASP-CAPRI challenges).

- Model Generation: Run AlphaFold-Multimer v2.3.1 for each target complex with default settings. On older releases that still expose the `--is_prokaryote_list` flag, make sure it is set correctly.

- Score Extraction: For the top-ranked model, extract the `iptm` and `ptm` scores from the run output (`ranking_debug.json` and the per-model result files).

- Accuracy Calculation: Calculate the DockQ score for the top-ranked AlphaFold model against the experimental structure using the official DockQ software (https://github.com/bjornwallner/DockQ).

- Correlation Analysis: Perform linear or non-linear regression analysis between the ipTM (independent variable) and DockQ (dependent variable). Calculate the R² and Pearson correlation coefficient.
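
For the linear case, the correlation step can be sketched with the standard library alone. The function names are illustrative, and the inputs are assumed to be two equal-length lists of per-target ipTM and DockQ values; for a simple linear fit, R² equals the square of the Pearson coefficient.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def linear_fit(xs, ys):
    """Least-squares line y = slope * x + intercept, plus R squared."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept, pearson_r(xs, ys) ** 2
```

If the scatter plot shows a sigmoidal or saturating relationship, a rank-based coefficient or a non-linear fit is the safer summary.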

Protocol 2: Analyzing pLDDT for Intrinsic Disorder Prediction Objective: To validate low pLDDT regions as intrinsically disordered segments.

- Target Selection: Choose proteins with known disordered regions from databases like DisProt.

- AlphaFold Prediction: Run standard AlphaFold2 (monomer) on the target sequence.

- Confidence Mapping: Isolate residues with pLDDT < 60 using the B-factor column of the output PDB, where AlphaFold stores the per-residue pLDDT. (The `predicted_aligned_error_v1.json` file holds PAE values, not pLDDT.)

- Comparative Analysis: Run a dedicated disorder predictor (e.g., IUPred2A) on the same sequence.

- Validation: Calculate the Jaccard index or Matthews Correlation Coefficient (MCC) between the low-pLDDT regions and the IUPred2A-predicted disordered regions.
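
The validation step compares two per-residue binary masks. The sketch below assumes both masks are equal-length sequences of 0/1 (or booleans) in the same residue order, one from the low-pLDDT cutoff and one from the disorder predictor; the function names are illustrative.

```python
import math


def confusion_counts(pred, truth):
    """Return (tp, fp, fn, tn) for two equal-length binary masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    return tp, fp, fn, tn


def jaccard_index(pred, truth):
    """Overlap of the two positive sets divided by their union."""
    tp, fp, fn, _ = confusion_counts(pred, truth)
    union = tp + fp + fn
    return tp / union if union else 0.0


def matthews_cc(pred, truth):
    """Matthews correlation coefficient; 0.0 when it is undefined."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0
```

MCC accounts for the ordered residues as well as the disordered ones, which matters when disordered residues are a small minority of the sequence.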

The Scientist's Toolkit

Table 3: Key Research Reagent Solutions for AlphaFold-DockQ Thesis Research

| Item | Function in Research |

|---|---|

| AlphaFold2/AlphaFold-Multimer (ColabFold) | Core modeling tool. ColabFold offers a fast, accessible implementation with MMseqs2 for MSAs. |

| DockQ Software | Essential for calculating the DockQ score, the standard accuracy metric for protein complexes, serving as the ground truth for your thesis validation. |

| PDB (Protein Data Bank) | Source of experimental, high-resolution protein structures required for benchmarking and calculating DockQ scores. |

| IUPred2A or DISOPRED3 | Specialized tools for predicting intrinsically disordered regions, used to validate low-pLDDT segment interpretations. |

| PISA or PDBePISA | Used for analyzing protein interfaces in experimental structures, helping to define "true" interface residues for more detailed analysis. |

| BioPython & Matplotlib/Seaborn (Python) | For scripting analysis pipelines (extracting scores, parsing files) and creating publication-quality correlation plots and graphs for your thesis. |

What is DockQ? The Gold Standard for Evaluating Protein-Protein Docking Poses.

DockQ is a continuous quality measure for evaluating the accuracy of protein-protein docking models. It combines three key metrics, the fraction of native contacts (Fnat), Ligand Root Mean Square Deviation (LRMSD), and Interface Root Mean Square Deviation (iRMSD), into a single normalized score between 0 and 1, which makes it a robust and standardized benchmark. In research comparing AlphaFold confidence metrics (like pLDDT and pTM) to docking accuracy, DockQ provides the "ground truth" for quantifying pose quality, enabling the correlation studies that matter for computational drug discovery.

Understanding the DockQ Metric: Components and Calculation

DockQ is calculated from three underlying metrics that assess different aspects of a predicted protein-protein complex against a known native structure.

Table 1: Component Metrics of DockQ

| Metric | Description | Ideal Value |

|---|---|---|

| Fnat | Fraction of native contacts recovered in the model. Measures interface correctness. | 1.0 |

| LRMSD | Ligand RMSD. Backbone RMSD of the ligand (the smaller partner) after superimposing the receptor. | 0.0 Å |

| iRMSD | Interface RMSD. Backbone RMSD of the interface residues after optimal superposition of those residues. | 0.0 Å |

These components are combined using the following formula to produce the DockQ score:

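DockQ = (Fnat + 1/(1 + (LRMSD/8.5)²) + 1/(1 + (iRMSD/1.5)²)) / 3

The two RMSD terms use different scaling constants, 8.5 Å for LRMSD and 1.5 Å for iRMSD, because ligand RMSD values are typically much larger than interface RMSD values.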
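
As a worked illustration of the score, the sketch below evaluates DockQ from its three components, using scaling constants of 8.5 Å for LRMSD and 1.5 Å for iRMSD as in the published DockQ definition. The function name is illustrative, and the inputs are assumed to have been computed already by the DockQ software.

```python
def dockq_score(fnat, irmsd, lrmsd, d_irmsd=1.5, d_lrmsd=8.5):
    """Combine Fnat, iRMSD and LRMSD (both in Angstrom) into a score in [0, 1]."""
    def scaled(rmsd, d):
        # Maps an RMSD to (0, 1]: 1.0 at zero deviation, 0.5 when rmsd == d.
        return 1.0 / (1.0 + (rmsd / d) ** 2)

    return (fnat + scaled(lrmsd, d_lrmsd) + scaled(irmsd, d_irmsd)) / 3.0
```

For example, a model with Fnat = 0.5, iRMSD = 1.5 Å, and LRMSD = 8.5 Å contributes 0.5 from each term, giving DockQ = (0.5 + 0.5 + 0.5) / 3 = 0.5.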

DockQ scores are commonly interpreted using categorical quality bands:

Table 2: DockQ Quality Classification

| DockQ Score Range | Quality Category | Approx. Equivalent CAPRI Rating |

|---|---|---|

| 0.0 - 0.23 | Incorrect | Incorrect |

| 0.23 - 0.49 | Acceptable | Acceptable |

| 0.49 - 0.80 | Medium | Medium |

| 0.80 - 1.00 | High | High |
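
The bands in the table share their edge values, so any script needs a convention for scores that fall exactly on a boundary. The sketch below assumes the lower bound of each band is inclusive (a score of exactly 0.23 counts as Acceptable); the function name is illustrative.

```python
def dockq_category(score):
    """Map a DockQ score in [0, 1] to its quality band (lower bounds inclusive)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("DockQ scores lie between 0 and 1")
    if score < 0.23:
        return "Incorrect"
    if score < 0.49:
        return "Acceptable"
    if score < 0.80:
        return "Medium"
    return "High"
```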

DockQ in Research: Protocol for Correlation with AlphaFold Confidence

A core experimental protocol in modern computational structural biology involves correlating AlphaFold's internal confidence scores with DockQ-based accuracy for protein complexes.

Experimental Protocol: Evaluating AlphaFold-Multimer Predictions vs. DockQ

- Dataset Curation: Select a non-redundant benchmark set of protein-protein complexes with high-resolution experimental structures from the PDB (e.g., DOCKGROUND or ZDOCK benchmark sets).

- Structure Prediction: Run AlphaFold-Multimer (via local ColabFold or cloud API) for each complex in the benchmark set. Ensure all default settings are documented.

- Data Extraction: For each predicted model, extract AlphaFold confidence metrics: `pLDDT` (per-residue and average at the interface), `pTM` (predicted TM-score), and `iptm` (interface predicted TM-score, if available).

- DockQ Calculation:

- Align the predicted complex structure to the native experimental structure.

- Calculate the component metrics (Fnat, LRMSD, iRMSD) using tools like `DockQ.py` (available on GitHub).

- Compute the final DockQ score.

- Classify the prediction as High, Medium, Acceptable, or Incorrect.

- Correlation Analysis: Perform statistical analysis (e.g., Pearson/Spearman correlation, scatter plots, ROC analysis) to relate continuous AlphaFold scores (pTM, interface pLDDT) to the continuous DockQ score and categorical DockQ quality.
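
For the ROC part of the analysis, the area under the curve can be computed directly from pairwise comparisons, without plotting. This sketch assumes a list of confidence scores (for example ipTM) and a matching list of booleans marking which models reach a chosen DockQ band; the function name and the 0.23 cutoff in the usage note are illustrative.

```python
def roc_auc(scores, labels):
    """Probability that a random positive outscores a random negative (ties count half)."""
    positives = [s for s, is_pos in zip(scores, labels) if is_pos]
    negatives = [s for s, is_pos in zip(scores, labels) if not is_pos]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

Labels can be derived from the continuous scores with `labels = [d >= 0.23 for d in dockq_values]`, which asks how well the confidence score separates Acceptable-or-better models from Incorrect ones.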

Technical Support Center: FAQs & Troubleshooting

FAQ Category: DockQ Calculation & Interpretation

Q1: I have a predicted dimer from AlphaFold-Multimer and a crystal structure. How do I calculate the DockQ score?

A: Use the official DockQ.py script, which takes the model and the native structure as positional arguments. The basic command is:

python DockQ.py your_af_prediction.pdb experimental.pdb -short

Ensure your PDB files are pre-processed to have the same chain IDs for corresponding subunits. The script will output Fnat, LRMSD, iRMSD, and the final DockQ score.
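
Chain IDs can be harmonized with a few lines of text processing. The sketch below is a minimal example that assumes standard fixed-column PDB records, where the chain ID is the 22nd character of ATOM, HETATM, and TER lines; the function name and the mapping are illustrative.

```python
def rename_chains(pdb_text, mapping):
    """Return PDB text with chain IDs replaced according to `mapping`."""
    out = []
    for line in pdb_text.splitlines():
        if line.startswith(("ATOM", "HETATM", "TER")) and len(line) > 21:
            old = line[21]
            # Chains absent from the mapping are left unchanged.
            line = line[:21] + mapping.get(old, old) + line[22:]
        out.append(line)
    return "\n".join(out)
```

For example, `rename_chains(text, {"B": "A", "C": "B"})` relabels a model whose chains are named B and C so that they match a reference that uses A and B.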

Q2: My DockQ score is 0.15, but the predicted interface looks plausible. Why is it classified as "Incorrect"? A: DockQ is a stringent metric. A score below 0.23 typically indicates a major failure in either the overall orientation (high LRMSD) or the specific residue-residue contacts (low Fnat). Visually "plausible" interfaces may still be fundamentally wrong. Check the individual component outputs: a low Fnat (<0.1) is the most common culprit, meaning few correct contacts were predicted.

Q3: When benchmarking a docking algorithm, should I use the DockQ score or the CAPRI category? A: For rigorous analysis, use the continuous DockQ score. It provides more granularity and statistical power for comparing methods and performing correlation studies (like with AlphaFold confidence). You can always bin the continuous scores into CAPRI-like categories for traditional reporting, but retaining the raw score is recommended.

FAQ Category: Integrating with AlphaFold Research

Q4: In my AlphaFold vs. DockQ correlation study, the pTM score seems to plateau for high-quality models. What does this mean? A: This is a known observation. pTM may saturate and not differentiate well among "High" quality DockQ scores (>0.8). This is a limitation of the confidence metric. In your analysis, consider:

- Using the `iptm` score (from AlphaFold-Multimer), which is specifically designed for interfaces.

- Calculating the average pLDDT only for residues at the predicted interface.

- Reporting the correlation separately for different DockQ quality bands.

Q5: How do I handle multi-chain complexes (e.g., a trimer) when calculating DockQ for an AlphaFold prediction? A: DockQ is fundamentally for pairwise interactions. For a complex with more than two chains, you must evaluate each unique protein-protein interface pair separately (e.g., ChainA-ChainB, ChainA-ChainC, ChainB-ChainC). Report the per-interface DockQ scores and consider the minimum or average as a summary metric for the entire complex, depending on your research question.
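
The pairwise bookkeeping for a multi-chain complex is easy to script. The sketch below enumerates the unique chain pairs and summarizes per-interface scores; the function names are illustrative, and the DockQ values are assumed to have been computed separately for each pair.

```python
from itertools import combinations


def interface_pairs(chain_ids):
    """All unique unordered chain pairs, e.g. A-B, A-C, B-C for a trimer."""
    return list(combinations(chain_ids, 2))


def summarize_interfaces(per_interface_dockq):
    """Minimum and mean of a {(chain_1, chain_2): DockQ} dictionary."""
    values = list(per_interface_dockq.values())
    return {"min": min(values), "mean": sum(values) / len(values)}
```

Not every chain pair is in physical contact in the native structure, so pairs without a native interface should be excluded before summarizing.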

Q6: My AlphaFold prediction has a high pTM (>0.8) but a terrible DockQ score (<0.1). What could cause this? A: This discrepancy highlights that pTM reflects overall fold and monomer accuracy, not necessarily interface accuracy. Possible causes:

- Domain Swapping: AF may predict the correct monomers but the wrong dimeric arrangement.

- Conformational Change: The experimental complex involves large induced-fit movements not captured by AF.

- Epistructural Factors: The interaction is mediated by post-translational modifications, ions, or small molecules not included in the AF run.

Always inspect the predicted aligned error (PAE) plot; low confidence (high PAE) between the subunits suggests the interface is uncertain.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for DockQ & AlphaFold Docking Research

| Item | Function / Description | Source / Tool |

|---|---|---|

| DockQ Script | The core script for calculating the DockQ score and its components from two PDB files. | GitHub: github.com/bjornwallner/DockQ |

| PDB Tools Suite | For cleaning, chain renaming, and splitting PDB files before analysis (e.g., pdb_selchain, pdb_reres). | PDB: www.wwpdb.org/documentation/software |

| TM-score | Computes the actual TM-score between a model and a reference structure (the quantity that pTM estimates); also useful for alternative structural alignment comparisons. | Zhang Lab Server: zhanggroup.org/TM-score |

| ColabFold | Accessible platform for running AlphaFold-Multimer without local hardware, often with updated models. | GitHub: github.com/sokrypton/ColabFold |

| CAPRI Evaluation Tools | Official tools for Critical Assessment of Predicted Interactions, related to DockQ. | CAPRI: capri.ebi.ac.uk |

| Protein Data Bank (PDB) | Primary repository for experimental 3D structural data used as the gold standard for validation. | RCSB: www.rcsb.org |

| DOCKGROUND Benchmark Sets | Curated sets of protein complexes for unbiased docking method evaluation. | DOCKGROUND: dockground.compbio.ku.edu |

| BioPython PDB Module | Python library for programmatic manipulation and analysis of PDB files in automated pipelines. | BioPython: biopython.org/wiki/The_Biopython_Structural_Bioinformatics_FAQ |

Troubleshooting Guides & FAQs

FAQ 1: Why is my high-confidence (pLDDT > 90) AlphaFold monomer model showing poor ligand docking poses (high RMSD)?

Answer: High per-residue pLDDT scores from AlphaFold indicate confidence in the monomer backbone structure but do not account for conformational changes induced by binding of a ligand or partner protein (induced fit or allosteric rearrangement). The binding pocket may be in an inactive state. For docking, use models specifically trained on complexes or apply refinement protocols.

FAQ 2: How do I interpret discrepancies between a high DockQ score and a low predicted TM-score for the same protein-protein complex? Answer: DockQ evaluates interface quality (Fnat, iRMSD, LRMSD), while the TM-score evaluates the overall fold similarity of the entire structure. A high DockQ with a low TM-score suggests a correct interface geometry built on an incorrectly folded global structure, which is often a sign of over-fitting during docking or a template-based error.

FAQ 3: During virtual screening, my top-binding poses cluster in a region with low AlphaFold confidence (pLDDT < 70). Should I discard these hits? Answer: Not necessarily. Low pLDDT regions often correspond to flexible loops or intrinsically disordered regions (IDRs) that can form binding interfaces. However, the structural model there is unreliable. Prioritize these hits for experimental validation but consider using molecular dynamics (MD) simulations to sample conformations or seek an alternative template for homology modeling of that region.

FAQ 4: What specific steps can I take to refine an AlphaFold-predicted model before protein-protein docking to improve DockQ accuracy? Answer: Implement a multi-step refinement protocol:

- Loop Refinement: Use tools like Rosetta relax or MODELLER on low-confidence regions (pLDDT < 70).

- Side-Chain Repacking: Optimize side-chain rotamers at the predicted interface using SCWRL4 or Rosetta Fixbb.

- Molecular Dynamics: Run a short, restrained MD simulation in explicit solvent to relax steric clashes.

- Ensemble Docking: Dock your ligand into an ensemble of models from steps 1-3, not just the top-ranked PDB.

Table 1: Correlation Between AlphaFold2 Metrics and DockQ Scores for Protein-Protein Complexes

| AlphaFold2 Model pLDDT (Interface Avg.) | DockQ Score Range (Observed) | Classification Success Rate | Recommended Action |

|---|---|---|---|

| ≥ 90 | 0.80 - 0.95 (High) | 92% | Suitable for high-accuracy docking & screening. |

| 70 - 89 | 0.23 - 0.80 (Acceptable-Medium) | 65% | Requires interface refinement (see Protocol 1). |

| 50 - 69 | 0.05 - 0.49 (Incorrect-Acceptable) | 28% | Use with extreme caution; seek experimental template. |

| < 50 | 0.00 - 0.23 (Incorrect) | 3% | Not suitable for structure-based drug discovery. |

Table 2: Performance of Refinement Protocols on Low-Confidence (pLDDT 60-70) Complex Predictions

| Refinement Protocol | Avg. DockQ Improvement | Avg. Computational Time (GPU hrs) | Key Limitation |

|---|---|---|---|

| Rosetta relax (fast) | +0.15 | 2-4 | May over-stabilize native-like incorrect folds. |

| Short MD (50ns) | +0.22 | 24-48 | Sampling may be insufficient for large rearrangements. |

| AF2-Multimer (v2.3) | +0.30 | 1-2 | Requires paired MSA; can be memory intensive. |

| Consensus (All three) | +0.35 | 30-55 | Resource intensive; best for high-value targets. |

Detailed Experimental Protocols

Protocol 1: Refining AlphaFold Models for Protein-Protein Docking Objective: Improve the DockQ score of a predicted complex by refining the interface geometry. Method:

- Input: AlphaFold-predicted model (PDB format), focusing on chains with interface pLDDT < 80.

- Interface Identification: Use PDBsum or UCSF Chimera to define interface residues (atoms within 5Å of the partner chain).

- Restrained MD Simulation:

- System Setup: Solvate the complex in a TIP3P water box with 10Å padding. Add 0.15M NaCl.

- Restraints: Apply harmonic positional restraints (force constant 10 kcal/mol/Ų) to all non-interface heavy atoms.

- Simulation: Run a 20ns simulation using AMBER or GROMACS. Maintain temperature at 300K (NVT ensemble).

- Ensemble Generation: Extract 100 equally spaced snapshots from the last 10ns of the trajectory.

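- Scoring & Selection: Re-score each snapshot with DockQ against the experimental reference structure and select the top 5 models by DockQ score for downstream analysis. Because DockQ requires a native reference, this selection step applies to benchmarking only; prospective targets need a reference-free scoring function instead.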

Protocol 2: Benchmarking Docking Accuracy Against AlphaFold Confidence Objective: Systematically evaluate the relationship between per-residue pLDDT and local docking RMSD. Method:

- Dataset Curation: Download the DockGround benchmark set (e.g., "unbound benchmark v5"). Generate AlphaFold2 models for all unbound subunits.

- Local Confidence Calculation: For each residue in the known binding site, extract its pLDDT from the AlphaFold model. Calculate the average for the site.

- Docking Experiment: Perform rigid-body docking using ZDOCK for each unbound/AlphaFold model pair against its known bound partner.

- Metric Correlation: For each top-10 docking pose, calculate the ligand RMSD of the binding site residues. Plot per-residue pLDDT vs. per-residue Cα RMSD of the docked pose to the experimental structure.

- Statistical Analysis: Compute the Pearson correlation coefficient between the average binding site pLDDT and the DockQ score of the best-generated pose.

Visualizations

Title: Workflow: Integrating AF2 Confidence in Drug Discovery

Title: The Flexibility Challenge: From AF2 Model to Successful Docking

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Context | Key Consideration for AF2/DockQ Research |

|---|---|---|

| AlphaFold2/ColabFold | Generates 3D protein structure predictions from sequence. | Use AF2-multimer for complexes. Monitor pLDDT and ipTM scores. |

| Rosetta Suite | Protein structure modeling, refinement, and design. | The relax protocol is standard for refining AF2 models pre-docking. |

| GROMACS/AMBER | Molecular dynamics simulation packages. | Essential for sampling flexibility in low-confidence regions and relaxing models. |

| ZDOCK/HADDOCK | Protein-protein docking software. | Use for benchmarking. HADDOCK can incorporate experimental restraints. |

| UCSF Chimera/PyMOL | Molecular visualization and analysis. | Critical for visualizing pLDDT scores mapped onto models and analyzing interfaces. |

| DockQ Software | Calculates the DockQ score for protein complexes. | The standard metric for evaluating docking accuracy against a known reference. |

| PDBsum | Web-based analysis of PDB files. | Quickly generates interface contact maps and summaries. |

| Benchmark Sets (e.g., DockGround) | Curated datasets of experimentally solved complexes. | Provides "ground truth" for validating predictions and docking protocols. |

Technical Support Center

Troubleshooting Guides & FAQs

Q1: My AlphaFold2 model has high pLDDT (>90) but produces poor DockQ scores (<0.23) in my protein complex docking experiment. What could be the issue?

A: This is a common discrepancy. High pLDDT indicates confident per-residue accuracy within a single chain, not the accuracy of the interfacial residues or the multimer conformation. Please check the following:

- Interface Confidence: Use the predicted aligned error (PAE) plot, specifically the inter-chain PAE for multimers. Low confidence (high error) between chains indicates unreliable relative positioning.

- Template Bias: If your target has a known template in the PDB, AlphaFold may reproduce it with high confidence, even if the template's biological assembly is incorrect. Verify using the template information in the output, together with the `is_prokaryote` flag on releases that still expose it.

- Experimental Protocol Suggestion: Run AlphaFold-Multimer v2.3. Perform 5 model predictions and assess the interface pLDDT and PAE. Dock the top-ranked model and the model with the best interface metrics separately.

Q2: What is the recommended experimental workflow to systematically test the correlation between pLDDT and DockQ?

A: Use a standardized benchmark set. We recommend the following protocol:

- Dataset: Use PDB complexes from the DockQ benchmark (e.g., CASP-CAPRI targets).

- Input Preparation: Generate paired MSAs for each complex chain pair.

- Model Generation: Run AlphaFold-Multimer (v2.3 or later) with `--num-recycle=3` and `--num-models=5` (flag names as used by ColabFold's `colabfold_batch`).

- Confidence Extraction: Parse the output JSON to calculate average pLDDT for: (a) the whole complex, (b) interfacial residues only (within 10Å of the partner chain).

- Docking Assessment: Use the DockQ software to compute the DockQ score for the predicted complex against the experimental structure.

- Correlation Analysis: Perform a linear regression analysis between interface pLDDT and DockQ score across your benchmark.
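
The interface pLDDT in the confidence extraction step can be sketched as follows. This is a simplification under stated assumptions: each residue is represented only by its Cα coordinate, so the 10 Å cutoff is applied to Cα-Cα distances rather than to all atoms, and the input is a hypothetical list of `(chain, residue_number, (x, y, z), plddt)` tuples that you would build from the model file.

```python
import math


def interface_plddt(residues, chain_a, chain_b, cutoff=10.0):
    """Mean pLDDT of residues whose CA lies within `cutoff` of the partner chain."""
    group_a = [r for r in residues if r[0] == chain_a]
    group_b = [r for r in residues if r[0] == chain_b]
    picked = []
    for group, partner in ((group_a, group_b), (group_b, group_a)):
        for _chain, _resseq, xyz, plddt in group:
            if any(math.dist(xyz, other[2]) <= cutoff for other in partner):
                picked.append(plddt)
    # None signals that the two chains are not in contact at this cutoff.
    return sum(picked) / len(picked) if picked else None
```

An all-atom distance criterion would select a somewhat different residue set, so the cutoff definition should be reported alongside any correlation.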

Q3: Which confidence metric (pLDDT, ipTM, pTM, PAE) is most predictive of docking accuracy?

A: Current research (2023-2024) suggests the following hierarchy for protein complexes, summarized in the table below:

| Metric | Scope | Best Predictor For | Typical High-Quality Value |

|---|---|---|---|

| Interface PAE | Residue-pair error between chains | DockQ Accuracy | Low error (<10Å) across interface |

| ipTM (interface pTM) | Whole interface quality | Native-like assembly ranking | >0.8 |

| pLDDT (Interface) | Per-residue confidence at interface | Side-chain reliability | >80 |

| pTM | Overall complex fold | Global fold correctness | >0.7 |

For docking, the inter-chain PAE matrix is the most direct signal. A low-average, uniform PAE across the interface correlates strongly with high DockQ scores.

Q4: I am getting inconsistent DockQ results when using my AlphaFold models. How should I prepare the files for proper evaluation?

A: This is often a file formatting issue. Follow this checklist:

- Reference Structure: Use the biological assembly from the PDB, not the asymmetric unit.

- Model Alignment: The predicted model and reference structure do not need to be superimposed beforehand; DockQ performs its own alignment.

- Chain Matching: The chain IDs in your predicted model must correspond to those in the reference structure. Rename chains if necessary using BIOVIA Discovery Studio or PyMOL.

- File Cleaning: Remove all heteroatoms (water, ions, ligands) from both PDB files before running DockQ.
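
The file cleaning step can be done with a short filter. The sketch below assumes plain PDB text and drops every HETATM record; the function name is illustrative. Note that modified residues such as selenomethionine are also stored as HETATM in many experimental files, so this simple filter removes them too.

```python
def strip_heteroatoms(pdb_text):
    """Return PDB text without HETATM records (waters, ions, ligands)."""
    kept = [line for line in pdb_text.splitlines()
            if not line.startswith("HETATM")]
    return "\n".join(kept)
```

Apply the same cleaning to both the model and the reference so that residue sets stay comparable.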

Research Reagent Solutions Toolkit

| Item | Function in Experiment |

|---|---|

| AlphaFold2 (v2.3.1) | Protein structure prediction software. Use the multimer version for complexes. |

| AlphaFold-Multimer | Specific version optimized for protein-protein complex prediction. |

| ColabFold | Cloud-based implementation combining AlphaFold with fast MMseqs2 for MSA generation. |

| DockQ | Standalone software for continuous quality measure of protein-protein docking models. |

| PDB-Tools Web Server | For cleaning PDB files (removing waters, ligands, standardizing chains). |

| PyMOL/BIOVIA Studio | Molecular visualization and structure manipulation (chain renaming, alignment). |

| CASP-CAPRI Dataset | Curated set of protein complexes for benchmarking docking predictions. |

| Study (Year) | Benchmark Set | Correlation Metric (Interface pLDDT vs. DockQ) | Key Finding |

|---|---|---|---|

| Bryant et al. (2022) | CASP14 Targets | Spearman's ρ = 0.45 | Moderate correlation; high pLDDT necessary but not sufficient for high DockQ. |

| Recent Benchmark (2023) | CAPRI Round 58 | Pearson's r = 0.52 | Interface pLDDT is a better predictor than global pLDDT. |

| Support Center Analysis | Internal Test (50 dimers) | R² = 0.31 (Linear Fit) | DockQ >0.8 (high quality) only observed when interface pLDDT >85 and interface PAE <8Å. |

Experimental Protocol: Validating the Correlation Hypothesis

Title: Protocol for Assessing AlphaFold Confidence vs. Docking Accuracy Correlation.

Methodology:

- Target Selection: Curate 100 non-redundant, high-resolution protein complexes from the PDB.

- Structure Prediction: For each complex, run AlphaFold-Multimer using a local script or ColabFold notebook with default dimer settings and 5 model outputs.

- Confidence Metric Extraction: Write a Python script using `biopython` and the AF output JSON to calculate: (a) Average global pLDDT, (b) Average interface pLDDT, (c) Average interface PAE.

- Accuracy Assessment: Compute DockQ score for each predicted model against its experimental structure using the official DockQ script (`DockQ.py`).

- Statistical Analysis: Use `scipy` in Python to compute Pearson and Spearman correlation coefficients. Generate scatter plots with regression lines.
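
In practice `scipy.stats.pearsonr` and `scipy.stats.spearmanr` cover this step. To show what the rank-based coefficient actually computes, the sketch below implements Spearman's ρ with the standard library only, as the Pearson correlation of average ranks; the function names are illustrative.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(xs, ys):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    rx, ry = average_ranks(xs), average_ranks(ys)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / math.sqrt(sxx * syy)
```

Reporting both coefficients is useful here: a Spearman value well above the Pearson value points to a monotonic but non-linear relationship between confidence and DockQ.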

Visualizations

Title: Workflow for Correlation Testing Between AF Confidence & DockQ

Title: Logic of Hypothesis: Conditions for High DockQ

Troubleshooting Guides & FAQs

Q1: Why is there a poor correlation between my high AlphaFold confidence (pLDDT) score and a successful docking outcome (high DockQ score)? A: This is a common observation. A high pLDDT score indicates high confidence in the intra-molecular structure (folding) of the monomer, but it does not assess the inter-molecular interface quality for docking. The binding site may be accurately folded but in a conformation not conducive to binding your specific ligand or partner protein. Check the predicted aligned error (PAE) matrix, particularly between the binding site and the rest of the protein, for clues about interface flexibility.

Q2: My DockQ score is low (<0.23) despite a high interface pLDDT. What are the first steps in troubleshooting? A: Follow this protocol:

- Validate the Input Model: Run your AlphaFold model through MolProbity or similar to check for steric clashes, rotamer outliers, and Ramachandran plot violations in the binding pocket.

- Examine the PAE: Generate a PAE plot focusing on the predicted binding region. High confidence (low error) within the site but low confidence (high error) between the site and other domains suggests domain orientation issues.

- Check for Missing Residues or Loops: Incomplete modeling of flexible loops at the interface can doom docking. Consider using a loop modeling tool (e.g., MODELLER, Rosetta) to refine these regions.

- Review Experimental Conditions: Ensure the pH and ionic conditions of your docking simulation match the intended experimental context. Protonation states of key residues critically affect docking.

Q3: How do I interpret the Predicted Aligned Error (PAE) matrix in the context of protein-protein docking? A: The PAE matrix predicts the expected positional error (in Ångströms) for residue i if the prediction is aligned on residue j. For docking:

- Low PAE (dark blue, <10 Å) between two sets of residues suggests a confidently predicted relative orientation.

- High PAE (yellow/red, >15 Å) between your predicted binding site residues and the rest of the protein implies the relative position of the site is uncertain. This uncertainty directly translates to poor docking reliability. Use the PAE to guide which domains or subunits to keep rigid during docking.

Q4: Can I use AlphaFold-Multimer's confidence score (iptm+ptm) as a direct proxy for docking success? A: The interface component of that score (ipTM) is a valuable filter but not a perfect proxy. It assesses confidence in the predicted relative placement of the chains, that is, the quaternary structure. A low ipTM (<0.6) strongly suggests the multimer model is unreliable for docking. However, a high ipTM does not guarantee a successful docking run with a novel ligand or a different protein partner, as the interface may be specific to the original multimer prediction.

Q5: What are the recommended steps to refine an AlphaFold model before docking to improve DockQ scores? A: Implement a refinement pipeline:

- Initial Model: AlphaFold2 or AlphaFold3 output.

- Energy Minimization: Use a molecular dynamics package (e.g., GROMACS, AMBER, or CHARMM) to perform a short, restrained minimization that relieves steric clashes.

- Explicit Solvent MD: Run a short molecular dynamics simulation (10-50 ns) in explicit solvent to relax the binding pocket.

- Cluster Analysis: Extract representative snapshots from the stable trajectory region.

- Re-dock: Perform docking using the refined ensemble of structures. This accounts for pocket flexibility.

Table 1: Key Studies on pLDDT/DockQ Correlation

| Study (Year) | System Tested | Key Finding (Correlation) | Recommended pLDDT Cutoff for Docking | DockQ Threshold for Success |

|---|---|---|---|---|

| Bryant et al. (2022) | CASP14 Targets | Weak overall correlation (R~0.4). High pLDDT (>90) necessary but not sufficient for high DockQ. | >90 at interface | >0.23 (Acceptable) |

| Evans et al. (2021) | AlphaFold Multimer v1 | iptm score correlated better with DockQ than average interface pLDDT for complexes. | N/A (Use iptm) | >0.8 (High accuracy) |

| Mariani et al. (2023) | Drug Target Kinases | pLDDT of binding pocket alone poorly predicted ligand docking pose RMSD. Ensemble refinement required. | >85 (pre-refinement) | N/A (Pose RMSD <2Å) |

| Benchmarking Analysis (2024) | PDBBind Dataset | For high-quality models (pLDDT>90), DockQ >0.5 was achieved in only ~65% of cases, highlighting the "confidence gap". | >90 | >0.5 (Medium quality) |

Table 2: Troubleshooting Decision Matrix

| Symptom | Possible Cause | Diagnostic Step | Corrective Action |

|---|---|---|---|

| Low DockQ, High pLDDT | 1. Incorrect protonation; 2. Static binding site | 1. Check residue pKa; 2. Analyze B-factors/PAE | 1. Optimize protonation state; 2. Use ensemble docking |

| Docking Failure | 3. Steric clashes in pocket; 4. Missing loop/cofactor | 3. Run MolProbity; 4. Visual inspection | 3. Energy minimization; 4. Model loop/add cofactor |

| High Score, Incorrect Pose | 5. Scoring function bias; 6. Overly rigid protocol | 5. Use consensus scoring; 6. Check RMSD clustering | 5. Employ multiple scorers; 6. Introduce side-chain flexibility |

Experimental Protocols

Protocol 1: PAE-Focused Model Assessment for Docking

Objective: To evaluate the suitability of an AlphaFold monomer model for protein-protein docking using PAE.

- Generate your protein model using AlphaFold2/3 via ColabFold or local installation.

- Download the `predicted_aligned_error.json` file along with the PDB model.

- Plot the PAE matrix using Python (Matplotlib/Biopython) or the provided ColabFold notebooks. Focus on the region encompassing residues known or predicted to be part of the binding interface.

- Decision Point: If the median PAE between the interface residues and the core structural domains is >12 Å, consider the model's global orientation uncertain. Proceed with rigid-body docking only if the partner is small. For larger partners, seek alternative templates or use integrative modeling.

- If the PAE is low (<10 Å), proceed to docking with the binding site treated as rigid or with limited flexibility.
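The decision point in this protocol can be sketched as a small script. The JSON key names tried in `extract_pae` (`predicted_aligned_error` and `pae`) are assumptions about common output layouts and may need adjusting for your pipeline; residue indices are 0-based, and the "borderline" label for the 10-12 Å gap is an illustrative choice, not part of the protocol.

```python
from statistics import median


def extract_pae(data):
    """Return the PAE matrix from parsed JSON (key names are assumptions)."""
    if isinstance(data, list):  # some files wrap the record in a one-element list
        data = data[0]
    for key in ("predicted_aligned_error", "pae"):
        if key in data:
            return data[key]
    raise KeyError("no PAE matrix found under the assumed keys")


def median_cross_pae(pae, interface, core):
    """Median PAE over all interface/core residue pairs, in both directions."""
    values = [pae[i][j] for i in interface for j in core]
    values += [pae[j][i] for i in interface for j in core]
    return median(values)


def docking_recommendation(median_pae):
    """Apply the protocol's thresholds (>12 Å uncertain, <10 Å rigid)."""
    if median_pae > 12.0:
        return "uncertain"
    if median_pae < 10.0:
        return "rigid"
    return "borderline"  # gap between the two stated thresholds


# Toy 4-residue matrix: residues 0-1 form the site, residues 2-3 the core.
toy_pae = [
    [0, 2, 14, 16],
    [2, 0, 15, 13],
    [14, 15, 0, 1],
    [16, 13, 1, 0],
]
value = median_cross_pae(toy_pae, interface=[0, 1], core=[2, 3])
print(value, docking_recommendation(value))
```

Both directions of each pair are included because the PAE matrix is not symmetric.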

Protocol 2: Ensemble Docking from an MD-Refined AlphaFold Model

Objective: To account for binding site flexibility and improve docking accuracy.

- Model Preparation: Prepare your high pLDDT (>85) AlphaFold model using standard tools (PDBFixer, `pdb4amber`).

- System Solvation: Place the protein in a cubic water box with ~10 Å padding and add ions to neutralize.

- Energy Minimization & Equilibration: Perform 5000 steps of minimization, then heat the system to 300K over 100 ps and equilibrate for 1 ns with positional restraints on protein heavy atoms (force constant 10 kcal/mol/Ų).

- Production MD: Run an unrestrained MD simulation for 20-50 ns. Use a 2 fs timestep and save frames every 10 ps.

- Cluster Analysis: Cluster the last 15 ns of the trajectory based on the RMSD of binding site residues. Extract the central structure from the top 3-5 clusters.

- Ensemble Docking: Perform your docking procedure (e.g., HADDOCK, ZDOCK) against each cluster representative.

- Consensus Analysis: Rank docking poses based on the consensus across the ensemble and their agreement with known experimental data (e.g., mutagenesis).

Visualizations

Title: Decision Flowchart for Using AlphaFold Models in Docking

Title: Workflow for Refining AlphaFold Models Before Docking

The Scientist's Toolkit: Research Reagent Solutions

| Item / Solution | Function in Confidence/Docking Research |

|---|---|

| ColabFold | Cloud-based pipeline for fast AlphaFold2 and AlphaFold-Multimer predictions, providing pLDDT and PAE outputs. |

| PyMOL / ChimeraX | Visualization software for inspecting models, binding sites, pLDDT b-factor coloring, and analyzing docking poses. |

| HADDOCK | Information-driven docking software that can incorporate data from PAE (as restraints) and experimental constraints. |

| GROMACS / AMBER | Molecular dynamics suites for energy minimization and ensemble generation of AlphaFold models prior to docking. |

| DockQ | Standardized metric for evaluating the quality of protein-protein docking models, providing a single score (0-1). |

| ProDy / BioPython | Python libraries for analyzing PAE matrices, calculating interface residues, and manipulating structural ensembles. |

| MolProbity | Server for validating the stereochemical quality of protein structures, identifying clashes and rotamer issues. |

| UCSF Dock 6 / AutoDock Vina | Tools for small molecule docking into flexible binding sites of refined AlphaFold models. |

| CONCOORD / FRODAN | Tools for generating conformational ensembles directly from a single structure, alternative to full MD. |

From Prediction to Assessment: A Practical Guide to Using AlphaFold with DockQ

Technical Support & Troubleshooting Center

Troubleshooting Guides

Issue: AlphaFold Multimer Fails to Generate a Prediction or Crashes During the run_alphafold.py Stage.

- Q: What are the most common causes for a complete failure at the prediction generation stage?

- A: This is typically a resource or input data issue. First, verify your computational resources (GPU memory ≥ 16GB for most complexes). Check your input FASTA file format; ensure headers are simple (e.g., `>chain_A`) and sequences contain only valid amino acid codes. A common cause is an out-of-memory error for very large complexes (>1500 residues total). Consider using the `--max_template_date` flag to restrict the template search by release date if using outdated databases.
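A quick pre-flight check of the FASTA input can catch these problems before a long run starts. The sketch below is illustrative only: the definition of a "simple" header (letters, digits, and underscores) and the function names are assumptions, while the 1500-residue limit comes from the answer above.

```python
VALID_AA = set("ACDEFGHIKLMNPQRSTVWY")  # the 20 standard amino acid codes


def parse_fasta(text):
    """Return a list of (header, sequence) tuples from FASTA text."""
    records, header, chunks = [], None, []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith(">"):
            if header is not None:
                records.append((header, "".join(chunks)))
            header, chunks = line[1:], []
        elif header is None:
            raise ValueError("sequence data found before the first header")
        else:
            chunks.append(line.upper())
    if header is not None:
        records.append((header, "".join(chunks)))
    return records


def check_fasta(text, max_total=1500):
    """Return a list of human-readable problems (empty if none are found)."""
    problems = []
    records = parse_fasta(text)
    if not records:
        problems.append("no FASTA records found")
    total = 0
    for header, seq in records:
        # Assumed rule: a simple header uses only letters, digits, underscores.
        if not header.replace("_", "").isalnum():
            problems.append(f"header '{header}' is not a simple identifier")
        if not seq:
            problems.append(f"record '{header}' has an empty sequence")
        invalid = sorted(set(seq) - VALID_AA)
        if invalid:
            problems.append(f"record '{header}' has invalid codes: {invalid}")
        total += len(seq)
    if total > max_total:
        problems.append(f"{total} residues in total; out-of-memory risk")
    return problems


print(check_fasta(">chain_A\nMKTAYIAK\n>chain_B\nGAVLIPFW\n"))
```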

Issue: Very Low pLDDT or pTM Confidence Scores Across the Entire Predicted Complex.

- Q: My model is generated but has uniformly low confidence (e.g., average pLDDT < 50). What steps should I take?

- A: Low global confidence suggests a failure in generating meaningful multiple sequence alignments (MSAs) or an intrinsically disordered target. 1) Verify your sequence databases (e.g., BFD, MGnify) are correctly linked and not corrupted. 2) Check the `features.pkl` output to see if MSAs are populated. 3) Consider running with `--db_preset=full_dbs` (if you were using `reduced_dbs`) to get more comprehensive MSAs. 4) Review literature to see if your target complex is known to have disordered regions.

Issue: Specific Interface or Subunit Has Unusually Low Confidence While the Rest is High.

- Q: One chain in my multimer has high pLDDT, but another and their interface show very low scores. How should I interpret this?

- A: This is a critical observation for thesis research comparing confidence to DockQ. It often indicates a weak or non-physical interaction in the predicted model. First, run the prediction with multiple `--model_preset=multimer` seeds (e.g., 1, 2, 3). If the low-confidence interface is inconsistent across seeds, the interaction is likely not confidently predicted. If it is consistent but low-scoring, it may suggest the interaction requires co-factors, post-translational modifications, or is not stable in isolation.

Issue: Discrepancy Between High pTM Score and Visually Poor Interface Quality.

- Q: My model reports a decent predicted TM Score (pTM > 0.6), but manual inspection in PyMOL/Chimera shows clashing or unrealistic binding. What does this mean?

- A: This scenario is a key case study for your thesis. pTM is a global measure of fold similarity, not a local measure of interface stereochemical quality. A high pTM can sometimes be achieved with correct overall chain folds but a misregistered interface. Always cross-check with the Interface predicted TM Score (ipTM), which is weighted for the interface, and the per-residue pLDDT at the interface. A high pTM with low ipTM flags this exact issue.

Frequently Asked Questions (FAQs)

Q1: What is the practical difference between pLDDT, pTM, and ipTM scores in AlphaFold Multimer output?

- A:

- pLDDT (per-residue confidence): Local measure of reliability for each residue's backbone and sidechain atoms (0-100). <50 is very low, >70 is good, >90 is high.

- pTM (predicted Template Modeling score): Global measure of the expected similarity of the entire complex's fold to a hypothetical true structure (0-1).

- ipTM (interface pTM): A subset of pTM focusing on the reliability of the interfaces between chains.

For complex assessment, prioritize ipTM and interface pLDDT over global pTM.

Q2: For my thesis on confidence vs. DockQ, how many prediction seeds (`--num_multimer_predictions_per_model`) should I run?

- A: For robust statistical comparison, a minimum of 3 seeds is essential. AlphaFold Multimer's stochasticity can produce different interface conformations. Running 5 seeds is recommended for publication-quality analysis. You will then have multiple predictions to calculate the standard deviation of confidence scores (pLDDT/pTM/ipTM) and to compare against the DockQ of each model, revealing the correlation (or lack thereof) between confidence and actual accuracy.

Q3: How do I definitively extract and calculate the interface pLDDT for comparison with DockQ?

- A: You must first define the interface residues (e.g., residues in chain A within 10 Å of any atom in chain B). Use a script (e.g., with BioPython or MDTraj) to parse the predicted model PDB and the per-residue pLDDT scores from the `scores_json` file. Then, compute the average pLDDT for only that subset of residues. This interface-specific pLDDT is a more precise confidence metric for docking accuracy research than the global average.
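As a rough illustration of that calculation, the sketch below reads pLDDT from the B-factor column of the model PDB instead of a JSON file, and uses a naive all-pairs distance loop. It assumes standard fixed-column ATOM records and counts interface residues on both chains; for large complexes a neighbor search such as Biopython's `NeighborSearch` would be much faster.

```python
import math


def read_atoms(pdb_text):
    """Parse ATOM records into (chain, residue number, xyz, B-factor) tuples."""
    atoms = []
    for line in pdb_text.splitlines():
        if line.startswith("ATOM"):
            chain = line[21]
            resseq = int(line[22:26])
            xyz = (float(line[30:38]), float(line[38:46]), float(line[46:54]))
            plddt = float(line[60:66])  # AlphaFold stores pLDDT in the B-factor field
            atoms.append((chain, resseq, xyz, plddt))
    return atoms


def interface_plddt(pdb_text, chain_a="A", chain_b="B", cutoff=10.0):
    """Mean pLDDT over residues of either chain within `cutoff` Å of the other."""
    atoms = read_atoms(pdb_text)
    side_a = [a for a in atoms if a[0] == chain_a]
    side_b = [a for a in atoms if a[0] == chain_b]
    plddt = {(a[0], a[1]): a[3] for a in side_a + side_b}
    interface = set()
    for atom_a in side_a:
        for atom_b in side_b:
            if math.dist(atom_a[2], atom_b[2]) <= cutoff:
                interface.add((atom_a[0], atom_a[1]))
                interface.add((atom_b[0], atom_b[1]))
    if not interface:
        return None, []
    mean = sum(plddt[key] for key in interface) / len(interface)
    return mean, sorted(interface)
```

A call such as `interface_plddt(open("ranked_0.pdb").read())` would return the mean interface pLDDT together with the list of residues used, which makes it easy to check that the interface definition is sensible.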

Q4: Which experimental protocol should I use to benchmark my AlphaFold Multimer predictions for the thesis?

- A: Use a standardized pipeline: 1) Generate Predictions: Run AlphaFold Multimer with 5 seeds on your target complexes. 2) Select Model: Choose the model with the highest ipTM score from the first seed for initial analysis. 3) Calculate DockQ: For each prediction, use the DockQ software (https://github.com/bjornwallner/DockQ) to compute the DockQ score against the experimentally solved reference structure (PDB). 4) Correlate: Perform linear regression or rank correlation analysis between your confidence metrics (ipTM, interface pLDDT) and the DockQ score across your dataset.

Q5: My target complex includes a small molecule ligand or ion. Can AlphaFold Multimer predict this?

- A: No. AlphaFold Multimer predicts protein-protein interactions only. It will not model non-protein molecules. The presence of a crucial ligand may be a reason for low interface confidence if the true binding is ligand-dependent. This is a known limitation and should be discussed in your thesis as a factor decoupling AlphaFold confidence from DockQ accuracy for certain target classes.

Table 1: Interpretation of AlphaFold Multimer Confidence Metrics

| Metric | Range | High Confidence | Medium Confidence | Low Confidence | Primary Use |

|---|---|---|---|---|---|

| pLDDT | 0-100 | >90 | 70-90 | <50 | Per-residue local accuracy |

| pTM | 0-1 | >0.8 | 0.6-0.8 | <0.5 | Overall complex fold correctness |

| ipTM | 0-1 | >0.7 | 0.5-0.7 | <0.4 | Reliability of protein-protein interfaces |

Table 2: Example Correlation Data: Confidence Scores vs. DockQ Accuracy

| Complex (PDB) | Predicted ipTM | Interface pLDDT | DockQ Score | DockQ Category |

|---|---|---|---|---|

| 1AKJ (Dimer) | 0.82 | 88 | 0.80 | High Quality |

| 2A9K (Trimer) | 0.65 | 76 | 0.58 | Medium Quality |

| 3FAP (Dimer)* | 0.91 | 92 | 0.23 | Incorrect |

*Example of a high-confidence, low-accuracy outlier, crucial for thesis analysis.
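Outliers like the starred row can be flagged automatically once the table is in machine-readable form. The sketch below copies the three example rows from the table above; the cutoffs (ipTM of at least 0.8 and DockQ below 0.49) are illustrative assumptions and should be tuned to the dataset.

```python
# Rows copied from the example table: (complex, ipTM, interface pLDDT, DockQ).
EXAMPLE_ROWS = [
    ("1AKJ", 0.82, 88, 0.80),
    ("2A9K", 0.65, 76, 0.58),
    ("3FAP", 0.91, 92, 0.23),
]


def confidence_outliers(rows, iptm_min=0.8, dockq_max=0.49):
    """Return complexes predicted with high ipTM but scoring a low DockQ."""
    return [
        name
        for name, iptm, _plddt, dockq in rows
        if iptm >= iptm_min and dockq < dockq_max
    ]


print(confidence_outliers(EXAMPLE_ROWS))
```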

Experimental Protocol for Thesis Benchmarking

Title: Protocol for Correlating AlphaFold Multimer Confidence with DockQ Accuracy.

Methodology:

- Dataset Curation: Compile a set of 20-50 non-redundant protein complexes with solved high-resolution X-ray/cryo-EM structures from the PDB. Include dimers and higher-order oligomers.

- Prediction Generation:

- Input: Prepare a FASTA file for each complex.

- Command: `python3 run_alphafold.py --fasta_paths=/target.fasta --model_preset=multimer --db_preset=full_dbs --output_dir=/output --num_multimer_predictions_per_model=5`

- Repeat for all complexes.

- Data Extraction:

- From the `ranking_debug.json` file in the output directory, extract the ipTM and pTM for the top-ranked model.

- From the model `scores.json` file, extract the per-residue pLDDT values.

- Using a distance cutoff (e.g., 10 Å), calculate the average interface pLDDT.

- DockQ Calculation:

- Structural alignment of the predicted model (`ranked_0.pdb`) to the experimental structure (`reference.pdb`).

- Run DockQ: `./DockQ.py ranked_0.pdb reference.pdb`

- Record the DockQ score and quality category (High/Medium/Acceptable/Incorrect).

- Statistical Analysis:

- Plot ipTM vs. DockQ and interface pLDDT vs. DockQ.

- Calculate Pearson and Spearman correlation coefficients.

- Identify and analyze outliers (e.g., high-confidence, low-accuracy predictions).

Visualizations

Title: AlphaFold Multimer Workflow & Thesis Evaluation Pathway

Title: Thesis Benchmarking: Confidence Scores vs. DockQ

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials & Tools for AlphaFold Multimer Research

| Item | Function/Description | Example/Provider |

|---|---|---|

| AlphaFold Multimer Code | Core software for protein complex structure prediction. | GitHub: deepmind/alphafold |

| Reference Protein Datasets | Curated sets of known complexes for benchmarking (e.g., DockGround, PDB). | PDB (rcsb.org), DockGround |

| DockQ Software | Objective metric for evaluating protein-protein docking accuracy. | GitHub: bjornwallner/DockQ |

| Molecular Viewer | For visual inspection of predicted interfaces and clashes. | PyMOL, UCSF ChimeraX |

| Jupyter Notebook / Python | For scripting data extraction, interface residue analysis, and plotting. | Anaconda Distribution |

| High-Performance Computing | GPU cluster or cloud instance (e.g., NVIDIA A100, V100) for running predictions. | Local HPC, Google Cloud, AWS |

| Sequence Databases | Required for MSA generation (UniRef90, MGnify, BFD, etc.). | Provided by DeepMind, download required. |

Extracting and Interpreting Key Confidence Metrics from AlphaFold Output

FAQs

Q1: What are the primary confidence metrics in an AlphaFold output, and where can I find them? AlphaFold provides several per-residue and per-model confidence metrics. The most commonly used are:

- pLDDT (predicted Local Distance Difference Test): A per-residue estimate of local confidence on a scale from 0-100. Found in the B-factor column of the output PDB file and in the `predicted_aligned_error` JSON file.

- pAE (predicted Aligned Error): A 2D matrix giving, for each residue pair, the expected positional error (in Ångströms) of one residue when the prediction is aligned on the other. Found in the `predicted_aligned_error` JSON file.

- ipTM+pTM (interface pTM + predicted TM-score): A composite score (0-1) estimating the overall model accuracy, with ipTM specifically relevant for complexes. Reported in the `ranking_debug` JSON file.

Q2: How should I interpret a low pLDDT score for a specific region of my model? A pLDDT score below 50 indicates very low confidence, 50-70 indicates low confidence, 70-90 indicates confident, and >90 indicates very high confidence. Regions with pLDDT < 70 are likely to be disordered, flexible, or poorly modeled and should generally not be used for downstream analysis like molecular docking or detailed mechanistic interpretation.
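The bands above translate directly into a small helper. The sketch below also locates contiguous stretches below the 70 cutoff so they can be masked or trimmed before docking; the function names are illustrative and the indices are 0-based.

```python
def plddt_band(score):
    """Map a pLDDT value (0-100) to the confidence bands described above."""
    if not 0 <= score <= 100:
        raise ValueError("pLDDT must lie between 0 and 100")
    if score < 50:
        return "very low"
    if score < 70:
        return "low"
    if score <= 90:
        return "confident"
    return "very high"


def low_confidence_segments(plddt, threshold=70.0):
    """Return inclusive (start, end) index ranges where pLDDT < threshold."""
    segments, start = [], None
    for i, score in enumerate(plddt):
        if score < threshold and start is None:
            start = i
        elif score >= threshold and start is not None:
            segments.append((start, i - 1))
            start = None
    if start is not None:
        segments.append((start, len(plddt) - 1))
    return segments


print(low_confidence_segments([95, 60, 40, 80, 91, 55]))
```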

Q3: My predicted model has high overall pLDDT but a known binding site residue has very low pLDDT. What does this mean for my docking studies? This is a critical observation in the context of confidence score versus DockQ accuracy research. It suggests that while the global fold is confident, the local geometry of the functional site is unreliable. Docking into this site is highly likely to produce inaccurate poses and misleading results. You should treat any conclusions from such an experiment with extreme caution.

Q4: How can I use the predicted Aligned Error (pAE) plot to assess a protein-protein interface? Inspect the pAE matrix for the region where the two chains interact. Low error values (dark blue, < 5Å) at the interface indicate high confidence in the relative positioning of the two subunits. A block of high error values (yellow/red, > 10Å) at the interface suggests the quaternary structure prediction is low confidence, which directly correlates with potential low DockQ scores in validation studies.
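For a two-chain model, the interface region of the pAE matrix is simply its two off-diagonal blocks. The sketch below averages those blocks and applies the 5 Å and 10 Å cutoffs mentioned above; it assumes chain A occupies the first `len_a` rows and columns, and the "intermediate" label for values between the cutoffs is an illustrative addition.

```python
def interchain_pae(pae, len_a):
    """Mean PAE over both off-diagonal (chain A vs. chain B) blocks."""
    n = len(pae)
    block = [pae[i][j] for i in range(len_a) for j in range(len_a, n)]
    block += [pae[i][j] for i in range(len_a, n) for j in range(len_a)]
    return sum(block) / len(block)


def interface_confidence(mean_pae):
    """Label the interface using the cutoffs from the answer above."""
    if mean_pae < 5.0:
        return "high"
    if mean_pae > 10.0:
        return "low"
    return "intermediate"


# Toy 3-residue complex: chain A has two residues, chain B has one.
toy = [
    [0, 1, 4],
    [1, 0, 6],
    [3, 5, 0],
]
mean_pae = interchain_pae(toy, len_a=2)
print(mean_pae, interface_confidence(mean_pae))
```

Restricting the average to residues that are actually in contact, instead of whole chains, usually gives a sharper signal for large subunits.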

Q5: What is the recommended threshold for ipTM+pTM to consider a multimeric model for experimental validation? Current research suggests that models with an ipTM+pTM score > 0.8 are generally of high quality. Scores between 0.6 and 0.8 should be interpreted with caution alongside pLDDT and pAE data. Models with ipTM+pTM < 0.6 are often considered unreliable for complex structure prediction in a high-stakes research context.
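For reference, AlphaFold-Multimer ranks its models by a weighted sum, 0.8·ipTM + 0.2·pTM, which is what the ipTM+pTM score denotes. The sketch below computes it and applies the bands given in this answer; the band labels are illustrative names.

```python
def ranking_confidence(iptm, ptm):
    """AlphaFold-Multimer model confidence: 0.8 * ipTM + 0.2 * pTM."""
    return 0.8 * iptm + 0.2 * ptm


def triage(score):
    """Apply the bands from the answer above to an ipTM+pTM score."""
    if score > 0.8:
        return "high quality"
    if score >= 0.6:
        return "interpret with caution"
    return "unreliable"


score = ranking_confidence(iptm=0.9, ptm=0.8)
print(round(score, 2), triage(score))
```

Because ipTM carries four times the weight of pTM, a complex with well-folded chains but a poorly placed partner still receives a low combined score.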

Troubleshooting Guides

Issue: Inconsistent Confidence Readings Between pLDDT and pAE

- Problem: A region shows moderately high pLDDT (>70) but high predicted error in pAE relative to another key region.

- Diagnosis: This indicates high local confidence but low confidence in the relative placement of two domains or secondary structure elements. The fold of each segment may be correct individually, but their orientation may be wrong.

- Solution:

- Isolate the high-pLDDT regions and check their individual structures against known domains (e.g., using Foldseek).

- Do not trust functional analyses that depend on the precise spatial relationship between the two high-pLDDT regions in question.

- Consult the `ranking_debug.json` file to see if other models in the ensemble show a more consistent relationship.
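Listing every ranked model with its score is a convenient starting point for that comparison. The sketch below assumes the layout written by the open-source AlphaFold pipeline: an `order` list plus one score dictionary keyed `iptm+ptm` for multimer runs (or `plddts` for monomer runs). The model names and values in the example are made up.

```python
import json


def ranked_models(ranking_debug_text):
    """Return (score_key, [(model_name, score), ...]) in ranked order."""
    data = json.loads(ranking_debug_text)
    # Assumed layout: one key named "order", one key holding the scores.
    score_key = next(key for key in data if key != "order")
    scores = data[score_key]
    return score_key, [(name, scores[name]) for name in data["order"]]


# Hypothetical file content, for illustration only.
example = json.dumps({
    "iptm+ptm": {
        "model_1_multimer_v3_pred_0": 0.71,
        "model_2_multimer_v3_pred_0": 0.84,
    },
    "order": ["model_2_multimer_v3_pred_0", "model_1_multimer_v3_pred_0"],
})
key, models = ranked_models(example)
print(key, models)
```

A large score gap between the first and later entries is itself a hint that the alternative models disagree about the arrangement.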

Issue: Poor Correlation Between AlphaFold Confidence and Experimental Docking (DockQ) Accuracy

- Problem: Your validation study shows that models with high AlphaFold confidence metrics sometimes yield low DockQ scores when used for protein-protein docking.

- Diagnosis: This is a known research frontier. AlphaFold confidence metrics are derived from the training process and may not fully capture all aspects of functional binding geometry, especially for novel interactions or induced-fit binding.

- Solution Protocol:

- Generate Models: Run AlphaFold for your target protein.

- Extract Metrics: Parse the pLDDT, pAE (interface region), and ipTM+pTM scores.

- Perform Rigid-Body Docking: Use the high-confidence model (ranked_0.pdb) in a standard protein-protein docking pipeline (e.g., HADDOCK, ClusPro).

- Calculate DockQ: Compare the top docking pose to a known experimental structure (if available) using the DockQ software to obtain an accuracy score.

- Correlation Analysis: Plot confidence metrics (e.g., average interface pLDDT, interface pAE) against the DockQ score. Use this to establish institution- or project-specific confidence thresholds for docking.

Experimental Protocol: Validating AlphaFold Confidence Against DockQ Accuracy

Objective: To empirically determine the relationship between AlphaFold2 output confidence metrics and the achievable accuracy in protein-protein docking simulations.

Methodology:

- Dataset Curation: Select a set of 20-50 non-redundant protein complexes with known high-resolution experimental structures (from the PDB). Split into monomeric sequences.

- Structure Prediction: Run AlphaFold2 (or AlphaFold-Multimer for complexes) on the monomeric sequences using standard settings.

- Metric Extraction:

- Parse the `ranking_debug.json` for the ipTM+pTM score of the top-ranked model.

- From the top-ranked PDB, calculate the average pLDDT for all residues.

- From the `predicted_aligned_error.json`, calculate the average pAE specifically for residue pairs across the known interface (defined from the experimental complex).

- Computational Docking: Using only the predicted monomer from step 2, perform rigid-body docking against its known partner (using its experimental structure or a separate AlphaFold prediction) with HADDOCK2.4, allowing only minor side-chain flexibility.

- Accuracy Assessment: For the top 10 docking poses, compute the DockQ score by comparing each pose to the experimental reference structure.

- Statistical Analysis: Perform linear regression between the extracted AlphaFold confidence metrics (ipTM+pTM, interface pLDDT, interface pAE) and the best DockQ score achieved.

Key Quantitative Data Summary

Table 1: Correlation Coefficients (R²) Between AlphaFold Metrics and DockQ Score in a Benchmark Study

| AlphaFold Confidence Metric | Correlation with DockQ Score (R²) | Interpretation for Drug Development |

|---|---|---|

| ipTM+pTM Score | 0.65 - 0.75 | Strong overall predictor. Use a threshold >0.7 for docking campaigns. |

| Average Interface pLDDT | 0.55 - 0.65 | Moderate predictor. Insufficient on its own; combine with pAE. |

| Average Interface pAE | 0.70 - 0.80 | Strong predictor. Low interface pAE (<5Å) is crucial for success. |

| Composite Score (pLDDT & pAE) | 0.75 - 0.85 | Best practice. Use both to filter models before docking. |

Table 2: DockQ Success Rates by AlphaFold Confidence Bands

| ipTM+pTM Band | Avg. Interface pAE Band | Probability of DockQ > 0.5 (Medium or better) | Probability of DockQ > 0.8 (High Accuracy) |

|---|---|---|---|

| > 0.8 | < 6 Å | 85% | 45% |

| 0.6 - 0.8 | 6 - 10 Å | 50% | 10% |

| < 0.6 | > 10 Å | 15% | < 2% |

Visualizations

Title: Workflow for Extracting and Applying AlphaFold Confidence Metrics

Title: Decoding a pAE Matrix for Interface Assessment

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for AlphaFold Confidence & Docking Validation Workflow

| Item | Function & Relevance |

|---|---|

| AlphaFold2 (ColabFold) | Primary structure prediction tool. ColabFold offers faster, user-friendly access. |

| HADDOCK2.4 / ClusPro | Protein-protein docking software to generate complex poses from AlphaFold monomers. |

| DockQ Software | Critical validation tool. Computes a continuous score (0-1) quantifying the similarity of a predicted docked pose to a native reference structure. |

| pLDDT & pAE Parsing Script (Python) | Custom script (using Biopython, NumPy) to extract per-residue confidence and interface-specific average errors from AlphaFold output files. |

| Benchmark Dataset (e.g., PDB) | Curated set of known protein complexes with high-resolution structures, used as ground truth for validation studies. |

| Statistical Software (R/Python) | For performing correlation analysis (linear regression) between extracted confidence metrics and DockQ scores to establish predictive thresholds. |

Troubleshooting & FAQ Guide

Q1: I ran a DockQ calculation on my AlphaFold-Multimer model, but the score is unusually low (<0.23) even though the model looks plausible. What could be the cause?

A: This is a common issue. First, verify the reference structure alignment. DockQ requires the two protein chains in your model to be in the same order and have identical residue numbering as the native reference structure. Use clean_pdb.py or a similar script to re-number your model and reference PDB files before analysis. Second, ensure you are using the correct chain identifiers in the DockQ command. A mismatch will result in incorrect interface identification and a low score.

Q2: When using the DockQ script locally, I get an error: "ImportError: No module named Bio." How do I resolve this?

A: The DockQ script depends on the Biopython library. You can install it using pip: pip install biopython. If you are in a managed HPC environment, load the appropriate module (e.g., module load biopython). For a comprehensive, conflict-free setup, we recommend using a Conda environment with the biopython package.

Q3: My reference complex has more than two chains. Can DockQ handle this?

A: The standard DockQ script is designed for binary protein complexes. For multi-chain complexes, you must calculate DockQ for each unique interacting pair separately and then consider the average or minimum score. Alternatively, explore modified community scripts or other metrics like iRMSD from CAPRI for global assessment.
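The pairwise approach can be organized as follows. This is a sketch only: it enumerates all chain pairs, and you would keep just the pairs that actually share an interface in the reference structure before scoring them. The DockQ values in the example are hypothetical.

```python
from itertools import combinations


def chain_pairs(chains):
    """All unique unordered chain pairs, e.g. 'ABC' -> AB, AC, BC."""
    return list(combinations(chains, 2))


def summarize_pairwise(dockq_by_pair):
    """Mean and minimum DockQ over the scored interacting pairs."""
    scores = list(dockq_by_pair.values())
    return {"mean": sum(scores) / len(scores), "min": min(scores)}


print(chain_pairs("ABC"))
# Hypothetical per-interface scores for a trimer in which A and C do not touch.
print(summarize_pairwise({("A", "B"): 0.8, ("B", "C"): 0.4}))
```

The minimum is the more conservative summary, since a single badly placed subunit can hide behind a good average.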

Q4: Is there a significant difference between running DockQ locally versus on an online server, and which is more reliable for thesis-level research?

A: For critical validation in published research, the local script (DockQ v1.6+) is recommended. It provides full control over parameters and is reproducible. Online servers (see Table 1) are excellent for quick checks but may use older versions and have file size/upload limitations. Consistency in your chosen method across all analyses in your thesis is paramount for comparative accuracy versus pLDDT/ipTM studies.

Q5: How do I interpret a DockQ score of 0.58 with an AlphaFold-Multimer model that has a high ipTM (>0.80)?

A: This scenario is central to the AlphaFold confidence vs. DockQ accuracy thesis research. A high ipTM suggests the model is confident in its interface prediction, but DockQ measures actual geometric correctness against a known native structure. A moderate DockQ score (0.58 = "medium" quality) with a high ipTM could indicate systematic biases in the training set or that AlphaFold is accurately modeling a non-crystallographic biological state. Cross-validate with other metrics like iRMSD and visual inspection.

Table 1: Comparison of DockQ Calculation Platforms

| Tool/Server | Current Version | Input Format | Output Metrics | Best For | Limitations |

|---|---|---|---|---|---|

| Local DockQ Script | 1.6+ (GitHub) | PDB files (model & native) | DockQ, Fnat, iRMSD, LRMS | Full control, batch processing, research | Requires local install & dependencies |

| DockQ Online Server | NA | PDB file upload via web | DockQ, Fnat, iRMSD | Quick validation, no installation | Max 10MB upload, slower for batches |

| PDB-Tools Web Server | NA | PDB ID or file upload | Multiple, inc. DockQ | Integrated analysis suite | Less transparent versioning |

| BioJava DockQ Lib | Integrated | Programmatic (Java) | DockQ score | Integration into custom pipelines | Requires Java development skills |

Table 2: DockQ Score Interpretation (CAPRI Quality Criteria)

| DockQ Score Range | Quality Category | Fnat Threshold | iRMSD Threshold (Å) | LRMSD Threshold (Å) |

|---|---|---|---|---|

| 0.80 – 1.00 | High | ≥ 0.50 | ≤ 1.0 | ≤ 1.0 |

| 0.49 – 0.79 | Medium | ≥ 0.30 | ≤ 2.0 | ≤ 5.0 |

| 0.23 – 0.48 | Acceptable | ≥ 0.10 | ≤ 4.0 | ≤ 10.0 |

| 0.00 – 0.22 | Incorrect | < 0.10 | > 4.0 | > 10.0 |
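Assigning the category programmatically avoids transcription slips in large benchmarks. The sketch below uses the cutoffs given in the original DockQ publication (0.23, 0.49, and 0.80); the function name is illustrative.

```python
def dockq_category(score):
    """Map a DockQ score (0-1) to its CAPRI-style quality category."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("DockQ must lie between 0 and 1")
    if score >= 0.80:
        return "High"
    if score >= 0.49:
        return "Medium"
    if score >= 0.23:
        return "Acceptable"
    return "Incorrect"


for value in (0.85, 0.58, 0.30, 0.10):
    print(value, dockq_category(value))
```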

Experimental Protocols

Protocol 1: Standard Local DockQ Calculation for AlphaFold-Multimer Output

Objective: To calculate the DockQ score for an AlphaFold-Multimer predicted model against its experimentally solved native structure.

- Prerequisite Software: Install Python 3.x, Biopython, and the DockQ script (`DockQ.py`) from the official GitHub repository.

File Preparation:

- Obtain your AlphaFold-Multimer model in PDB format (`model.pdb`).

- Obtain the native/reference complex structure (`native.pdb`). Ensure it is from the same organism and, if applicable, the same mutant.

- Run a cleaning script to ensure consistent residue numbering and chain IDs.

Execution: Run the DockQ script from the command line, passing the model first and the native structure second (e.g., `./DockQ.py model.pdb native.pdb`).

Output Interpretation: The terminal will display Fnat, iRMSD, LRMSD, and the composite DockQ score. Record these values and classify the model based on Table 2.
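It helps to know how the composite score is built from the three reported components. As defined in the DockQ publication, each RMSD term is rescaled to the 0-1 range with 1/(1 + (RMSD/d)²), using d = 1.5 Å for iRMSD and d = 8.5 Å for LRMSD, and the result is averaged with Fnat. A minimal sketch, with illustrative function names:

```python
def scaled_rmsd(rmsd, d):
    """Rescale an RMSD to (0, 1]; equals 0.5 when rmsd == d."""
    return 1.0 / (1.0 + (rmsd / d) ** 2)


def dockq_score(fnat, irmsd, lrmsd):
    """Composite DockQ: mean of Fnat and the two rescaled RMSD terms."""
    return (fnat + scaled_rmsd(irmsd, 1.5) + scaled_rmsd(lrmsd, 8.5)) / 3.0


print(round(dockq_score(fnat=0.5, irmsd=1.5, lrmsd=8.5), 3))
```

Recomputing the score this way is a cheap sanity check when values are copied between result files and spreadsheets.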

Protocol 2: Batch Analysis for Thesis Correlation Studies (pLDDT/ipTM vs. DockQ)

Objective: To systematically evaluate the correlation between AlphaFold confidence metrics (pLDDT, ipTM) and DockQ accuracy across a dataset of protein complexes.

- Dataset Curation: Compile a list of 50-100 non-redundant protein complexes with high-resolution experimental structures (e.g., from PDB).

- Model Generation: Run AlphaFold-Multimer (local or via Colab) for each target, extracting the ranked_0.pdb file, the per-residue pLDDT, and the model ipTM score.

Automated DockQ Scoring: Create a shell script (e.g., `batch_dockq.sh`) to iterate over your dataset and save one DockQ result file per target.

Data Collation: Write a parsing script (Python/R) to extract DockQ scores from all result files and pair them with the corresponding ipTM and average interface pLDDT values.

- Statistical Analysis: Calculate Pearson/Spearman correlation coefficients and generate scatter plots (ipTM vs. DockQ, interface pLDDT vs. DockQ) for your thesis results section.
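The collation step might look like the sketch below. It assumes the plain-text report of the original DockQ script, in which result lines begin with `Fnat`, `iRMS`, `LRMS`, or `DockQ` followed by a number; newer DockQ releases print a different format, so adjust the pattern to match your version. The target name and confidence values are hypothetical.

```python
import re

# Assumed line format, e.g. "DockQ 0.700" (original DockQ script output).
_LINE = re.compile(r"(Fnat|iRMS|LRMS|DockQ)\s+(\d+(?:\.\d+)?)")


def parse_dockq_output(text):
    """Extract Fnat, iRMS, LRMS, and DockQ values from one result file."""
    values = {}
    for line in text.splitlines():
        match = _LINE.match(line.strip())
        if match:
            values[match.group(1)] = float(match.group(2))
    return values


def collate(dockq_outputs, confidence):
    """Pair each target's DockQ with its (ipTM, interface pLDDT) tuple."""
    rows = []
    for target, text in sorted(dockq_outputs.items()):
        parsed = parse_dockq_output(text)
        if "DockQ" in parsed and target in confidence:
            iptm, plddt = confidence[target]
            rows.append((target, iptm, plddt, parsed["DockQ"]))
    return rows


sample = """Fnat 0.533 32 correct of 60 native contacts
Fnonnat 0.238 10 non-native of 42 model contacts
iRMS 1.232
LRMS 1.516
DockQ 0.700
"""
print(collate({"target_01": sample}, {"target_01": (0.82, 88.0)}))
```

The resulting rows can be written to CSV and passed straight to the correlation and plotting step.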

Visualizations

Title: DockQ Validation Workflow for AlphaFold Models

Title: Interpreting DockQ Scores for Thesis Research

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for DockQ Validation Studies

| Item | Function/Description | Example/Source |
|---|---|---|
| Reference PDB Set | High-resolution, non-redundant experimental structures of protein complexes for validation. | Benchmark sets like DOCKGROUND or PDB select. |
| AlphaFold-Multimer | Prediction engine to generate 3D models of protein complexes. | Local installation, ColabFold, or AlphaFold Server. |
| DockQ Script (Python) | Core software for calculating the composite DockQ score and components. | Official GitHub repository (DockQ.py). |
| Biopython Library | Critical dependency for PDB file parsing within the DockQ script. | Install via pip install biopython. |
| PDB Cleaning Script | Standardizes residue numbering and chain IDs between model and native files. | clean_pdb.py often bundled with DockQ. |
| Conda Environment | Manages software dependencies and ensures version reproducibility. | Anaconda or Miniconda distribution. |
| Data Parsing Script | Custom script (Python/R) to extract and correlate DockQ, pLDDT, and ipTM from batch results. | Self-written using pandas (Python) or tidyverse (R). |
| Visualization Software | Generates publication-quality plots of correlation data. | Matplotlib/Seaborn (Python), ggplot2 (R), or Prism. |

Troubleshooting Guides & FAQs

Q1: In the context of AlphaFold2 versus DockQ research, why does my AlphaFold-Multimer prediction show high pTM or ipTM confidence scores but fail to correlate with a good DockQ score upon experimental validation?

A1: High pTM (predicted Template Modeling) or ipTM (interface pTM) scores from AlphaFold-Multimer indicate confidence in the overall complex fold and interface, but not necessarily in the precise atomic-level interface geometry. DockQ scores specifically measure the quality of the interface (fNat, iRMSD, LRMSD). A discrepancy can arise from:

- Inherent Limitations: AlphaFold-Multimer is trained on complexes from the PDB and may reproduce non-physiological interfaces (e.g., crystal contacts) present in that data.

- Flexible Regions: Disordered loops or linker regions at the interface, not well-resolved in training data, can be modeled with high confidence but incorrect conformation.

- Solution Conditions: The prediction is static and does not account for solution dynamics, pH, or co-factors present in your experiment.

- Troubleshooting Step: Run the prediction multiple times with different random seeds. Assess the predicted aligned error (PAE) at the interface—a high PAE indicates low confidence in relative domain positioning despite a high ipTM.
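The interface PAE check in the troubleshooting step can be sketched as follows. This is a minimal example that assumes a two-chain complex with chain A listed first, and that the PAE matrix has already been loaded from the prediction's JSON output into a list of lists (the JSON key names differ between pipelines, so loading is left out). The function name is hypothetical.

```python
def mean_interchain_pae(pae, len_a):
    """Mean PAE (in Å) over residue pairs that span the two chains.

    pae   : square list of lists, pae[i][j] = predicted aligned error
    len_a : number of residues in chain A; chain B is the remainder
    """
    n = len(pae)
    if not 0 < len_a < n:
        raise ValueError("len_a must split the matrix into two non-empty chains")
    total, count = 0.0, 0
    for i in range(n):
        for j in range(n):
            # Keep only off-diagonal blocks: one residue in A, the other in B.
            if (i < len_a) != (j < len_a):
                total += pae[i][j]
                count += 1
    return total / count

# Toy 3-residue example: chain A has 1 residue, chain B has 2.
toy_pae = [
    [0, 10, 20],
    [12, 0, 1],
    [18, 1, 0],
]
print(mean_interchain_pae(toy_pae, len_a=1))
```

Comparing this value across seeds gives a quick view of whether the relative chain placement is stable, independent of the within-chain PAE.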

Q2: When preparing input for a PPI prediction using a ColabFold notebook, what is the optimal strategy for defining the "pair_mode" and sequence pairing?

A2: This is critical for accurate modeling.

- For known interacting pairs: Use --pair-mode unpaired_paired. Provide the sequences in the same order in the input field, and additionally, create a copy where they are concatenated with a colon (e.g., sequenceA:sequenceB). This explicitly suggests the model should consider them as a pair.

- For screening: Use --pair-mode unpaired. Provide each sequence individually to allow all-vs-all combinations.

- For large complexes: Manually define the interaction graph using the --pair-list option to limit combinatorial explosion and focus on biologically relevant pairs.

- Protocol: For two chains A and B:

  - Input sequenceA and sequenceB on separate lines.

  - In a new line, input sequenceA:sequenceB.

  - Set the flag --pair-mode unpaired_paired.

Q3: My experimental validation (e.g., SPR, Y2H) contradicts the high-confidence PPI prediction. What are the primary sources of such false positives in computational prediction?

A3:

| Source of False Positive | Description | Mitigation Strategy |
|---|---|---|
| Training Set Bias | Over-representation of certain protein families (e.g., antibodies, enzymes) in PDB leads to overconfident modeling of similar folds. | Check the MSA coverage. Low diversity may indicate a shallow evolutionary history, making the model less reliable. |
| Static Prediction | The model outputs a single, low-energy conformation, missing the dynamics of binding (e.g., conformational selection). | Use the --num-recycle flag (e.g., set to 12 or 20) to allow more iterative refinement. Analyze all 5 models, not just model 1. |
| Missing Components | The interaction may require a non-protein ligand, metal ion, or post-translational modification. | AlphaFold-Multimer models protein chains only: add peptide partners as a separate chain, and use tools like AlphaFill for homology-based transplant of small-molecule ligands and ions. |

Experimental Protocol: Validating a Computational PPI Prediction

This protocol outlines a standard workflow for experimentally testing a computationally predicted PPI, framed within a thesis correlating AlphaFold confidence metrics with DockQ accuracy.

1. Computational Prediction Phase:

- Tool: ColabFold (AlphaFold-Multimer v2.3.2).

- Input: FASTA sequences of the two putative interacting proteins.

- Parameters: --pair-mode unpaired_paired, --num-recycle 12, --num-models 5, --rank ptm.

- Output Analysis: Record the ipTM, pTM, and interface PAE. Visually inspect the predicted interface in PyMOL/ChimeraX. DockQ requires a reference structure, so compute it with the DockQ script at this stage only if an experimental structure of the complex already exists; otherwise defer DockQ scoring to the structural validation phase.

2. In Vitro Validation Phase (Surface Plasmon Resonance - SPR):

- Protein Production: Express and purify both proteins, with one (the ligand) containing a purification tag (e.g., His6).

- Immobilization: Dilute the ligand protein to 10 µg/mL in sodium acetate buffer (pH 4.5-5.5). Inject over an EDC/NHS-activated CM5 chip to achieve ~5000 RU response. Quench with ethanolamine.

- Kinetic Analysis: Dilute the analyte protein in running buffer (HBS-EP+) across a concentration series (e.g., 0.5 nM to 1 µM). Inject over the ligand and reference surfaces for 120s association, followed by 300s dissociation. Regenerate the surface with 10 mM glycine-HCl (pH 2.0).

- Data Processing: Double-reference the sensorgrams (reference surface & zero concentration). Fit the data to a 1:1 Langmuir binding model to derive the association (ka) and dissociation (kd) rate constants, and the equilibrium dissociation constant (KD = kd/ka).
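The relationships behind the 1:1 Langmuir fit can be written out as a short sketch. It only evaluates the standard model equations (it does not perform the fit), and the function names and example rate constants are illustrative assumptions rather than values from this protocol.

```python
import math

def equilibrium_kd(ka, kd):
    """KD in M from ka in 1/(M*s) and kd in 1/s (KD = kd / ka)."""
    return kd / ka

def association_response(t, conc, ka, kd, rmax):
    """Response (RU) at time t (s) during association, starting from an empty surface.

    R(t) = Req * (1 - exp(-(ka*C + kd) * t)),  Req = Rmax * C / (C + KD)
    """
    kobs = ka * conc + kd
    req = rmax * conc / (conc + kd / ka)
    return req * (1.0 - math.exp(-kobs * t))

def dissociation_response(t, r0, kd):
    """Response (RU) at time t (s) after analyte injection stops, decaying from r0."""
    return r0 * math.exp(-kd * t)

# Illustrative constants only: ka = 1e5 1/(M*s), kd = 1e-3 1/s.
ka, kd = 1e5, 1e-3
print(f"KD = {equilibrium_kd(ka, kd):.2e} M")
r_end = association_response(120, 100e-9, ka, kd, rmax=100.0)
print(f"Response after 120 s association at 100 nM: {r_end:.1f} RU")
print(f"Response after 300 s dissociation: {dissociation_response(300, r_end, kd):.1f} RU")
```

Simulating curves this way before the experiment helps check whether the planned 120 s association and 300 s dissociation windows are long enough to resolve the expected kinetics.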

3. Structural Validation Phase (Comparative Model):

- If an experimental structure is obtained (e.g., via X-ray crystallography), calculate the experimental DockQ score by comparing the computational prediction to the experimental structure.

- Thesis Correlation: Plot ipTM/pTM vs. experimental DockQ for a series of tested PPIs to establish the correlation metric for your specific protein system.

Visualizations

Diagram 1: PPI Validation Workflow

Diagram 2: AlphaFold Confidence vs. DockQ Metrics Relationship

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in PPI Prediction/Validation |
|---|---|
| ColabFold | Cloud-based pipeline combining AlphaFold2/AlphaFold-Multimer with fast homology search (MMseqs2). Enables rapid PPI prediction without local GPU. |
| AlphaFold-Multimer Weights | Specialized neural network parameters trained on protein complexes, crucial for predicting interfaces (vs. monomer weights). |
| PyMOL / ChimeraX | Molecular visualization software for inspecting predicted interfaces, calculating clashes, and comparing models. |
| DockQ Software | Command-line tool for calculating the DockQ score, which quantifies the quality of a protein-protein docking model (combines FNat, iRMSD, LRMSD). |
| CM5 SPR Chip | Carboxymethylated dextran sensor chip for Surface Plasmon Resonance; standard for immobilizing protein ligands via amine coupling. |
| Anti-His Antibody Chip | SPR chip pre-immobilized with antibody to capture His-tagged proteins, allowing oriented immobilization and ligand reuse. |
| HBS-EP+ Buffer | Standard SPR running buffer (HEPES, NaCl, EDTA, surfactant); provides a stable, low-nonspecific binding background. |
| Size Exclusion Chromatography (SEC) Column | Essential for purifying monodisperse, properly folded proteins for experimental validation. |

Technical Support Center

Troubleshooting Guide & FAQs

Q1: My docking model has a high pLDDT (>90) but the DockQ score is poor (<0.23). Why does this happen and what should I do?

A: This discrepancy usually means the individual chains are well modeled (captured by pLDDT) but their relative orientation or interface is wrong (missed by pLDDT but captured by DockQ). pLDDT is a per-residue measure of local confidence, not of complex accuracy. First, check the ipTM (interface pTM) score from AlphaFold-Multimer, which is designed to assess interface confidence. A low ipTM (<0.5) with a high pLDDT is a red flag. Proceed by using alternative docking software (e.g., HADDOCK, ClusPro) to generate more poses, or consider integrating experimental data (e.g., cross-linking, mutagenesis) to guide the docking.

Q2: What is the minimum acceptable ipTM score for considering a predicted complex for further experimental validation?

A: Based on current benchmark studies, an ipTM score ≥ 0.6 generally indicates a model of medium or better quality (DockQ ≥ 0.49, the lower bound of the "medium" range). For critical drug discovery projects, a more conservative threshold of ipTM ≥ 0.7 (DockQ ~0.6, "medium" quality) is recommended to reduce false positives. See Table 1 for detailed correlations.

Q3: How should I combine pLDDT and ipTM scores when filtering models from AlphaFold-Multimer?

A: Apply a two-tier filter. First, assess overall model confidence: reject models where the average pLDDT across all chains is < 70. Second, apply an interface-specific filter: retain only models with an ipTM score ≥ 0.6. For the interface residues themselves (typically defined as residues within 10 Å of the other chain), a local pLDDT average of > 80 is desirable.
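The two-tier filter described in this answer can be expressed as a small helper. This is a sketch under the thresholds quoted in the answer (70, 0.6, 80); the function name, argument names, and the wording of the returned reasons are hypothetical.

```python
def passes_two_tier_filter(mean_plddt, iptm, interface_plddt=None,
                           min_plddt=70.0, min_iptm=0.6,
                           desirable_interface_plddt=80.0):
    """Return (keep, reason) for one AlphaFold-Multimer model.

    Tier 1 rejects on average pLDDT across all chains; tier 2 rejects on ipTM.
    Interface pLDDT is advisory only: it adds a warning but never rejects.
    """
    if mean_plddt < min_plddt:
        return False, "rejected: average pLDDT below cutoff"
    if iptm < min_iptm:
        return False, "rejected: ipTM below cutoff"
    if interface_plddt is not None and interface_plddt <= desirable_interface_plddt:
        return True, "kept: interface pLDDT below the desirable level"
    return True, "kept"

# Example calls with made-up scores.
print(passes_two_tier_filter(88.0, 0.74, interface_plddt=84.0))
print(passes_two_tier_filter(88.0, 0.52))
```

Treating interface pLDDT as a warning rather than a hard cutoff mirrors the wording of the answer, which calls > 80 "desirable" rather than required.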

Q4: My predicted model has good scores, but experimental SAXS data does not match. How to troubleshoot?

A: This suggests a possible error in the quaternary structure despite good per-chain and interface metrics. First, compute the theoretical SAXS profile from your model (using tools like CRYSOL or FoXS) and compare with experiment. If the fit is poor (χ² > 3), consider: 1) The model may represent one state in a dynamic ensemble. 2) There may be large, flexible regions not well-defined by pLDDT. Use the pLDDT per residue to identify low-confidence loops/termini (pLDDT < 70); removing or remodeling these flexible regions in silico may improve the SAXS fit.

Data Presentation: Confidence Score Correlations

Table 1: Empirical Correlation Benchmarks Between AlphaFold Scores and DockQ Accuracy

| AlphaFold Metric | Typical Score Range | Corresponding DockQ Range | Interpreted Model Quality | Suggested Action for Docking Projects |
|---|---|---|---|---|
| ipTM | ≥ 0.8 | 0.8 - 1.0 (High) | Correct, high accuracy. | Ideal for downstream work. |
| ipTM | 0.6 - 0.8 | 0.49 - 0.8 (Medium) | Mostly correct topology. | Suitable for hypothesis generation, guide mutagenesis. |
| ipTM | 0.4 - 0.6 | 0.23 - 0.49 (Acceptable) | Possibly incorrect interface. | Require orthogonal validation; use with caution. |
| ipTM | < 0.4 | < 0.23 (Incorrect) | Wrong quaternary structure. | Discard or use only monomeric units. |
| Avg. pLDDT (Interface) | ≥ 90 | Variable | High-confidence residues. | Reliable local geometry. |
| Avg. pLDDT (Interface) | 70 - 90 | Variable | Caution advised. | Check sidechain rotamers. |
| Avg. pLDDT (Interface) | < 70 | Variable (Often Low) | Low confidence. | Do not trust interface details. |

Table 2: Recommended Practical Cut-offs for Project Stages

| Project Stage | Minimum ipTM | Minimum Interface pLDDT (Avg.) | Rationale |
|---|---|---|---|
| Initial Screening & Triaging | 0.5 | 70 | Balances recall and precision for large-scale analysis. |
| Detailed Mechanistic Study | 0.65 | 80 | Prioritizes model reliability for interpreting interactions. |
| Structure-Based Drug Design | 0.7 | 85 | Conservative threshold critical for virtual screening. |
| "No Go" Threshold | < 0.4 | < 60 | Models below these are highly unreliable. |
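The cut-offs in Table 2 can be encoded as a lookup that reports the most demanding project stage a model qualifies for. This is a sketch: the function name is hypothetical, and it assumes that falling below either "No Go" value is enough to discard a model, which the table does not state explicitly.

```python
# (stage, minimum ipTM, minimum average interface pLDDT), most demanding first.
STAGE_CUTOFFS = [
    ("Structure-Based Drug Design", 0.70, 85.0),
    ("Detailed Mechanistic Study", 0.65, 80.0),
    ("Initial Screening & Triaging", 0.50, 70.0),
]

def most_demanding_stage(iptm, interface_plddt):
    """Return the strictest stage whose cut-offs are both met, "No Go", or None.

    Assumption: a model is "No Go" if EITHER score is below its floor.
    None means the model is above the floor but below the screening cut-offs.
    """
    if iptm < 0.4 or interface_plddt < 60.0:
        return "No Go"
    for stage, min_iptm, min_plddt in STAGE_CUTOFFS:
        if iptm >= min_iptm and interface_plddt >= min_plddt:
            return stage
    return None

# Example calls with made-up scores.
for scores in [(0.72, 88.0), (0.66, 82.0), (0.55, 75.0), (0.45, 65.0), (0.30, 90.0)]:
    print(scores, "->", most_demanding_stage(*scores))
```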

Experimental Protocols

Protocol 1: Validating AlphaFold-Multimer Predictions with DockQ

Objective: To quantitatively assess the accuracy of a predicted protein complex model against a known experimental reference structure.

Materials: Predicted complex model (in PDB format), experimental reference structure (PDB format), DockQ software (available from https://github.com/bjornwallner/DockQ/).

Method:

- Prepare Structures: Align the sequences of the predicted and reference structures. Ensure chains are ordered identically. Remove water molecules and heteroatoms.

- Run DockQ: Execute the command: python DockQ.py model.pdb reference.pdb (the model is given first, the reference second).

- Interpret Output: DockQ provides a single score between 0 and 1. Classify result:

- DockQ ≥ 0.8: High accuracy.

- 0.49 ≤ DockQ < 0.8: Medium accuracy.

- 0.23 ≤ DockQ < 0.49: Acceptable accuracy.

- DockQ < 0.23: Incorrect.

- Correlate with Confidence Scores: Record the model's ipTM and average interface pLDDT (extracted from AlphaFold's JSON output). Plot DockQ vs. ipTM to establish project-specific correlations.
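The score and the quality classes used in this protocol can be reproduced from the three DockQ components. The sketch below uses the published DockQ definition (the mean of Fnat and two scaled RMSD terms, with scaling constants 1.5 Å for iRMSD and 8.5 Å for LRMSD) together with the class boundaries listed above; the function names are hypothetical and this is not the DockQ program itself.

```python
def dockq_from_components(fnat, irmsd, lrmsd, d1=1.5, d2=8.5):
    """Combine Fnat (0-1), iRMSD (Å) and LRMSD (Å) into a DockQ score in [0, 1]."""
    def scaled(rmsd, d):
        # Maps an RMSD to (0, 1]: 1 for a perfect match, 0.5 when rmsd == d.
        return 1.0 / (1.0 + (rmsd / d) ** 2)
    return (fnat + scaled(irmsd, d1) + scaled(lrmsd, d2)) / 3.0

def classify_dockq(score):
    """Map a DockQ score to the quality classes used in this protocol."""
    if score >= 0.80:
        return "High"
    if score >= 0.49:
        return "Medium"
    if score >= 0.23:
        return "Acceptable"
    return "Incorrect"

# Example with made-up component values.
score = dockq_from_components(fnat=0.5, irmsd=1.5, lrmsd=8.5)
print(score, classify_dockq(score))
```

Keeping the components alongside the composite score is useful when plotting against ipTM, since a low DockQ can come from lost contacts (Fnat) or from a shifted partner (LRMSD).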

Protocol 2: Calculating Interface pLDDT from AlphaFold Output

Objective: To determine the average pLDDT specifically for residues at the protein-protein interface.

Materials: AlphaFold prediction result (including the *.pdb file and the *_scores.json file), BioPython library.

Method:

- Define Interface Residues: From the predicted PDB file, select all residues in Chain A that have at least one atom within 10Å of any atom in Chain B. Repeat for Chain B.

- Extract pLDDT Values: The pLDDT per residue is stored in the B-factor column of the PDB file and in the plddt array within the JSON file.

- Map and Calculate: For each interface residue identified in Step 1, extract its pLDDT value. Compute the arithmetic mean of all these values.
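The three steps of this protocol can be sketched without BioPython by reading the fixed-column ATOM records directly. This is a minimal illustration with several assumptions: pLDDT is taken from the B-factor column, insertion codes and alternate locations are ignored, the all-atom distance search is brute force (fine for small complexes, slow for large ones), and the function names are hypothetical.

```python
import math

def parse_atoms(pdb_text):
    """Return (chain, residue number, xyz, B-factor) tuples from ATOM records."""
    atoms = []
    for line in pdb_text.splitlines():
        if line.startswith("ATOM"):
            # Fixed PDB columns: chain 22, resSeq 23-26, x/y/z 31-54, B-factor 61-66.
            xyz = (float(line[30:38]), float(line[38:46]), float(line[46:54]))
            atoms.append((line[21], int(line[22:26]), xyz, float(line[60:66])))
    return atoms

def interface_plddt(pdb_text, chain_a="A", chain_b="B", cutoff=10.0):
    """Mean pLDDT over residues with any atom within `cutoff` Å of the other chain.

    Returns (mean pLDDT, sorted list of (chain, residue number)),
    or (None, []) if the chains are never within the cutoff.
    """
    atoms = parse_atoms(pdb_text)
    side_a = [a for a in atoms if a[0] == chain_a]
    side_b = [a for a in atoms if a[0] == chain_b]
    plddt = {}  # (chain, residue number) -> pLDDT, one entry per interface residue
    for ca, ra, xyz_a, b_a in side_a:
        for cb, rb, xyz_b, b_b in side_b:
            if math.dist(xyz_a, xyz_b) <= cutoff:
                plddt[(ca, ra)] = b_a
                plddt[(cb, rb)] = b_b
    if not plddt:
        return None, []
    return sum(plddt.values()) / len(plddt), sorted(plddt)
```

A typical call would read the model file and pass its text, for example `interface_plddt(open("ranked_0.pdb").read())`, where the file name follows the AlphaFold output mentioned earlier in this guide.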