Bayesian Optimization in Antibody Design: A New Frontier for Accelerated Therapeutic Discovery

Abstract

This article explores the transformative role of Bayesian optimization (BO) in computational antibody design, a field critical for developing new biologics. We first establish the foundational principles of BO and its necessity for navigating the vast combinatorial sequence space of antibodies. The discussion then progresses to cutting-edge methodological frameworks like AntBO and CloneBO, which integrate Gaussian processes and generative models for efficient in silico design. We critically examine key optimization challenges, including the integration of structural information and developability constraints, and present comparative validation studies that demonstrate significant performance improvements over traditional methods, such as discovering high-affinity binders in under 200 design cycles. This resource is tailored for researchers, scientists, and drug development professionals seeking to leverage machine learning for next-generation therapeutic antibody development.

The Antibody Design Challenge and Why Bayesian Optimization is the Answer

The Combinatorial Problem of Antibody Sequence Space

The combinatorial nature of antibody sequence space presents a fundamental challenge in computational immunology and therapeutic antibody design. Antibody diversity arises primarily from V(D)J recombination, the somatic recombination of V, D (heavy chain only), and J gene segments, with nucleotide additions and deletions at the junctions increasing diversity further [3]. This diversity is concentrated in the Complementarity Determining Regions (CDRs); the CDR3 of the heavy chain (CDRH3) shows the highest sequence variability and plays a dominant role in antigen-binding specificity [1] [2].

The combinatorial explosion of possible sequences creates a search space far too large to explore exhaustively. For a sequence of length n drawn from the 20 naturally occurring amino acids, there are 20^n possible sequences [1]. With CDRH3 lengths reaching up to 36 residues, sequences of the maximum length alone number 20^36, or roughly 6.9 × 10^46, far beyond the reach of exhaustive computational or experimental screening [1]. Binding-affinity oracles therefore cannot be queried exhaustively, whether computationally or experimentally, which necessitates sample-efficient optimization approaches [1].
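To make the scale concrete, the short sketch below evaluates 20^n for a few sequence lengths. The lengths 5 and 11 are arbitrary illustrative choices; only the upper limit of 36 residues comes from the text, and the function names are hypothetical.

```python
# Size of the sequence space for sequences built from the 20 natural amino acids.
N_AMINO_ACIDS = 20


def sequence_space_size(length: int) -> int:
    """Number of distinct sequences with exactly `length` residues."""
    return N_AMINO_ACIDS ** length


def cumulative_space_size(max_length: int) -> int:
    """Number of distinct sequences with 1..max_length residues."""
    return sum(N_AMINO_ACIDS ** n for n in range(1, max_length + 1))


# Illustrative lengths; 36 is the maximum CDRH3 length quoted in the text.
for n in (5, 11, 36):
    print(f"length {n:>2}: {sequence_space_size(n):.3e} sequences")
```

Python integers have arbitrary precision, so the counts are exact until they are formatted for display.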

Computational Framework: Bayesian Optimization for Antibody Design

Core Principles of AntBO

AntBO represents a combinatorial Bayesian optimization (BO) framework specifically designed for the in silico design of antigen-specific CDRH3 regions [1] [4]. This approach addresses the combinatorial challenge through several key innovations:

- Gaussian Processes (GPs): Utilized as a surrogate model to incorporate prior beliefs about the domain and guide the search in sequence space [1]

- Uncertainty Quantification: Enables the acquisition maximization step to optimally balance exploration and exploitation in the search space [1]

- CDRH3 Trust Region: Restricts the search to sequences with favorable developability scores to ensure therapeutic relevance [4]

- Sample Efficiency: Designed to find high-affinity sequences with a minimal number of calls to the binding-affinity oracle [1]

Performance Benchmarking

The following table summarizes the quantitative performance of AntBO compared to experimental data and other computational approaches:

Table 1: Performance Metrics of AntBO in Computational Experiments

| Metric | Performance | Comparative Baseline |
|---|---|---|
| Oracle Calls | <200 | Outperforms best of 6.9M experimental CDRH3s [1] |
| High-Affinity Discovery | 38 protein designs | Requires no domain knowledge [1] |
| Antigen Testing | 159 discretized antigens | Consistent outperformance across diverse targets [1] [4] |
| Developability | Favorable scores maintained | Incorporates biophysical constraints [1] |

Experimental Protocols and Methodologies

AntBO Implementation Protocol

Objective: To design high-affinity, developable CDRH3 sequences for specific antigens using combinatorial Bayesian optimization.

Materials:

- Absolut! software suite (binding affinity oracle)

- AntBO computational framework

- 159 antigen structures for benchmarking

- Developability scoring metrics

Procedure:

1. Initialization: Define the CDRH3 sequence space and trust region based on developability constraints

2. Surrogate Modeling: Implement Gaussian process to model the antibody-antigen binding landscape

3. Acquisition Function Optimization: Balance exploration and exploitation using upper confidence bound criteria

4. Oracle Query: Evaluate candidate sequences using Absolut! binding affinity simulation

5. Iterative Refinement: Update the surrogate model with new data points and repeat steps 3-4 for 200 iterations

6. Validation: Select top-performing sequences for in vitro testing

Technical Notes: The trust region is critical for maintaining favorable developability properties, including aggregation resistance, solubility, and stability [1]. The Absolut! framework provides an end-to-end simulation of antibody-antigen binding affinity using coarse-grained lattice representations while preserving eight levels of biological complexity present in experimental datasets [1].
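A trust region of this kind can be pictured as a membership test: a candidate must stay close to the current best sequence and pass developability checks. The sketch below is only an illustration. The specific criteria (net charge window, maximum run of identical residues, absence of an N-glycosylation sequon), the thresholds, and every function name are assumptions chosen for the example and are not taken from the source.

```python
import re

# Simplified charge model (assumption): K/R count +1, D/E count -1, pH ignored.
POSITIVE = set("KR")
NEGATIVE = set("DE")
# N-X-S/T sequon with X != proline (illustrative developability flag).
GLYCOSYLATION_MOTIF = re.compile(r"N[^P][ST]")


def net_charge(seq: str) -> int:
    return sum(aa in POSITIVE for aa in seq) - sum(aa in NEGATIVE for aa in seq)


def longest_run(seq: str) -> int:
    """Length of the longest stretch of identical consecutive residues."""
    best = run = 0
    prev = None
    for aa in seq:
        run = run + 1 if aa == prev else 1
        best = max(best, run)
        prev = aa
    return best


def hamming(a: str, b: str) -> int:
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    return sum(x != y for x, y in zip(a, b))


def in_trust_region(candidate, center, radius, max_abs_charge=2, max_run=5):
    """True if the candidate is near `center` and passes all hypothetical checks."""
    return (
        hamming(candidate, center) <= radius
        and abs(net_charge(candidate)) <= max_abs_charge
        and longest_run(candidate) <= max_run
        and GLYCOSYLATION_MOTIF.search(candidate) is None
    )


center = "ARDYYGSSYFD"  # made-up 11-residue sequence for illustration
print(in_trust_region("ARDYYGSSYFE", center, radius=2))
print(in_trust_region("ARDNGSSSYFD", center, radius=11))
```

Restricting acquisition maximization to sequences that pass such a test is one way to keep every proposed design inside the developable region rather than filtering after the fact.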

Antibody Repertoire Sequencing and Analysis

Objective: To characterize natural antibody repertoire architecture and understand sequence space organization.

Materials:

- Bulk B-cell RNA/DNA or single-cell suspensions

- 5' RACE or multiplex PCR reagents

- Unique Molecular Identifiers (UMIs)

- High-throughput sequencing platform (Illumina)

- Computational analysis tools (MixCR, ImmuneDB, DEAL)

Procedure:

Library Preparation:

- For bulk RNA: Use random hexamers or constant region primers for cDNA synthesis with UMIs

- For single-cell: Implement 5' scRNA-seq with V(D)J enrichment

- Amplify using multiplex PCR or 5' RACE protocols [2]

Sequencing:

- Perform high-throughput sequencing on Illumina platform (recommended depth: >100,000 reads/sample)

V(D)J Sequence Annotation:

- Preprocess raw FASTQ data (quality control, adapter trimming)

- Align to germline Ig reference sequences (IMGT database)

- Identify V, D, J gene segments and CDR3 boundaries

- Extract clonotypes based on nucleotide or amino acid sequences [2]

Network Analysis:

- Calculate pairwise sequence similarity using Levenshtein distance

- Construct similarity networks with nodes (sequences) and edges (similarity relationships)

- Analyze global network properties: interconnectedness, component size, centrality [3]

Technical Notes: DNA-input repertoire allows analysis of both productive and non-productive V(D)J rearrangements, while RNA-input reflects expressed antibody repertoire. UMIs are essential for accurate quantification and error correction [2]. For diversity estimation, the DEAL software utilizes base quality scores to compensate for technical errors in sequencing [5].
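The network analysis step can be sketched in plain Python: compute pairwise Levenshtein distances, connect sequences within a distance threshold, and extract connected components. The threshold of 1 edit and the toy CDR3 strings are assumptions for illustration, and the quadratic pairwise loop is only practical for small repertoires.

```python
from collections import defaultdict


def levenshtein(a: str, b: str) -> int:
    """Edit distance (insertions, deletions, substitutions) via dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def similarity_network(seqs, max_distance=1):
    """Adjacency sets linking sequences whose edit distance is <= max_distance."""
    edges = defaultdict(set)
    for i in range(len(seqs)):
        for j in range(i + 1, len(seqs)):
            if levenshtein(seqs[i], seqs[j]) <= max_distance:
                edges[i].add(j)
                edges[j].add(i)
    return edges


def connected_components(n_nodes, edges):
    """Group node indices into connected components with a depth-first search."""
    seen, components = set(), []
    for start in range(n_nodes):
        if start in seen:
            continue
        seen.add(start)
        stack, component = [start], []
        while stack:
            node = stack.pop()
            component.append(node)
            for neighbor in edges.get(node, ()):
                if neighbor not in seen:
                    seen.add(neighbor)
                    stack.append(neighbor)
        components.append(sorted(component))
    return components


cdr3s = ["CARDY", "CARDW", "CAKDW", "GGGGG"]  # toy sequences
network = similarity_network(cdr3s, max_distance=1)
print(connected_components(len(cdr3s), network))
```

Component sizes and node degrees from such a graph are the raw ingredients for the global network properties listed in the procedure.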

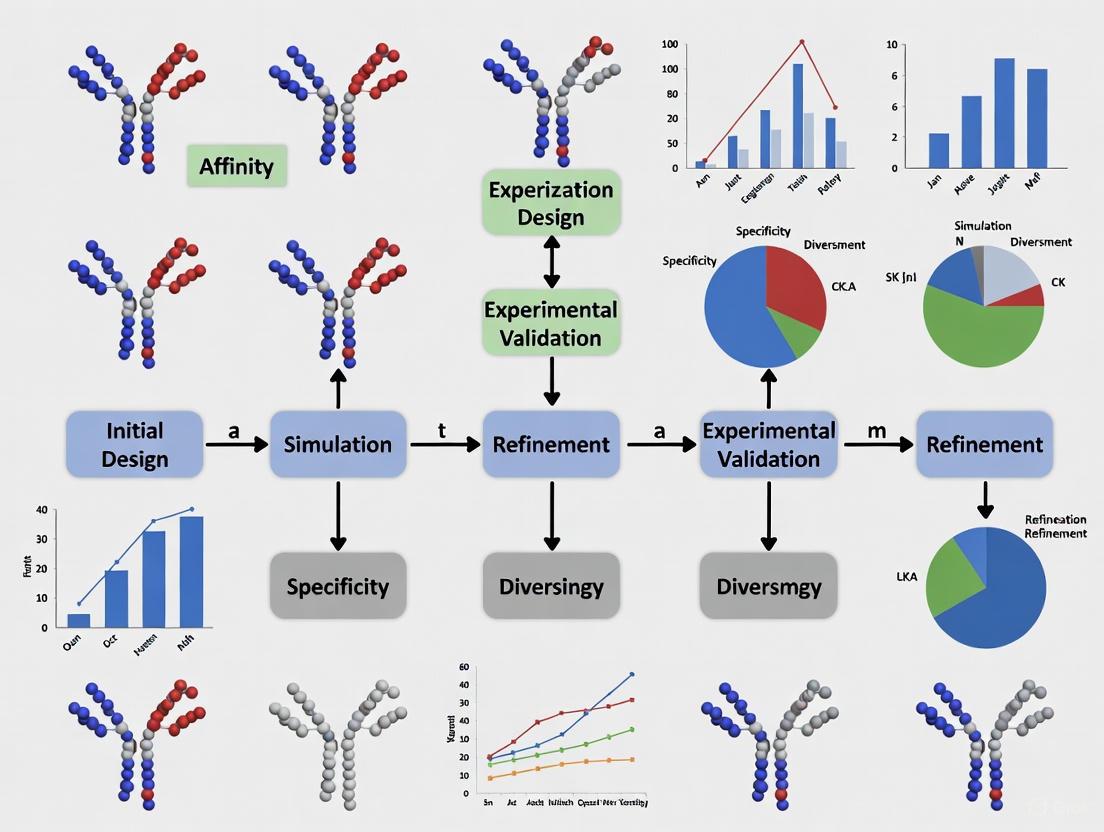

Visualization of Workflows and Relationships

AntBO Combinatorial Optimization Workflow

Diagram 1: AntBO Bayesian Optimization Workflow

Antibody Repertoire Architecture Analysis

Diagram 2: Repertoire Sequencing & Analysis Pipeline

Table 2: Essential Research Tools for Antibody Sequence Space Analysis

| Resource | Type | Primary Function | Application Context |
|---|---|---|---|
| Absolut! Software | Computational Oracle | In silico antibody-antigen binding simulation | Benchmarking designed CDRH3 sequences [1] |
| AntBO Framework | Optimization Tool | Combinatorial Bayesian optimization | Automated antibody design with developability constraints [1] [4] |
| IMGT Database | Reference Database | Germline Ig gene sequences | V(D)J sequence annotation and alignment [2] |
| DEAL (Diversity Estimator) | Bioinformatics Tool | Antibody library complexity estimation | Quantitative diversity assessment from NGS data [5] |
| MixCR | Analysis Pipeline | Adaptive immunity repertoire analysis | V(D)J alignment and clonotype inference [2] |
| Unique Molecular Identifiers (UMIs) | Molecular Biology Reagent | Error correction and quantification | Accurate RNA molecule counting in repertoire sequencing [2] |
| 5' RACE Protocol | Laboratory Method | Unbiased V(D)J amplification | Library preparation without primer bias [2] |

Discussion and Future Perspectives

The integration of Bayesian optimization with antibody design represents a paradigm shift in addressing the combinatorial challenge of antibody sequence space. The demonstrated efficiency of AntBO in discovering high-affinity binders in under 200 oracle calls, outperforming millions of experimentally obtained sequences, highlights the transformative potential of this approach [1]. This methodology effectively navigates the vast combinatorial space while incorporating critical developability constraints early in the design process.

Future directions in this field include the incorporation of more sophisticated machine learning architectures, expansion to target multiple antibody regions beyond CDRH3, and improved accuracy in affinity prediction for high-affinity binders [6]. Additionally, the integration of structural information with sequence-based models may further enhance prediction accuracy, though current methods like AntBO demonstrate that significant progress can be achieved using sequence information alone [7]. As these computational methods mature, they promise to significantly accelerate therapeutic antibody development while reducing experimental costs.

Bayesian Optimization (BO) is a powerful, sample-efficient framework for optimizing expensive black-box functions where the functional form of the objective is unknown and direct evaluations are costly [8]. This approach has demonstrated remarkable success across diverse domains, from tuning the hyperparameters of AlphaGo to accelerating materials discovery and designing therapeutic antibodies [9] [8] [10]. The fundamental challenge BO addresses is the exploration-exploitation dilemma—balancing the need to learn about unknown regions of the search space (exploration) with the desire to concentrate on areas already known to be promising (exploitation) [8].

In antibody design, researchers face precisely this type of optimization problem: they must iteratively mutate antibody sequences to improve binding affinity and stability, where each experimental evaluation requires substantial laboratory resources and time [9]. The sequence-function relationship constitutes a complex black box, making BO particularly well-suited for guiding this optimization process efficiently.

Core Mathematical Framework

BO operates through an iterative process that combines a probabilistic surrogate model with an acquisition function to guide the selection of future evaluation points [8].

The General Optimization Problem

Formally, BO aims to find the global optimum of an unknown objective function (f(x)): [ x^* = \arg\max_{x \in \mathcal{X}} f(x) ] where (x) represents the design parameters (e.g., antibody sequence features), (\mathcal{X}) is the design space, and (f(x)) is expensive to evaluate (e.g., requiring wet lab experiments) [11].

Bayes' Theorem and Sequential Learning

The process is "Bayesian" because it maintains a posterior distribution over the objective function that updates as new observations are collected. According to Bayes' theorem: [ P(f \mid D_{1:t}) \propto P(D_{1:t} \mid f) \, P(f) ] where (D_{1:t} = \{(x_1, y_1), \ldots, (x_t, y_t)\}) represents the observations collected up to iteration (t), (P(f)) is the prior over the objective function, (P(D_{1:t} \mid f)) is the likelihood, and (P(f \mid D_{1:t})) is the posterior distribution [12] [8]. This sequential updating process allows BO to incorporate information from each new experiment to refine its understanding of the objective landscape.

Key Components of Bayesian Optimization

Surrogate Models

The surrogate model approximates the expensive black-box function using a probabilistic framework. The most common choice is Gaussian Process (GP) regression, which defines a probability distribution over possible functions that fit the observed data [8]. A GP is fully specified by its mean function (\mu(x)) and covariance kernel (k(x, x')): [ f(x) \sim \mathcal{GP}(\mu(x), k(x, x')) ] This framework provides both predictions and uncertainty estimates at unobserved points, which is crucial for guiding the optimization process [8]. For problems involving both qualitative and quantitative variables, such as material selection combined with parameter tuning, specialized approaches like Latent-Variable Gaussian Processes (LVGP) map qualitative factors to underlying numerical latent variables to enable effective modeling [13].

Acquisition Functions

The acquisition function (\alpha(x)) uses the surrogate model's predictions to quantify the utility of evaluating a candidate point (x), balancing exploration and exploitation. Common acquisition functions include:

- Expected Improvement (EI): Measures the expected improvement over the current best observation (f^*) [8]: [ \text{EI}(x) = \mathbb{E}[\max(f(x) - f^*, 0)] ]

- Probability of Improvement (PI): Captures the probability that a point will improve upon (f^*)

- Upper Confidence Bound (UCB): Uses an optimism-based strategy: (\text{UCB}(x) = \mu(x) + \kappa\sigma(x)), where (\kappa) controls the exploration-exploitation balance [8]

Table 1: Comparison of Common Acquisition Functions

| Acquisition Function | Mathematical Form | Strengths | Weaknesses |
|---|---|---|---|
| Expected Improvement (EI) | (\mathbb{E}[\max(f(x) - f^*, 0)]) | Well-balanced performance, analytic form | Can be overly greedy |
| Probability of Improvement (PI) | (P(f(x) \geq f^*)) | Simple interpretation | Prone to excessive exploitation |
| Upper Confidence Bound (UCB) | (\mu(x) + \kappa\sigma(x)) | Explicit exploration parameter | Parameter tuning required |
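When the surrogate's prediction at a point is Gaussian with mean (\mu(x)) and standard deviation (\sigma(x)), all three acquisition functions have closed forms. The sketch below uses the standard closed-form expressions for a maximization problem; the function names and the example numbers are illustrative assumptions.

```python
import math


def norm_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu: float, sigma: float, f_best: float) -> float:
    """E[max(f(x) - f_best, 0)] for f(x) ~ N(mu, sigma^2)."""
    if sigma <= 0.0:  # no uncertainty: improvement is deterministic
        return max(mu - f_best, 0.0)
    z = (mu - f_best) / sigma
    return (mu - f_best) * norm_cdf(z) + sigma * norm_pdf(z)


def probability_of_improvement(mu: float, sigma: float, f_best: float) -> float:
    """P(f(x) >= f_best) for f(x) ~ N(mu, sigma^2)."""
    if sigma <= 0.0:
        return 1.0 if mu >= f_best else 0.0
    return norm_cdf((mu - f_best) / sigma)


def upper_confidence_bound(mu: float, sigma: float, kappa: float = 2.0) -> float:
    return mu + kappa * sigma


# Two hypothetical candidates: a confident near-miss and an uncertain long shot.
f_best = 1.0
for name, mu, sigma in [("confident", 0.9, 0.05), ("uncertain", 0.6, 0.4)]:
    print(name,
          expected_improvement(mu, sigma, f_best),
          probability_of_improvement(mu, sigma, f_best),
          upper_confidence_bound(mu, sigma))
```

Comparing the two candidates shows how each criterion weighs predicted mean against uncertainty, which is the trade-off summarized in the table.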

The BO Algorithm

The complete BO procedure follows these steps:

- Initialize with a small set of observations (using random sampling or design of experiments)

- Repeat until the budget is exhausted:

  - Update the surrogate model using all available data

  - Optimize the acquisition function to select the next evaluation point (x_{t+1})

  - Evaluate (f(x_{t+1})) (e.g., run an experiment) and record (y_{t+1})

  - Augment the data: (D_{1:t+1} = D_{1:t} \cup \{(x_{t+1}, y_{t+1})\})

- Return the best observed solution
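The loop structure can be sketched in a few lines of Python. To keep the example dependency-free, it deliberately swaps the Gaussian process for a crude stand-in surrogate (mean taken from the nearest observed point, uncertainty taken as the distance to it); that surrogate, the toy objective, and all names are assumptions for illustration, not a recommended model.

```python
import random


def nearest_neighbor_surrogate(observations):
    """Stand-in surrogate (NOT a GP): mean = nearest observed value,
    uncertainty = distance to the nearest observed point."""
    def predict(x):
        nearest_x, nearest_y = min(observations, key=lambda obs: abs(obs[0] - x))
        return nearest_y, abs(nearest_x - x)
    return predict


def ucb(mean, std, kappa=2.0):
    return mean + kappa * std


def bayes_opt(objective, candidates, n_init=3, budget=15, seed=0):
    rng = random.Random(seed)
    # Initialize with a few randomly chosen evaluations.
    observations = [(x, objective(x)) for x in rng.sample(candidates, n_init)]
    while len(observations) < budget:
        predict = nearest_neighbor_surrogate(observations)   # update surrogate
        evaluated = {x for x, _ in observations}
        pool = [x for x in candidates if x not in evaluated]
        x_next = max(pool, key=lambda x: ucb(*predict(x)))   # optimize acquisition
        observations.append((x_next, objective(x_next)))     # evaluate and augment
    best = max(observations, key=lambda obs: obs[1])          # best observed solution
    return best, observations


grid = [i / 100 for i in range(101)]  # toy 1-D design space
best, history = bayes_opt(lambda x: -(x - 0.7) ** 2, grid)
print("best point and value:", best)
```

Replacing `nearest_neighbor_surrogate` with a fitted Gaussian process and `ucb` with any other acquisition function leaves the loop itself unchanged.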

The following diagram illustrates this iterative workflow:

Advanced Bayesian Optimization Frameworks

Mixed-Variable Optimization with LVGP

Many real-world problems, including antibody design, involve both qualitative and quantitative variables. The Latent-Variable Gaussian Process (LVGP) approach represents qualitative factors by mapping them to underlying numerical latent variables through a low-dimensional embedding [13]. This provides a physically justified representation that captures complex correlations between qualitative levels and enables effective optimization in mixed variable spaces [13].

Multi-Objective Bayesian Optimization

Therapeutic antibody optimization requires balancing multiple competing objectives simultaneously, such as binding affinity, stability, specificity, and low immunogenicity [14] [10]. Multi-objective BO extends the basic framework to identify Pareto-optimal solutions—configurations where no objective can be improved without worsening another [14]. This approach was successfully demonstrated in biologics formulation development, where BO concurrently optimized three key biophysical properties of a monoclonal antibody (melting temperature, diffusion interaction parameter, and stability against air-water interfaces) in just 33 experiments [14].
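Pareto optimality is easy to state in code. The sketch below filters a set of candidates down to the non-dominated ones, assuming every objective is to be maximized; the two-objective scores are made-up numbers used only for illustration.

```python
def dominates(a, b):
    """True if `a` is at least as good as `b` in every objective and strictly
    better in at least one (all objectives maximized)."""
    return (all(x >= y for x, y in zip(a, b))
            and any(x > y for x, y in zip(a, b)))


def pareto_front(points):
    """Return the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (affinity score, stability score) pairs for five variants.
scores = [(1, 5), (2, 4), (3, 3), (2, 2), (1, 1)]
print(pareto_front(scores))
```

Objectives that should be minimized can be negated before filtering so that the same maximization convention applies.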

Integration with Domain Knowledge and Reasoning

Recent advances integrate large language models (LLMs) with BO to incorporate domain knowledge and scientific reasoning. In the "Reasoning BO" framework, LLMs generate scientific hypotheses and assign confidence scores to candidate points, while knowledge graphs store and retrieve domain expertise throughout optimization [15]. This approach demonstrated remarkable performance in chemical reaction yield optimization, increasing yield to 94.39% compared to 76.60% for traditional BO [15].

Bayesian Optimization for Antibody Design: Protocols and Applications

Clone-Informed Bayesian Optimization (CloneBO)

CloneBO is a specialized BO procedure that incorporates knowledge of how the immune system naturally optimizes antibodies through clonal evolution [9]. The methodology involves:

Protocol: CloneBO for Antibody Optimization

Training Data Preparation

- Collect hundreds of thousands of clonal families (sets of related, evolving antibody sequences) from immune repertoire data

- Annotate sequences with experimental measurements of binding affinity and stability

Generative Model Training

- Train a large language model (CloneLM) on the clonal family data to learn the natural evolutionary patterns of antibody optimization

- The model learns which mutations are most likely to improve antibody function within biological constraints

Bayesian Optimization Loop

- Use CloneLM to design candidate sequences with mutations informed by natural immune optimization

- Employ a twisted sequential Monte Carlo procedure to guide designs toward regions with high predicted performance

- Iteratively select sequences for experimental validation based on both model predictions and uncertainty

- Update the model with new experimental measurements

This approach has demonstrated substantial efficiency improvements over previous methods in both computational experiments and wet lab validations, producing stronger and more stable antibody binders [9].

Multi-Objective Antibody Optimization with AbBFN2

The AbBFN2 framework, built on Bayesian Flow Networks, provides a unified approach for multi-property antibody optimization [10]. The system enables simultaneous optimization of multiple antibody properties through conditional generation:

Protocol: Multi-Objective Antibody Optimization with AbBFN2

Task Specification

- Define target properties (e.g., humanization score, developability, specificity)

- Set constraints and thresholds for each property

- Input initial antibody sequence(s) for optimization

Conditional Generation and Evaluation

- Sample candidate sequences conditioned on desired property values

- Evaluate candidates using the model's internal property predictors

- Select promising variants that satisfy multiple objectives simultaneously

Experimental Validation and Iteration

- Synthesize and test top candidates experimentally

- Feed results back into model for continued refinement

- Repeat until desired property profile is achieved

In validation studies, AbBFN2 successfully optimized 63 out of 91 non-human antibody sequences for both human-likeness and developability within just 2.5 hours—a task that traditionally requires weeks to months per sequence [10].

Table 2: Performance Comparison of Bayesian Optimization Methods in Biological Applications

| Application Domain | BO Method | Performance Metrics | Comparison to Alternatives |
|---|---|---|---|
| Antibody Design | CloneBO [9] | Substantial efficiency improvement in designing strong, stable binders | Outperformed previous methods in realistic in silico and in vitro experiments |
| Biologics Formulation | Multi-objective BO [14] | Identified optimal formulations in 33 experiments | Accounted for complex trade-offs between conflicting properties |
| Assay Development | Cloud-based BO [16] | Found optimal conditions testing 21 vs 294 conditions (7x cost reduction) | Dramatically reduced experimental burden compared to brute-force approach |
| Chemical Synthesis | Reasoning BO [15] | Achieved 94.39% yield vs 76.60% for traditional BO | Demonstrated superior initialization and continuous optimization |

Experimental Protocol: Bayesian Optimization for Assay Development

The National Center for Advancing Translational Sciences (NCATS) developed a cross-platform, cloud-based BO system for biological assay optimization [16]. The detailed protocol includes:

Materials and Reagents

- Assay components (enzymes, substrates, buffers)

- Liquid handling robotics or automated systems

- Plate readers or other detection instrumentation

- Cloud computing infrastructure for BO algorithm

Procedure

Initial Experimental Design

- Define parameter ranges (concentrations, pH, temperature, incubation times)

- Establish objective function (e.g., signal-to-noise ratio, Z'-factor)

- Generate initial design (10-20 points) using Latin Hypercube Sampling

Automated Optimization Loop

- Prepare assay plates according to current candidate conditions

- Run assay and measure outcomes

- Upload results to cloud BO system

- Allow algorithm to suggest next batch of conditions (typically 5-20 conditions)

- Repeat until convergence or budget exhaustion

Validation and Verification

- Confirm optimal conditions in replicate experiments

- Compare performance to previous standard conditions

This approach achieved a sevenfold reduction in costs and experimental runtime compared to brute-force optimization while being controlled remotely through a secure connection [16].
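The initial design step of the procedure relies on Latin Hypercube Sampling, which places exactly one point in each of n equal-width strata along every parameter axis. A minimal standard-library sketch follows; the parameter ranges (a pH window and a temperature window) are hypothetical, and production workflows would more likely use an established implementation.

```python
import random


def latin_hypercube(n_samples, bounds, seed=None):
    """Draw n_samples points so each dimension has one point per stratum.

    bounds: list of (low, high) tuples, one per parameter.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        # One uniform draw inside each of the n equal-width strata of [0, 1).
        unit = [(i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(unit)  # decouple the strata ordering across dimensions
        columns.append([low + u * (high - low) for u in unit])
    return [tuple(col[i] for col in columns) for i in range(n_samples)]


# Hypothetical ranges: pH 6.0-8.0 and incubation temperature 20-40 degrees C.
design = latin_hypercube(10, [(6.0, 8.0), (20.0, 40.0)], seed=1)
for ph, temperature in design:
    print(f"pH {ph:.2f}, {temperature:.1f} C")
```

Compared with independent uniform sampling, this guarantees that every slice of each parameter range is represented in the initial batch.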

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Key Research Reagent Solutions for Bayesian Optimization in Antibody Design

| Reagent/Material | Function in BO Workflow | Application Notes |
|---|---|---|
| High-Throughput Screening Assays | Enable parallel evaluation of multiple candidate conditions | Critical for efficient data generation; miniaturization reduces reagent costs [16] |
| Antibody Sequence Libraries | Provide starting points and training data for surrogate models | Clonal families offer evolutionarily-informed search space [9] |
| Protein Stability Assays | Measure biophysical properties for multi-objective optimization | Include thermal shift, aggregation propensity, and viscosity measurements [14] |
| Binding Affinity Measurements | Quantify target engagement strength | Surface plasmon resonance (SPR) or bio-layer interferometry provide quantitative data |
| Cloud Computing Infrastructure | Host BO algorithms and surrogate models | Enable remote access and collaboration across research teams [16] |
| Automated Liquid Handling Systems | Implement candidate conditions suggested by BO | Essential for reproducible high-throughput experimentation [16] |
| Surrogate Model Software | Implement Gaussian Processes or Bayesian neural networks | Options include GPyTorch, BoTorch, or custom implementations [8] |


Workflow Visualization: Integrated Bayesian Optimization for Antibody Design

The complete integration of BO into the antibody design process involves multiple interconnected components, as illustrated in the following comprehensive workflow:

Bayesian Optimization represents a paradigm shift in how researchers approach expensive black-box optimization problems in antibody design and broader immunology research. By intelligently balancing exploration and exploitation through probabilistic modeling and acquisition functions, BO dramatically reduces the experimental burden required to discover improved therapeutic candidates. The integration of biological prior knowledge through clonal evolutionary information, combined with multi-objective optimization frameworks and emerging reasoning capabilities, positions BO as an indispensable tool in the modern computational immunologist's toolkit. As these methods continue to evolve and become more accessible, they promise to accelerate the discovery and development of novel antibody-based therapeutics with enhanced properties and reduced immunogenicity.

The discovery and optimization of therapeutic antibodies represent a complex multidimensional challenge, requiring the simultaneous improvement of binding affinity, specificity, stability, and manufacturability. Bayesian Optimization (BO) has emerged as a powerful machine learning framework to navigate this vast combinatorial sequence space efficiently, transforming antibody development from a largely empirical process to a rational, data-driven endeavor [17]. This approach is particularly valuable given the enormous landscape of possible antibody sequences, estimated between 10 billion and 100 billion for germline antibodies alone, making exhaustive experimental screening practically impossible [10].

The core BO framework for antibody design operates through an iterative feedback loop. It begins with an initial set of experimentally characterized antibody variants, uses this data to build a surrogate model that predicts antibody properties, and then employs an acquisition function to intelligently select the next most promising variants for experimental testing [18] [19]. This process strategically balances the exploration of novel sequence regions with the exploitation of known promising areas, dramatically reducing the experimental burden required to identify optimized candidates. For instance, researchers have demonstrated the identification of highly optimized antibody formulations in just 33 experiments, a significant reduction compared to traditional methods [20].

This protocol details the implementation of BO for antibody engineering, focusing on the three fundamental components that enable its sample efficiency: surrogate models that learn from available data, acquisition functions that guide experimental selection, and oracle functions that provide the crucial experimental validation. We provide application notes, experimental protocols, and implementation guidelines to equip researchers with practical tools for leveraging BO in antibody discovery and optimization campaigns.

Bayesian Optimization Workflow: From Sequence to Candidate

The typical BO workflow for antibody design follows a structured, iterative process that integrates computational predictions with experimental validation. The diagram below illustrates this cyclic workflow, highlighting the roles of the surrogate model, acquisition function, and experimental oracle.

Core Component 1: Surrogate Models

Gaussian Process Fundamentals

Surrogate models form the predictive heart of the Bayesian optimization framework, approximating the expensive-to-evaluate true function that maps antibody sequences or formulations to their functional properties. The most commonly employed surrogate in BO is the Gaussian Process (GP), a probabilistic model that defines a probability distribution over possible functions that fit the observed data [18] [8]. A GP is particularly well-suited for biological applications like antibody design due to its flexibility in modeling complex nonlinear relationships, ability to quantify prediction uncertainty, and efficiency with small datasets commonly encountered in early-stage research [19].

A Gaussian Process is fully specified by a mean function (m(\boldsymbol{x})) and a covariance kernel (K(\boldsymbol{x}, \boldsymbol{x}')). Conditioned on (n) observations (\mathcal{D}_n), the posterior at test points (\boldsymbol{X}_*) is Gaussian:

[ f(\boldsymbol{X}_*) \mid \mathcal{D}_n, \boldsymbol{X}_* \sim \mathcal{N} \left(\mu_n (\boldsymbol{X}_*), \sigma^2_n (\boldsymbol{X}_*) \right) ]

[ \mu_n (\boldsymbol{X}_*) = K(\boldsymbol{X}_*, \boldsymbol{X}_n) \left[ K(\boldsymbol{X}_n, \boldsymbol{X}_n) + \sigma^2 I \right]^{-1} (\boldsymbol{y} - m (\boldsymbol{X}_n)) + m (\boldsymbol{X}_*) ]

[ \sigma^2_n (\boldsymbol{X}_*) = K (\boldsymbol{X}_*, \boldsymbol{X}_*) - K(\boldsymbol{X}_*, \boldsymbol{X}_n) \left[ K(\boldsymbol{X}_n, \boldsymbol{X}_n) + \sigma^2 I \right]^{-1} K(\boldsymbol{X}_n, \boldsymbol{X}_*) ]

where (\boldsymbol{X}_n) represents the training inputs (antibody sequences or formulations), (\boldsymbol{y}) are the observed outputs (e.g., binding affinity, stability), (\boldsymbol{X}_*) are the test points for prediction, and (\sigma^2) is the observation-noise variance [18].
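The posterior mean and variance above translate almost line for line into NumPy. The sketch below assumes a constant mean function and uses a squared-exponential kernel purely for brevity; the kernel choice, default hyperparameters, and function names are assumptions rather than details from the source. A Cholesky factorization replaces the explicit matrix inverse for numerical stability.

```python
import numpy as np


def sq_exp_kernel(A, B, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between the rows of A and the rows of B."""
    sq_dist = (np.sum(A**2, axis=1)[:, None]
               + np.sum(B**2, axis=1)[None, :]
               - 2.0 * A @ B.T)
    return variance * np.exp(-0.5 * np.clip(sq_dist, 0.0, None) / length_scale**2)


def gp_posterior(X_n, y, X_star, kernel=sq_exp_kernel, noise_var=1e-2, mean_const=0.0):
    """Posterior mean and variance at X_star given training data (X_n, y)."""
    y = np.asarray(y, dtype=float)
    K_nn = kernel(X_n, X_n) + noise_var * np.eye(len(X_n))  # K(X_n, X_n) + sigma^2 I
    K_sn = kernel(X_star, X_n)                               # K(X_*, X_n)
    K_ss = kernel(X_star, X_star)                            # K(X_*, X_*)
    L = np.linalg.cholesky(K_nn)
    # alpha = [K + sigma^2 I]^{-1} (y - m(X_n)), via two triangular solves
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y - mean_const))
    mu = K_sn @ alpha + mean_const
    V = np.linalg.solve(L, K_sn.T)
    var = np.diag(K_ss - V.T @ V)
    return mu, var


X_train = np.array([[0.0], [1.0], [2.0]])   # toy 1-D feature vectors
y_train = np.array([0.2, 0.9, 0.4])         # toy property measurements
mu, var = gp_posterior(X_train, y_train, np.array([[0.5], [3.0]]))
print("posterior mean:", mu)
print("posterior variance:", var)
```

The variance returned here describes the latent function; adding `noise_var` to it would give the predictive variance of a new noisy measurement.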

Implementation Protocol for Surrogate Modeling

Materials and Reagents:

- Experimentally characterized antibody variant dataset (sequence and property measurements)

- Computational resources for model training (CPU/GPU)

- Bayesian optimization software platform (e.g., ProcessOptimizer, BoTorch, NUBO)

Procedure:

Data Preparation and Feature Encoding:

- Encode antibody sequences as numerical feature vectors using appropriate representations (e.g., one-hot encoding, physicochemical properties, or embeddings from protein language models).

- Standardize input features to zero mean and unit variance to improve model convergence.

- Standardize objective values if they vary across scales, especially in multi-objective optimization.
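The encoding and standardization steps above can be sketched with the standard library alone. One-hot encoding is shown because it is the simplest of the representations listed; the example sequences and affinity values are made up, and the helper names are hypothetical.

```python
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}


def one_hot(seq: str) -> list:
    """Flattened one-hot encoding: 20 entries per residue position."""
    vec = [0.0] * (len(seq) * len(AMINO_ACIDS))
    for pos, aa in enumerate(seq):
        vec[pos * len(AMINO_ACIDS) + AA_INDEX[aa]] = 1.0
    return vec


def standardize(values):
    """Rescale values to zero mean and unit (population) variance.

    Returns the scaled values plus the mean and standard deviation so that
    model predictions can be mapped back to the original units.
    """
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = var ** 0.5 or 1.0  # guard against constant inputs
    return [(v - mean) / std for v in values], mean, std


sequences = ["CARDY", "CARDW", "CAKDW"]   # toy equal-length sequences
affinities = [7.1, 8.4, 6.9]              # made-up measurements
X = [one_hot(s) for s in sequences]
y, y_mean, y_std = standardize(affinities)
print(len(X[0]), y)
```

Keeping `y_mean` and `y_std` alongside the data matters in practice, because acquisition values and reported predictions are otherwise expressed in standardized units.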

Model Initialization:

- Select a Matern 5/2 kernel as a flexible default for modeling complex antibody property landscapes: [ k_{\text{Matern 5/2}}(r) = \sigma_f^2 \left(1 + \frac{\sqrt{5}r}{\ell} + \frac{5r^2}{3\ell^2}\right) \exp\left(-\frac{\sqrt{5}r}{\ell}\right) ] where (r = \|\boldsymbol{x} - \boldsymbol{x}'\|), (\ell) is the length-scale, and (\sigma_f^2) is the output variance, written with a subscript to distinguish it from the noise variance (\sigma^2) in the GP equations [18].

- Initialize with a constant mean function if no prior knowledge is available.
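The Matern 5/2 kernel from the model initialization step is a one-line function of the distance between two feature vectors. The sketch below assumes Euclidean distance on numeric features and uses hypothetical parameter names (`length_scale`, `output_var`).

```python
import math


def matern52(x, x_prime, length_scale=1.0, output_var=1.0):
    """Matern 5/2 covariance between two feature vectors."""
    r = math.dist(x, x_prime)                 # Euclidean distance ||x - x'||
    s = math.sqrt(5.0) * r / length_scale     # scaled distance sqrt(5) r / l
    # s*s/3 equals 5 r^2 / (3 l^2), the quadratic term of the kernel.
    return output_var * (1.0 + s + s * s / 3.0) * math.exp(-s)


# Covariance decays smoothly as two toy feature vectors move apart.
for distance in (0.0, 0.5, 1.0, 2.0):
    print(distance, matern52((0.0,), (distance,)))
```

Shorter length scales make the covariance fall off faster, which corresponds to a rougher assumed property landscape.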

Model Training:

- Optimize GP hyperparameters (length scales, output variance, noise variance) by maximizing the log marginal likelihood using the L-BFGS-B algorithm: [ \log p(\boldsymbol{y}_n \mid \boldsymbol{X}_n) = -\frac{1}{2} (\boldsymbol{y}_n - m(\boldsymbol{X}_n))^T [K(\boldsymbol{X}_n, \boldsymbol{X}_n) + \sigma^2 I]^{-1} (\boldsymbol{y}_n - m(\boldsymbol{X}_n)) - \frac{1}{2} \log \lvert K(\boldsymbol{X}_n, \boldsymbol{X}_n) + \sigma^2 I \rvert - \frac{n}{2} \log 2\pi ]

- Implement using GPyTorch or scikit-optimize libraries for robust hyperparameter estimation [18].
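For readers who want to see what the libraries compute, the log marginal likelihood can be evaluated directly from a kernel matrix with NumPy. This sketch assumes a constant mean and a precomputed kernel matrix `K`; it only evaluates the objective and leaves the hyperparameter search itself to an optimizer. All names are illustrative.

```python
import numpy as np


def log_marginal_likelihood(K, y, noise_var, mean_const=0.0):
    """log p(y | X) for a GP with kernel matrix K and Gaussian noise."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    L = np.linalg.cholesky(K + noise_var * np.eye(n))
    resid = y - mean_const
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, resid))
    data_fit = -0.5 * resid @ alpha                # -1/2 r^T [K + s^2 I]^{-1} r
    complexity = -np.sum(np.log(np.diag(L)))       # -1/2 log|K + s^2 I|
    constant = -0.5 * n * np.log(2.0 * np.pi)
    return float(data_fit + complexity + constant)


K = np.array([[1.0, 0.5], [0.5, 1.0]])  # toy kernel matrix
y = np.array([0.3, -0.1])               # toy standardized observations
for noise in (0.01, 0.1, 1.0):
    print(noise, log_marginal_likelihood(K, y, noise))
```

The log-determinant term is computed from the Cholesky diagonal, since the determinant of the full matrix is the squared product of those diagonal entries.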

Model Validation:

- Perform leave-one-out or k-fold cross-validation to assess prediction accuracy.

- Monitor normalized root mean square error (NRMSE) for mean predictions and negative log predictive density (NLPD) for probabilistic calibration.

- Retrain model with full dataset before deployment in BO loop.
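The two validation metrics named above can be computed from held-out predictions as follows. Normalization conventions for NRMSE vary; this sketch divides by the range of the observed values, which is an assumption, and the example numbers are made up.

```python
import math


def nrmse(y_true, y_pred):
    """Root mean square error divided by the range of the observed values."""
    rmse = math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))
    return rmse / (max(y_true) - min(y_true))


def nlpd(y_true, pred_mean, pred_var):
    """Mean negative log density of each observation under N(mean, var)."""
    total = sum(0.5 * math.log(2.0 * math.pi * v) + (t - m) ** 2 / (2.0 * v)
                for t, m, v in zip(y_true, pred_mean, pred_var))
    return total / len(y_true)


# Hypothetical held-out measurements with GP predictive means and variances.
observed = [7.1, 8.4, 6.9, 7.8]
means = [7.0, 8.0, 7.2, 7.9]
variances = [0.05, 0.20, 0.10, 0.05]
print("NRMSE:", nrmse(observed, means))
print("NLPD:", nlpd(observed, means, variances))
```

NRMSE only scores the mean predictions, while NLPD also penalizes variances that are too small or too large, which is why the two are monitored together.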

Application Notes: For multi-objective optimization problems common in antibody development (e.g., simultaneously optimizing affinity, stability, and specificity), use independent GP surrogates for each objective when using a simple approach [20]. For advanced implementations, consider multi-task GPs that model correlations between objectives. For high-dimensional sequence optimization, consider combining GPs with deep learning embeddings to capture complex sequence-function relationships [10].

Core Component 2: Acquisition Functions

Acquisition Function Formulations

Acquisition functions guide the experimental design process by quantifying the potential utility of evaluating unseen antibody variants, strategically balancing exploration of uncertain regions with exploitation of promising areas. The following table compares the three primary acquisition functions used in antibody development.

Table 1: Acquisition Functions for Antibody Optimization

| Function | Formula | Mechanism | Antibody Application Context |

|---|---|---|---|

| Probability of Improvement (PI) | (\alpha_{PI}(x) = P(f(x) \geq f(x^+) + \epsilon) = \Phi\left(\frac{\mu(x) - f(x^+) - \epsilon}{\sigma(x)}\right)) | Measures probability that a new point exceeds current best by margin (\epsilon) [21] | Conservative approach for fine-tuning known antibody scaffolds with minor modifications |

| Expected Improvement (EI) | (\alpha_{EI}(x) = (\mu(x) - f(x^+) - \epsilon)\Phi(z) + \sigma(x)\phi(z)) where (z = \frac{\mu(x) - f(x^+) - \epsilon}{\sigma(x)}) [22] | Measures expected magnitude of improvement over current best [8] | General-purpose choice for balanced exploration-exploitation in sequence optimization |

| Upper Confidence Bound (UCB) | (\alpha_{UCB}(x) = \mu(x) + \lambda\sigma(x)) [22] | Optimistic strategy assuming upper confidence bound is achievable | High-risk exploration for discovering novel antibody scaffolds with unusual properties |
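
The three formulas in the table translate directly into code. The sketch below uses only the Python standard library and assumes a maximization problem with a Gaussian posterior (mean `mu`, standard deviation `sigma`) at each candidate. The two candidates and their posterior values are hypothetical.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal: cdf is Phi, pdf is phi

def probability_of_improvement(mu, sigma, best, eps=0.01):
    """PI: probability that f(x) exceeds the incumbent `best` by at least eps."""
    if sigma <= 0:
        return 1.0 if mu >= best + eps else 0.0
    return _STD_NORMAL.cdf((mu - best - eps) / sigma)

def expected_improvement(mu, sigma, best, eps=0.01):
    """EI: expected amount by which f(x) exceeds best + eps."""
    if sigma <= 0:
        return max(mu - best - eps, 0.0)
    z = (mu - best - eps) / sigma
    return (mu - best - eps) * _STD_NORMAL.cdf(z) + sigma * _STD_NORMAL.pdf(z)

def upper_confidence_bound(mu, sigma, lam=2.0):
    """UCB: optimistic estimate mu + lambda * sigma."""
    return mu + lam * sigma

best_so_far = 1.0
# Candidate A: close to the incumbent, low uncertainty
# Candidate B: lower predicted mean, high uncertainty
pi_a = probability_of_improvement(1.0, 0.1, best_so_far)
pi_b = probability_of_improvement(0.8, 0.6, best_so_far)
ei_a = expected_improvement(1.0, 0.1, best_so_far)
ei_b = expected_improvement(0.8, 0.6, best_so_far)
ucb_a = upper_confidence_bound(1.0, 0.1)
ucb_b = upper_confidence_bound(0.8, 0.6)

print("PI prefers", "A" if pi_a > pi_b else "B")
print("EI prefers", "A" if ei_a > ei_b else "B")
print("UCB prefers", "A" if ucb_a > ucb_b else "B")
```

In this toy case PI ranks the low-uncertainty candidate first while EI and UCB favor the uncertain one, which mirrors the conservative versus exploratory behavior described in the table.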

Implementation Protocol for Acquisition Optimization

Materials and Reagents:

- Trained surrogate model (Gaussian Process)

- Computational resources for numerical optimization

- Defined search space of antibody sequences or formulations

Procedure:

Function Selection:

- For initial discovery phases with high uncertainty, select UCB with (\lambda = 2.0-3.0) for aggressive exploration.

- For intermediate optimization, use Expected Improvement for balanced trade-off.

- For final fine-tuning stages, employ PI with small (\epsilon = 0.01-0.05) for conservative improvements.

Acquisition Optimization:

- Using the trained GP surrogate, compute the acquisition function values across the defined antibody sequence space.

- Employ multi-start gradient-based optimization (e.g., L-BFGS-B) or global optimization techniques to find the maximum of the acquisition function.

- For combinatorial sequence spaces, use genetic algorithms (NSGA-II) or simulated annealing tailored to antibody representations.
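
For discrete sequence spaces, where gradients are unavailable, the acquisition maximization step can be sketched with simulated annealing over single-residue substitutions. The acquisition function below is a hypothetical toy stand-in (in practice it would wrap the trained GP), and the cooling schedule and starting CDRH3 sequence are illustrative assumptions.

```python
import math
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

def toy_acquisition(seq):
    """Hypothetical stand-in for a GP-based acquisition value (higher is better)."""
    return sum(1.0 for aa in seq if aa in "WYF") - 0.5 * seq.count("C")

def simulated_annealing(acq, start, n_steps=2000, t0=1.0, t_end=0.01, seed=0):
    rng = random.Random(seed)
    current, current_val = start, acq(start)
    best, best_val = current, current_val
    for step in range(n_steps):
        # Geometric cooling from t0 down to t_end
        temp = t0 * (t_end / t0) ** (step / max(n_steps - 1, 1))
        # Propose a single-residue substitution at a random position
        pos = rng.randrange(len(current))
        proposal = current[:pos] + rng.choice(AMINO_ACIDS) + current[pos + 1:]
        val = acq(proposal)
        # Metropolis rule for maximization: always accept improvements,
        # accept worse moves with probability exp(delta / temp)
        if val >= current_val or rng.random() < math.exp((val - current_val) / temp):
            current, current_val = proposal, val
            if val > best_val:
                best, best_val = proposal, val
    return best, best_val

start_seq = "ARDGSGSYAMDV"  # hypothetical starting CDRH3
best_seq, best_val = simulated_annealing(toy_acquisition, start_seq)
print(best_seq, best_val)
```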

Candidate Selection:

- Select the top (k) candidates (for batch evaluation) that maximize the acquisition function.

- For parallel experimental workflows, use techniques like Kriging believer or local penalization to select diverse batches that cover promising regions of the sequence space.

- Implement Thompson sampling as an alternative for highly parallelized evaluation of antibody variants.

Iteration and Update:

- Proceed with experimental evaluation of selected candidates through the oracle function.

- Update the surrogate model with new data and repeat the acquisition process.

Application Notes: For antibody humanization tasks where the goal is to reduce immunogenicity while maintaining binding affinity, use a constrained EI formulation that incorporates domain knowledge [10]. In formulation optimization with physical constraints (e.g., osmolality, pH), modify the acquisition function to penalize invalid regions [20]. The acquisition function's exploration-exploitation balance can be dynamically adjusted based on remaining experimental budget—favoring exploration early and exploitation later in the campaign.
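
One widely used way to build a constrained EI is to multiply the unconstrained EI by the probability that the candidate satisfies the constraint. The sketch below assumes the constraint (for example, a predicted immunogenicity risk score) has its own Gaussian surrogate; the score, its limit, and all numerical values are hypothetical, and the source does not specify which constrained formulation was used.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()

def expected_improvement(mu, sigma, best):
    """Unconstrained EI for maximization."""
    if sigma <= 0:
        return max(mu - best, 0.0)
    z = (mu - best) / sigma
    return (mu - best) * _STD_NORMAL.cdf(z) + sigma * _STD_NORMAL.pdf(z)

def probability_feasible(c_mu, c_sigma, limit):
    """P(c(x) <= limit) under a Gaussian prediction of the constraint value."""
    if c_sigma <= 0:
        return 1.0 if c_mu <= limit else 0.0
    return _STD_NORMAL.cdf((limit - c_mu) / c_sigma)

def constrained_ei(mu, sigma, best, c_mu, c_sigma, limit):
    """EI weighted by the probability of satisfying the constraint."""
    return expected_improvement(mu, sigma, best) * probability_feasible(c_mu, c_sigma, limit)

# Two candidates with identical predicted affinity but different predicted risk
risk_limit = 0.5  # hypothetical upper limit on the risk score
safe = constrained_ei(1.2, 0.3, 1.0, c_mu=0.2, c_sigma=0.1, limit=risk_limit)
risky = constrained_ei(1.2, 0.3, 1.0, c_mu=0.7, c_sigma=0.1, limit=risk_limit)
print(round(safe, 4), round(risky, 4))
```

The same weighting can be applied to physical formulation constraints such as osmolality, provided a predictive model of the constrained quantity is available.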

Core Component 3: Experimental Oracles

Oracle Functions for Antibody Assessment

In Bayesian optimization, the oracle function represents the expensive, black-box experimental process that evaluates candidate antibodies and returns quantitative measurements of the properties of interest. For antibody development, these oracle functions typically involve high-throughput experimental assays that measure key developability properties. The relationship between oracle measurements and the optimization workflow is crucial for success.

Implementation Protocol for Oracle Validation

Materials and Reagents:

- Purified antibody variants or formulations for testing

- Assay-specific reagents and equipment

- High-throughput screening infrastructure

Procedure for Binding Affinity Oracle:

Surface Plasmon Resonance (SPR) or Bio-Layer Interferometry (BLI):

- Immobilize antigen on sensor chip according to manufacturer's protocol

- Dilute antibody variants in running buffer at multiple concentrations

- Measure association and dissociation phases to determine kinetic parameters (kon, koff)

- Calculate binding affinity (K_D) from kinetic rates or steady-state analysis

- Include reference antibodies for quality control and signal normalization

High-Throughput ELISA Screening:

- Coat microplates with antigen at optimized concentration

- Block plates with protein-based blocking buffer

- Incubate with antibody variants at standardized concentration

- Detect binding with enzyme-conjugated secondary antibody and substrate

- Measure absorbance and normalize to positive and negative controls

Procedure for Stability Oracle:

Differential Scanning Fluorimetry (DSF):

- Prepare antibody samples in formulation buffer with fluorescent dye (e.g., SYPRO Orange)

- Apply temperature ramp from 25°C to 95°C in real-time PCR instrument

- Monitor fluorescence intensity as function of temperature

- Determine melting temperature (T_m) from inflection point of unfolding curve

- Rank variants by thermal stability based on T_m values
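
Determining T_m from the inflection point amounts to finding the temperature at which the first derivative of fluorescence with respect to temperature is largest. The sketch below applies central differences to a synthetic, noise-free sigmoidal unfolding curve; the curve shape and its 68 °C midpoint are assumptions for illustration, and real traces would normally be smoothed first.

```python
import math

def melting_temperature(temps, fluorescence):
    """Return the temperature at which dF/dT (central difference) is maximal."""
    best_t, best_slope = None, -math.inf
    for i in range(1, len(temps) - 1):
        slope = (fluorescence[i + 1] - fluorescence[i - 1]) / (temps[i + 1] - temps[i - 1])
        if slope > best_slope:
            best_t, best_slope = temps[i], slope
    return best_t

# Synthetic two-state unfolding curve sampled every 0.5 degrees C from 25 to 95
temps = [25.0 + 0.5 * i for i in range(141)]
fluor = [1.0 / (1.0 + math.exp(-(t - 68.0) / 1.5)) for t in temps]

tm = melting_temperature(temps, fluor)
print("Estimated Tm (degrees C):", tm)
```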

Accelerated Stability Assessment:

- Incubate antibody formulations at stressed conditions (e.g., 25°C, 40°C for 2-4 weeks)

- Analyze samples periodically for aggregation (SEC-HPLC), fragmentation (CE-SDS), and binding activity

- Calculate degradation rates and compare relative stabilities

Application Notes: Implement the BreviA system or similar high-throughput platforms for parallel evaluation of 384 antibody-antigen interactions when working with large variant libraries [17]. For early-stage screening, prioritize throughput over precision by using single-concentration assays before validating hits with full kinetic analysis. Incorporate quality control metrics and replicate measurements to quantify and model experimental noise in the Bayesian optimization framework.

Integrated Protocol: Multi-Objective Antibody Optimization

This section provides a complete experimental protocol for optimizing antibody formulations using Bayesian optimization, based on a published study that simultaneously improved three key biophysical properties of a monoclonal antibody [20].

Materials and Reagents

Table 2: Research Reagent Solutions for Bayesian Antibody Optimization

| Category | Specific Items | Function in Protocol |

|---|---|---|

| Model System | Bococizumab-IgG1 monoclonal antibody | Model therapeutic antibody for optimization |

| Excipients | d-Sorbitol (≥98%), L-Arginine (≥99.5%), L-Aspartic acid (≥98%), L-Glutamic acid (≥99%) | Formulation components to optimize stability |

| Buffers | L-Histidine (≥99.5%), Hydrochloric acid | Buffer system for pH control |

| Analytical Instruments | SPR/BLI instrument, DSF-capable RT-PCR system, UPLC/HPLC systems | Oracle functions for property measurement |

| Software | ProcessOptimizer (v0.9.4), pHcalc package, Python with scikit-optimize | BO implementation and constraint management |

Step-by-Step Procedure

Problem Formulation and Search Space Definition:

- Define six input variables: concentration of Sorbitol, concentration of Arginine, pH, fraction of Glutamic acid, fraction of Aspartic acid, and fraction of HCl

- Normalize all variables to a unit hypercube for optimization

- Set optimization objectives: maximize melting temperature (Tm, thermal stability), maximize diffusion interaction parameter (kD, colloidal stability), and maximize stability against air-water interfaces

- Define practical constraints: osmolality 250-500 mOsm/kg, sum of acid fractions ≤1, pH range 5.0-7.0
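
The search-space definition above can be sketched as a mapping to and from the unit hypercube plus a feasibility check. The concentration ranges for sorbitol and arginine are hypothetical placeholders (the source does not give them), and osmolality is passed in as a pre-computed value because its calculation depends on the buffer model.

```python
# Variable bounds; the two concentration ranges are hypothetical placeholders
BOUNDS = {
    "sorbitol_mM": (0.0, 300.0),
    "arginine_mM": (0.0, 150.0),
    "pH": (5.0, 7.0),          # pH range stated in the constraints
    "frac_glu": (0.0, 1.0),
    "frac_asp": (0.0, 1.0),
    "frac_hcl": (0.0, 1.0),
}

def to_unit(point):
    """Scale each variable to [0, 1]."""
    return {k: (point[k] - lo) / (hi - lo) for k, (lo, hi) in BOUNDS.items()}

def from_unit(unit_point):
    """Map a point in the unit hypercube back to physical units."""
    return {k: lo + unit_point[k] * (hi - lo) for k, (lo, hi) in BOUNDS.items()}

def is_feasible(point, osmolality_mosm_kg):
    """Check osmolality, acid-fraction sum, and pH constraints."""
    acid_sum = point["frac_glu"] + point["frac_asp"] + point["frac_hcl"]
    return (
        250.0 <= osmolality_mosm_kg <= 500.0
        and acid_sum <= 1.0
        and 5.0 <= point["pH"] <= 7.0
    )

formulation = {
    "sorbitol_mM": 150.0, "arginine_mM": 75.0, "pH": 6.0,
    "frac_glu": 0.5, "frac_asp": 0.25, "frac_hcl": 0.25,
}
unit = to_unit(formulation)
print(unit)
print(is_feasible(formulation, osmolality_mosm_kg=300.0))
```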

Initial Experimental Design:

- Sample 13 initial points randomly from the defined variable space

- Prepare formulations according to the generated compositions using the pHcalc package for concentration reconstruction

- Ensure all initial formulations satisfy defined constraints

Oracle Evaluation:

- Measure Tm using DSF: Prepare samples at 0.2 mg/mL antibody in respective formulations, apply temperature ramp 25-95°C at 1°C/min, determine Tm from inflection point

- Measure kD using dynamic light scattering: Analyze antibody solutions at 10 mg/mL, 25°C, determine interaction parameter from concentration-dependent scattering

- Assess interfacial stability by measuring aggregation after agitation: Subject samples to vertical shaking for 24 hours, quantify soluble monomer by SEC-HPLC

Bayesian Optimization Loop:

- Standardize all three objectives to zero mean and unit variance

- Train independent Gaussian Process surrogates for each objective using Matern 5/2 kernel

- With 75% probability, use exploitation route: Generate Pareto front with NSGA-II (100 generations, 100 population size), select point with maximum minimum distance to existing observations in objective and variable space

- With 25% probability, use exploration route: Minimize Steinerberger sum from 20 random starts to explore sparsely sampled regions

- Implement batch suggestion with batch size 5 using Kriging believer strategy

- Enforce constraints during candidate suggestion by discarding constraint-violating genetic moves
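
The exploitation route can be illustrated with a simplified sketch: filter candidate predictions down to the non-dominated (Pareto) set, then pick the point farthest from anything already measured. This version assumes all three objectives are standardized and maximized, measures distance in objective space only, and takes a small hypothetical candidate list in place of an NSGA-II run.

```python
import math

def dominates(a, b):
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

def pick_most_novel(front, observed):
    """Select the front point with the largest minimum distance to observed points."""
    return max(front, key=lambda p: min(math.dist(p, o) for o in observed))

# Hypothetical standardized predictions: (Tm, kD, interfacial stability)
candidates = [
    (1.0, 0.2, 0.1),
    (0.5, 0.9, 0.3),
    (0.4, 0.8, 0.2),   # dominated by the second candidate
    (0.1, 0.1, 1.2),
    (0.9, 0.1, 0.0),   # dominated by the first candidate
]
observed = [(0.9, 0.3, 0.0), (0.4, 0.7, 0.4)]

front = pareto_front(candidates)
choice = pick_most_novel(front, observed)
print(front)
print(choice)
```

Choosing the most distant Pareto point spreads experiments along the trade-off surface instead of clustering them near formulations that have already been tested.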

Iteration and Convergence:

- Run BO for 4 iterations (20 total experiments beyond initial design)

- Monitor hypervolume progression to assess convergence

- Select final optimized formulation from Pareto front based on application requirements

Expected Results and Interpretation

This protocol should identify formulation conditions that simultaneously improve all three target properties within 33 total experiments (13 initial + 20 BO-suggested) [20]. The algorithm typically identifies clear trade-offs between properties (e.g., high pH favors Tm while low pH favors kD), enabling informed decision-making based on therapeutic application priorities. The entire computational process requires approximately 56 minutes per iteration on standard computing hardware, with the majority of time spent on pH and osmolality constraint enforcement [20].

Troubleshooting and Optimization Guidelines

Poor Surrogate Model Performance:

- Problem: GP predictions show high cross-validation error

- Solution: Normalize input features and objectives, consider alternative kernel functions, or increase initial design size for better space-filling

Insufficient Exploration:

- Problem: BO converges quickly to local optimum

- Solution: Increase exploration probability to 30-40%, use UCB with higher λ values, or incorporate random points in batch suggestions

Constraint Violations:

- Problem: Suggested formulations violate practical constraints

- Solution: Implement more conservative constraint handling through penalty functions or use of feasible set projections

High Experimental Noise:

- Problem: Oracle measurements show high variability

- Solution: Incorporate explicit noise modeling in GP, implement replicate measurements for promising candidates, use robust acquisition functions

For advanced implementations, consider transfer learning approaches where knowledge from previous antibody optimization campaigns is incorporated through informed priors in the GP model, potentially reducing experimental burden by 30-50% in related projects [19].

Within the framework of Bayesian optimization (BO) for antibody design, the precise definition of optimization objectives is paramount. BO provides a sample-efficient, uncertainty-aware framework for navigating the vast combinatorial sequence space of antibodies, where exhaustive experimental screening is infeasible [1] [23]. This process treats the intricate biophysical simulations and experimental assays as black-box "oracles" that are expensive to query. The efficacy of this search is wholly dependent on a clear, quantitative articulation of the target properties. This application note delineates the three primary pillars of antibody optimization—affinity, developability, and stability—detailing their computational and experimental assessment methods to guide the formulation of robust objectives for BO campaigns.

Core Optimization Objectives

The following table summarizes the key parameters and their assessment methods for each optimization objective, which are critical for defining the output of a Bayesian optimization oracle.

Table 1: Core Optimization Objectives in Antibody Design

| Objective | Key Parameters | Common In Silico/Computational Assessment Methods | Common Experimental Assessment Methods |

|---|---|---|---|

| Affinity | Binding affinity (KD), Association rate (ka), Dissociation rate (kd) | Structural modeling with scoring functions (e.g., mCSM-AB2), Machine Learning models (e.g., ensemble ML, graph neural networks), Lattice-based simulations (e.g., Absolut! framework) [1] [24] | Surface Plasmon Resonance (SPR), Bio-Layer Interferometry (BLI), Enzyme-Linked Immunosorbent Assay (ELISA) [17] |

| Developability | Colloidal stability (kD), Viscosity, Isoelectric point (pI), Hydrophobicity, Presence of aggregation motifs | Sequence-based pI calculation, Hydrophobicity indices (e.g., TAP), Structure-based patch analysis, Machine learning classifiers [25] [26] | Size-Exclusion Chromatography (SEC), Dynamic Light Scattering (DLS), Differential Scanning Fluorimetry (DSF), Hydrophobic Interaction Chromatography (HIC) [25] [17] |

| Stability | Thermal stability (Tm), Aggregation temperature (Tagg) | Instability index, Aliphatic index, Molecular Dynamics (MD) simulations [26] | Differential Scanning Calorimetry (DSC), Differential Scanning Fluorimetry (DSF) [17] |

Affinity

Affinity defines the strength of the interaction between an antibody and its target antigen, often dominated by the sequence and structure of the Complementarity-Determining Regions (CDRs), particularly CDRH3 [1]. The primary goal is to minimize the dissociation constant (KD), which often involves engineering slower off-rates (kd). For BO, affinity is frequently used as the primary objective function. The AntBO framework, for instance, uses a combinatorial BO approach with a trust region to efficiently maximize binding affinity as evaluated by a black-box simulator, demonstrating the ability to find high-affinity CDRH3 sequences in fewer than 200 oracle calls [1].

Developability

Developability encompasses a suite of biophysical properties that determine whether an antibody candidate can be successfully developed into a stable, manufacturable, and safe therapeutic. Unlike affinity, developability often functions as a constraint within a multi-objective BO problem. Key considerations include:

- Colloidal Stability: A measure of protein-protein interactions in solution, often assessed via the diffusion interaction parameter (kD) from DLS. Low (typically negative) kD values indicate net attractive interactions that can lead to aggregation [25].

- Viscosity: High viscosity poses challenges for subcutaneous injection. The isoelectric point (pI) of the variable domains is a simple and powerful predictor; aligning the pIs of different domains in bispecific antibodies can mitigate charge asymmetries and reduce viscosity risks [25].

- Sequence-based Risks: In silico checks for undesirable motifs, such as glycosylation sites or chemical degradation hotspots, are routinely performed [1].
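
A sequence-based risk check of this kind can be sketched as a regular-expression scan. The motif list below is an illustrative assumption rather than an exhaustive or source-specified panel: it covers the N-linked glycosylation sequon (N-X-S/T with X not proline) and two commonly flagged degradation hotspots (NG deamidation, DG isomerization).

```python
import re

# Lookahead patterns so that overlapping motifs are all reported
LIABILITY_PATTERNS = {
    "N-glycosylation (N-X-S/T, X != P)": r"(?=(N[^P][ST]))",
    "Asn deamidation (NG)": r"(?=(NG))",
    "Asp isomerization (DG)": r"(?=(DG))",
}

def scan_liabilities(seq):
    """Return (liability name, 0-based position, matched motif) for each hit."""
    hits = []
    for name, pattern in LIABILITY_PATTERNS.items():
        for match in re.finditer(pattern, seq):
            hits.append((name, match.start(), match.group(1)))
    return hits

clean = scan_liabilities("ARDYYGMDV")       # hypothetical CDRH3 without hits
flagged = scan_liabilities("ARNGSYDGMDV")   # hypothetical CDRH3 with three hits
print(clean)
for hit in flagged:
    print(hit)
```

In a BO loop, the number of hits can act either as a hard filter on candidates or as a penalty term added to the acquisition value.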

Frameworks like PropertyDAG formalize these complex relationships by structuring objectives in a directed acyclic graph, allowing BO to hierarchically prioritize candidates that satisfy upstream developability constraints before optimizing for affinity [23].

Stability

Stability refers to the structural integrity and resistance to degradation of the antibody itself. This is an intrinsic property crucial for ensuring adequate shelf-life and in vivo half-life. Thermal stability, measured as the melting temperature (Tm) via DSC or DSF, is a standard metric. Computationally, stability can be inferred from various sequence- and structure-based descriptors. Large-scale analyses of natural antibody repertoires have quantified the plasticity of these stability parameters, providing a reference landscape against which engineered antibodies can be compared [26]. In BO, stability can be integrated either as a secondary objective in a multi-objective formulation or as a constraint, similar to developability.

Experimental Protocols for Objective Quantification

Protocol: High-Throughput Affinity Kinetics using Bio-Layer Interferometry (BLI)

Purpose: To quantitatively determine the binding affinity and kinetics (ka, kd, KD) of antibody variants in a high-throughput format suitable for generating data for machine learning model training [17].

Procedure:

- Antibody Capture: Hydrate biosensors (e.g., Anti-Human Fc Capture) in kinetics buffer for at least 10 minutes. Load antibody samples (10-20 µg/mL in kinetics buffer) onto the biosensors for 300 seconds to achieve adequate capture levels.

- Baseline Establishment: Immerse the antibody-loaded biosensors in kinetics buffer for 60 seconds to establish a stable baseline.

- Association Phase: Dip the biosensors into wells containing a series of concentrations of the antigen (e.g., 0, 3.125, 6.25, 12.5, 25, 50 nM) for 300 seconds to monitor binding.

- Dissociation Phase: Transfer the biosensors back to kinetics buffer for 600 seconds to monitor dissociation.

- Data Analysis: Reference the data against a buffer-only well. Fit the association and dissociation curves globally to a 1:1 binding model using the BLI analysis software to extract ka, kd, and KD.
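
To make the 1:1 binding model concrete, the sketch below generates noise-free synthetic sensorgrams and recovers the rate constants by linearization: the dissociation phase decays as exp(-kd t), and the association phase approaches equilibrium at an observed rate k_obs = ka·C + kd. This is a simplified stand-in for the global nonlinear fit performed by instrument software, and the "true" rate constants are assumed values used only to generate data.

```python
import math

def linear_fit(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

# Assumed kinetics for the synthetic data (not from the source)
KA_TRUE = 1.0e5    # association rate, 1/(M*s)
KD_TRUE = 1.0e-3   # dissociation rate, 1/s
R_MAX = 1.0        # maximal response, arbitrary units

def association_response(t, conc):
    """1:1 model response during association at antigen concentration conc (M)."""
    k_obs = KA_TRUE * conc + KD_TRUE
    r_eq = R_MAX * KA_TRUE * conc / k_obs
    return r_eq * (1.0 - math.exp(-k_obs * t))

dt = 10.0
assoc_times = [dt * i for i in range(31)]    # 0 to 300 s
dissoc_times = [dt * i for i in range(61)]   # 0 to 600 s
concs = [3.125e-9, 6.25e-9, 12.5e-9, 25e-9, 50e-9]  # molar

# Dissociation: ln R(t) is linear in t with slope -kd
r0 = association_response(300.0, concs[-1])
log_r = [math.log(r0 * math.exp(-KD_TRUE * t)) for t in dissoc_times]
kd_est = -linear_fit(dissoc_times, log_r)[0]

# Association: ln(dR/dt) is linear in t with slope -k_obs
k_obs_est = []
for c in concs:
    r = [association_response(t, c) for t in assoc_times]
    log_rate = [math.log((r[i + 1] - r[i]) / dt) for i in range(len(r) - 1)]
    k_obs_est.append(-linear_fit(assoc_times[:-1], log_rate)[0])

# k_obs = ka * C + kd, so the slope against concentration is ka
ka_est, _ = linear_fit(concs, k_obs_est)
KD_est = kd_est / ka_est  # equilibrium dissociation constant, M

print(f"ka = {ka_est:.3e} 1/(M*s), kd = {kd_est:.3e} 1/s, KD = {KD_est * 1e9:.2f} nM")
```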

Protocol: Colloidal Stability Assessment via Dynamic Light Scattering (DLS)

Purpose: To measure the diffusion interaction parameter (kD), a key indicator of colloidal stability and aggregation propensity, which correlates with viscosity and solution behavior [25].

Procedure:

- Sample Preparation: Buffer-exchange antibody candidates into a standard formulation buffer (e.g., histidine buffer, pH 6.0) and concentrate to 10 mg/mL. Clarify the solution by centrifugation at 15,000 × g for 10 minutes.

- DLS Measurement: Load the supernatant into a quartz cuvette. Place the cuvette in the instrument and equilibrate to 25°C.

- Data Acquisition: Perform a series of measurements at increasing antibody concentrations (e.g., 2, 5, 10 mg/mL). For each concentration, measure the diffusion coefficient (D).

- kD Calculation: Plot the measured diffusion coefficient (D) against the antibody concentration (c) and fit a straight line according to the equation D = D0 (1 + kD c), where D0 is the diffusion coefficient at infinite dilution. The intercept of the fit gives D0 and the slope equals D0·kD, so kD is obtained as the slope divided by the intercept. A high, positive kD indicates net repulsive forces and favorable colloidal stability.
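
Because D = D0 (1 + kD c), a linear fit of D against c has intercept D0 and slope D0·kD, so kD is the slope divided by the intercept. A minimal worked example follows; the diffusion coefficients are hypothetical values chosen to lie exactly on a line.

```python
def linear_fit(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

# Hypothetical DLS data: concentration (mg/mL) and diffusion coefficient (cm^2/s)
conc_mg_ml = [2.0, 5.0, 10.0]
diff_coeff = [4.08e-7, 4.20e-7, 4.40e-7]

slope, d0 = linear_fit(conc_mg_ml, diff_coeff)
k_d = slope / d0  # diffusion interaction parameter, mL/mg

print(f"D0 = {d0:.3e} cm^2/s, kD = {k_d:.4f} mL/mg ({k_d * 1000:.1f} mL/g)")
```

A positive result, as in this example, corresponds to net repulsive interactions.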

Workflow Visualization

Figure 1: Bayesian Optimization Workflow for Antibody Design. This diagram illustrates the iterative process of using Bayesian optimization to balance multiple objectives, with experimental assays feeding data back to update the model.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Reagents and Platforms for Antibody Optimization

| Reagent/Platform | Function/Description | Application in Optimization |

|---|---|---|

| Absolut! Software Framework | A computational lattice-based simulator for end-to-end in silico evaluation of antibody-antigen binding affinity [1]. | Serves as a deterministic, low-cost black-box "oracle" for benchmarking BO algorithms like AntBO before wet-lab experimentation. |

| IgFold & ABodyBuilder | Deep learning-based tools for rapid and accurate prediction of antibody 3D structures from sequence alone [26] [27]. | Generates structural inputs for structure-based surrogate models in BO, enabling the use of 3D features without experimental structures. |

| Protein Language Models (pLMs) | Large-scale neural networks (e.g., ESM-2) trained on protein sequence databases to infer evolutionary and structural constraints [23] [27]. | Provides sequence embeddings for BO surrogate models and can be used as a "soft constraint" to prioritize natural, functional sequences. |

| BLI & SPR Platforms | Label-free biosensor systems (e.g., Octet, Biacore) for real-time kinetic analysis of biomolecular interactions [17]. | The gold-standard experimental oracle for quantifying binding affinity (KD, ka, kd) of designed antibody variants. |

| Phage/Yeast Display | In vitro selection technologies for screening vast libraries (10^9-10^10) of antibody variants for antigen binding [24] [17]. | High-throughput method for initial candidate discovery and affinity maturation; data can be used to train initial ML/BO models. |

| DLS & DSF Instruments | Analytical instruments for assessing colloidal stability (kD via DLS) and thermal stability (Tm via DSF) in a high-throughput manner [25] [17]. | Key experimental oracles for quantifying developability and stability objectives and constraints within a BO cycle. |


Frameworks in Action: From AntBO to Clone-Informed Bayesian Optimization

Antibodies are Y-shaped proteins crucial for therapeutic applications, with the Complementarity-Determining Region H3 (CDRH3) playing a dominant role in determining antigen-binding specificity and affinity [4]. The sequence space of CDRH3 is vast and combinatorial, making exhaustive experimental or computational screening for optimal binders infeasible [28] [4]. Combinatorial Bayesian Optimization (CBO) has emerged as a powerful machine learning framework to address this challenge, enabling efficient in silico design of high-affinity antibody sequences with favorable developability profiles [28] [4]. AntBO is a CBO implementation designed to bring automated antibody design closer to practical viability for in vitro experimentation [4] [29].

Core Methodology of AntBO

The AntBO framework treats the process of evaluating antigen-binding affinity as a black-box oracle. This oracle takes an antibody sequence as input and returns a binding affinity score, abstracting the complex computational simulations or experimental assays required for this assessment [28] [4]. The primary objective is to find CDRH3 sequences that maximize this oracle's output—indicating stronger binding—while navigating the immense combinatorial sequence space efficiently.

The Bayesian Optimization Engine

Bayesian Optimization is a sequential design strategy that builds a probabilistic surrogate model of the black-box function (the oracle) and uses an acquisition function to decide which sequences to evaluate next [28].

- Surrogate Model: AntBO typically employs Gaussian Process (GP) regression to model the relationship between antibody sequences and their predicted binding affinity. The GP provides a posterior distribution over the function space, yielding both an expected affinity and an uncertainty estimate for any given sequence [30].

- Acquisition Function: This function leverages the GP's predictions to balance exploration (sampling regions of high uncertainty) and exploitation (sampling regions of high predicted affinity). It selects the most promising sequences for the next round of evaluation by the oracle [28] [4].

- Combinatorial Search Space: The approach is specifically tailored for the discrete, combinatorial nature of the CDRH3 sequence space, making it more suitable than optimization methods designed for continuous spaces [4].

Integration of Developability Constraints

A key feature of AntBO is the incorporation of a CDRH3 trust region. This restricts the Bayesian optimization search to sequences that are predicted to have favorable developability scores, ensuring that the designed antibodies not only bind strongly but also possess biophysical properties conducive to therapeutic development, such as stability and low immunogenicity [4].
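
The idea of a trust region over a discrete sequence space can be sketched as sampling neighbors within a fixed Hamming distance of the current best sequence and keeping only those that pass a developability filter. The filter below (net charge within ±2, no N-X-S/T glycosylation sequon) and the radius are hypothetical illustrations, not a statement of AntBO's actual criteria or implementation.

```python
import random
import re

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

def hamming(a, b):
    """Number of positions at which two equal-length sequences differ."""
    return sum(x != y for x, y in zip(a, b))

def net_charge(seq):
    """Crude net charge: +1 for K/R, -1 for D/E (histidine ignored)."""
    return sum(seq.count(a) for a in "KR") - sum(seq.count(a) for a in "DE")

def passes_developability(seq):
    """Hypothetical filter used only for illustration."""
    has_glyco = re.search(r"N[^P][ST]", seq) is not None
    return abs(net_charge(seq)) <= 2 and not has_glyco

def sample_trust_region(center, radius, n_samples, seed=0, max_tries=10000):
    """Sample distinct sequences within `radius` substitutions of `center`."""
    rng = random.Random(seed)
    found = set()
    tries = 0
    while len(found) < n_samples and tries < max_tries:
        tries += 1
        n_mut = rng.randint(1, radius)
        seq = list(center)
        for pos in rng.sample(range(len(center)), n_mut):
            seq[pos] = rng.choice(AMINO_ACIDS)
        candidate = "".join(seq)
        if candidate != center and passes_developability(candidate):
            found.add(candidate)
    return sorted(found)

center = "ARDGSGSYAMDV"  # hypothetical current best CDRH3
neighbors = sample_trust_region(center, radius=3, n_samples=5)
for s in neighbors:
    print(s, hamming(center, s), net_charge(s))
```

The acquisition function would then be evaluated only on such neighbors, and the radius can be expanded or shrunk depending on whether recent evaluations improved on the incumbent.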

The following diagram illustrates the core iterative workflow of the AntBO framework:

Performance Benchmarks

AntBO's performance has been rigorously evaluated against established baselines, demonstrating its superior efficiency and effectiveness in designing high-affinity CDRH3 sequences.

Benchmarking Setup

- Oracle: The Absolut! software suite was used as an in silico black-box oracle to score the target specificity and affinity of designed antibodies [4].

- Baselines: AntBO was compared against established baseline optimizers, including a genetic algorithm [4].

- Scope: Experiments were conducted for 159 discretized antigens available within the Absolut! framework [4].

Key Quantitative Results

Table 1: Summary of AntBO Benchmarking Performance [4]

| Metric | Performance of AntBO | Comparison Baseline |

|---|---|---|

| Optimization Efficiency | Found very-high-affinity CDRH3 sequences in as few as 38 protein designs | Outperformed genetic algorithm baseline |

| Performance vs. Experimental Data | Suggested sequences outperforming the best binder from 6.9 million experimental CDRH3s | Surpassed a massive experimentally derived database |

| Domain Knowledge | Required no prior domain knowledge for sequence design | - |

In a separate, head-to-head experimental comparison of a different but related Bayesian optimization method for full single-chain variable fragment (scFv) design, the machine learning approach generated a library where the best scFv showed a 28.7-fold improvement in binding over the best scFv from a directed evolution approach. Furthermore, in the most successful ML-designed library, 99% of the scFvs were improvements over the initial candidate [30].

Experimental Validation Protocols

While AntBO is an in silico design tool, its predictions require empirical validation. The following section outlines standard high-throughput experimental protocols used to measure the binding affinity and properties of antibodies designed by computational methods.

High-Throughput Binding Affinity Measurement

Principle: Yeast surface display is a powerful technique for expressing antibody fragments (like scFvs or Fabs) on the surface of yeast cells, allowing for high-throughput quantification of antigen binding via fluorescence-activated cell sorting (FACS) [30] [31] [17].

Table 2: Key Reagents for Yeast Display Binding Assay

| Research Reagent | Function/Description |

|---|---|

| Yeast Display Library | A population of yeast cells (e.g., Saccharomyces cerevisiae) genetically engineered to express a library of antibody variant sequences on their surface. |

| Fluorescently Labeled Antigen | The target antigen conjugated to a fluorophore (e.g., biotin-streptavidin with a fluorescent tag). Essential for detecting binding events via FACS. |

| FACS Instrument | Fluorescence-Activated Cell Sorter. Used to analyze and sort individual yeast cells based on the fluorescence intensity resulting from antigen binding. |

| Induction Media | Media (e.g., SGLC) used to induce the expression of the antibody fragment on the yeast cell surface. |

Step-by-Step Protocol:

- Library Transformation: Transform the library of designed antibody sequences into a suitable yeast display strain (e.g., EBY100) [30].

- Surface Expression Induction: Inoculate transformed yeast into induction media and incubate for 24-48 hours at a defined temperature (e.g., 20°C) with shaking to allow antibody expression on the cell surface [30].

- Antigen Binding: Label approximately 10^7 yeast cells with a range of concentrations of the fluorescently labeled antigen. Incubate on ice for a set period (e.g., 1-2 hours) to reach binding equilibrium [30].

- FACS Analysis & Sorting: Analyze the labeled cells using a FACS instrument. The median fluorescence intensity (MFI) of the population is measured and can be used to determine the apparent binding affinity. Cells displaying high-affinity binders can be physically sorted for further analysis or sequencing [30] [17].

- Data Analysis: Binding data is typically reported on a log-scale, with lower values indicating stronger binding. The resulting dataset is used to validate and potentially retrain the computational models [30].

The workflow for the end-to-end design and validation process, integrating AntBO with high-throughput experiments, is shown below:

Specificity and Developability Profiling

After initial affinity screening, lead candidates require further characterization.

- Kinetic Analysis with BLI/SPR: Techniques like Bio-Layer Interferometry (BLI) or Surface Plasmon Resonance (SPR) provide label-free, quantitative data on binding kinetics (association rate, kon; dissociation rate, koff) and affinity (KD) [31] [17]. BLI, for instance, can measure up to 96 interactions simultaneously in a single run [17].

- Stability Assessment with DSF: Differential Scanning Fluorimetry (DSF) is a high-throughput method to assess the thermal stability of antibodies. It measures the temperature at which an antibody unfolds, providing a key indicator of its developability [17].

The Scientist's Toolkit: Essential Research Reagents & Software

Table 3: Key Resources for Implementing an AntBO-Led Workflow

| Category | Tool/Reagent | Specific Function |

|---|---|---|

| Computational Software | AntBO | The combinatorial Bayesian optimization framework for CDRH3 design [28] [4]. |

| | Absolut! | A software suite that can act as an in silico oracle for benchmarking, enabling unconstrained generation of 3D antibody-antigen structures and affinity scoring [4]. |

| | Pre-trained Protein Language Models | Models (e.g., BERT) trained on large protein sequence databases (e.g., Pfam, OAS) to provide meaningful sequence representations and predict affinity with uncertainty [30]. |

| Experimental Platforms | Yeast Display | Eukaryotic display system for high-throughput screening of antibody libraries (up to 10^9 variants) and affinity measurement [30] [31]. |

| | Phage Display | In vitro selection system capable of screening extremely large antibody libraries (often >10^10 variants) [31]. |

| Characterization Instruments | FACS Sorter | Instrument for analyzing and sorting cells based on fluorescent antigen binding in display technologies [30] [17]. |

| | BLI (e.g., Octet systems) | Label-free instrument for high-throughput kinetic analysis of antibody-antigen interactions [31] [17]. |

| Data Resources | Observed Antibody Space (OAS) | A massive, publicly available database of natural antibody sequences used for pre-training language models [30]. |


Leveraging Gaussian Processes as Surrogate Models

The design of therapeutic antibodies represents a formidable challenge in biologics development, requiring the simultaneous optimization of multiple properties such as high antigen-binding affinity, specificity, and favorable developability profiles. The combinatorial nature of antibody sequence space, particularly in the critical complementarity-determining region 3 of the heavy chain (CDRH3), makes exhaustive screening practically impossible, whether experimental or computational [28] [32]. Within this framework, Bayesian optimization (BO) has emerged as a powerful, sample-efficient strategy for navigating this vast design space. Central to the BO framework is the Gaussian process surrogate model, a probabilistic machine learning model that approximates the complex, often unknown relationship between antibody sequence and function. By building a statistical surrogate of the expensive experimental oracle (e.g., binding affinity measurements), Gaussian processes enable data-efficient optimization by balancing the exploration of uncertain regions with the exploitation of known promising sequences [33] [23].

Gaussian processes are particularly well-suited for this task because they provide not only predictions of function but also a quantitative measure of uncertainty for those predictions. This uncertainty quantification is the cornerstone of the acquisition functions in BO, which guide the selection of the most informative sequences to test in the next experimental cycle [33]. The application of GP-based BO has been demonstrated to successfully identify high-affinity antibody sequences in under 200 calls to the binding oracle, outperforming sequences obtained from millions of experimental reads [28] [32]. This document details the theoretical foundation, practical implementation, and experimental protocols for employing GPs as surrogate models in antibody engineering campaigns.

Theoretical Foundation of Gaussian Process Surrogate Models

Gaussian Process Formulation

A Gaussian process is a collection of random variables, any finite number of which have a joint Gaussian distribution. It is fully defined by a mean function, ( m(\mathbf{x}) ), and a covariance function, ( k(\mathbf{x}, \mathbf{x}') ), and is expressed as: [ f(\mathbf{x}) \sim \mathcal{GP}(m(\mathbf{x}), k(\mathbf{x}, \mathbf{x}')) ] where ( \mathbf{x} ) represents an input antibody sequence [33] [34]. In practice, the mean function is often set to zero after centering the data. The covariance function, or kernel, is the critical component as it encodes assumptions about the function's smoothness and periodicity. For antibody sequence data, which is inherently discrete, specialized kernels are required.

The fundamental predictive equations of a GP for a test point ( \mathbf{x}_* ), given training inputs ( \mathbf{X} ) and observations ( \mathbf{y} ), are given by: [ \bar{f}_* = \mathbf{k}_*^T (\mathbf{K} + \sigma_n^2\mathbf{\Delta})^{-1} \mathbf{y} ] [ \mathbb{V}(f_*) = k(\mathbf{x}_*, \mathbf{x}_*) - \mathbf{k}_*^T (\mathbf{K} + \sigma_n^2\mathbf{\Delta})^{-1} \mathbf{k}_* ] where ( \mathbf{K} ) is the covariance matrix between all training points, ( \mathbf{k}_* ) is the covariance vector between the test point and all training points, ( \sigma_n^2 ) is the global noise variance, and ( \mathbf{\Delta} ) is a diagonal matrix containing the relative uncertainty estimates for each data point [33].
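As a minimal sketch of the predictive equations above, the following NumPy code computes the posterior mean and variance at one test point from precomputed kernel quantities. The function name `gp_posterior`, its argument names, and the toy numbers are illustrative assumptions and are not taken from any of the cited frameworks. A Cholesky solve stands in for the explicit matrix inverse, which is a common choice for numerical stability.

```python
import numpy as np

def gp_posterior(K, k_star, k_star_star, y, noise_var, delta=None):
    """Posterior mean and variance of a zero-mean GP at a single test point.

    K           : (n, n) kernel matrix between training inputs
    k_star      : (n,) kernel vector between the test point and training inputs
    k_star_star : scalar k(x_*, x_*)
    y           : (n,) centered observations
    noise_var   : global noise variance sigma_n^2
    delta       : (n,) diagonal of Delta (relative per-point uncertainty);
                  defaults to ones, i.e. the same noise for every point
    """
    n = K.shape[0]
    delta = np.ones(n) if delta is None else np.asarray(delta, dtype=float)
    A = K + noise_var * np.diag(delta)          # K + sigma_n^2 * Delta
    L = np.linalg.cholesky(A)                   # A = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))   # A^{-1} y
    v = np.linalg.solve(L, k_star)              # so that v.v = k_*^T A^{-1} k_*
    mean = k_star @ alpha
    var = k_star_star - v @ v
    return float(mean), float(var)

# Toy example with two training points (all numbers are made up)
K = np.array([[1.0, 0.5], [0.5, 1.0]])
k_star = np.array([0.8, 0.3])
y = np.array([1.2, -0.4])
mean, var = gp_posterior(K, k_star, 1.0, y, noise_var=0.1)
```

The returned variance is what an acquisition function later consumes; it shrinks toward zero as the test point becomes more similar to well-measured training sequences.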

Kernel Selection for Antibody Sequences

The choice of kernel function is paramount, as it determines the generalization properties of the surrogate model. Standard kernels designed for continuous spaces are unsuitable for the discrete, combinatorial space of antibody sequences. The following table summarizes kernels validated for antibody sequence data.

Table 1: Kernels for Gaussian Process Surrogate Models in Antibody Design

| Kernel Name | Input Domain | Mathematical Formulation | Application in Antibody Design |
|---|---|---|---|
| Transformed Overlap Kernel [23] | Sequence | ( k(\mathbf{x}, \mathbf{x}') = \sigma_f^2 \cdot \text{Overlap}(\phi(\mathbf{x}), \phi(\mathbf{x}')) ) | Adapted for categorical sequence data; measures sequence similarity. |
| Tanimoto (OneHot-T) [23] | Sequence | Derived from Tanimoto similarity on one-hot encoded sequences. | Suitable for binary fingerprint representations of sequences. |
| Tanimoto (BLO-T) [23] | Sequence | Derived from Tanimoto similarity on BLOSUM-62 substitution matrix embeddings. | Accounts for biochemical similarity between amino acids. |
| Matérn-5/2 (ESM-M) [23] | Sequence | ( k(r) = \sigma_f^2 (1 + \sqrt{5}r + \frac{5}{3}r^2) \exp(-\sqrt{5}r) ), where ( r ) is a distance metric on ESM-2 embeddings. | Uses embeddings from protein language models; captures deep semantic similarity. |
| String Kernel [23] | Sequence | Counts matching k-mers (substrings) between two sequences. | Captures local motif conservation important for function. |
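Two of the kernels in Table 1 are simple enough to sketch with the standard library alone. The code below is an illustration under stated assumptions, not the implementation used in [23]: the function names are hypothetical, sequences are assumed to have equal length, and the Matérn-5/2 function takes an already scaled distance ( r ) rather than computing it from ESM-2 embeddings. For one-hot encodings, the inner product of two sequences equals the number of matching positions ( m ) and each squared norm equals the length ( L ), so the Tanimoto similarity reduces to ( m / (2L - m) ).

```python
import math

def tanimoto_onehot(seq_a, seq_b):
    """Tanimoto similarity between the one-hot encodings of two sequences.

    <a, b> is the number of matching positions m and <a, a> = <b, b> = L,
    so T(a, b) = <a, b> / (<a, a> + <b, b> - <a, b>) = m / (2L - m).
    """
    if len(seq_a) != len(seq_b) or len(seq_a) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(seq_a, seq_b))
    return matches / (2 * len(seq_a) - matches)

def matern52(r, signal_var=1.0):
    """Matern-5/2 kernel value for a scaled distance r >= 0."""
    s = math.sqrt(5.0) * r
    return signal_var * (1.0 + s + (5.0 / 3.0) * r * r) * math.exp(-s)

# Hypothetical 4-residue fragments differing at one position
similarity = tanimoto_onehot("ARDY", "ARDW")   # 3 matches out of 4 positions
```

Both functions return their maximum value for identical inputs (a similarity of 1 and the signal variance, respectively), which is the behavior a GP kernel needs in order to treat a sequence as perfectly correlated with itself.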

Handling Multiple Objectives with Multi-Output Gaussian Processes

Antibody optimization is inherently multi-objective. A candidate must possess not only high affinity but also stability, low immunogenicity, and expressibility. Modeling these multiple, often correlated, objectives requires multi-output Gaussian processes [34] [20].

The Linear Model of Coregionalization (LMC) is a prominent multi-output framework. It models ( P ) output functions as linear combinations of ( Q ) independent latent Gaussian processes ( \{g_q(\mathbf{x})\}_{q=1}^Q ): [ f_p(\mathbf{x}) = \sum_{q=1}^{Q} W_{p,q} g_q(\mathbf{x}) + \kappa_p v_p(\mathbf{x}) ] where ( \mathbf{W} ) is a ( P \times Q ) weight matrix, ( v_p(\mathbf{x}) ) is an independent latent function for output ( p ), and ( \kappa_p ) is a learned constant [34]. The resulting covariance between two outputs ( f_p ) and ( f_{p'} ) at inputs ( \mathbf{x} ) and ( \mathbf{x}' ) is: [ \text{cov}(f_p(\mathbf{x}), f_{p'}(\mathbf{x}')) = \sum_{q=1}^{Q} b_{p, p'}^q k_q(\mathbf{x}, \mathbf{x}') ] where ( \mathbf{B}^q = \mathbf{W}^q (\mathbf{W}^q)^T ) is the coregionalization matrix for latent process ( q ), ( \mathbf{W}^q ) denotes the ( q )-th column of ( \mathbf{W} ), and ( b_{p, p'}^q ) is the ( (p, p') ) entry of ( \mathbf{B}^q ). The independent term ( \kappa_p v_p ) contributes additional covariance only when ( p = p' ). This structure allows the model to share information across different property predictions, improving data efficiency [34].
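To make the coregionalization structure concrete, the sketch below assembles the joint covariance over all outputs and inputs from a weight matrix and per-latent kernel matrices. It is a minimal illustration: the function name `lmc_covariance`, the output-major block ordering, and the toy values are assumptions, and the optional independent term is included only for completeness.

```python
import numpy as np

def lmc_covariance(W, latent_kernels, kappa=None, indep_kernels=None):
    """Joint (P*n, P*n) covariance of an LMC model evaluated at n inputs.

    W              : (P, Q) mixing weights
    latent_kernels : list of Q (n, n) kernel matrices, one per latent GP g_q
    kappa          : optional (P,) scales of the independent terms v_p
    indep_kernels  : optional list of P (n, n) kernel matrices for v_p

    Block (p, p') of the result holds sum_q b^q_{p,p'} K_q.
    """
    P, Q = W.shape
    n = latent_kernels[0].shape[0]
    cov = np.zeros((P * n, P * n))
    for q in range(Q):
        B_q = np.outer(W[:, q], W[:, q])        # coregionalization matrix B^q
        cov += np.kron(B_q, latent_kernels[q])
    if kappa is not None and indep_kernels is not None:
        for p in range(P):
            E = np.zeros((P, P))
            E[p, p] = kappa[p] ** 2             # affects only the (p, p) block
            cov += np.kron(E, indep_kernels[p])
    return cov

# Toy setting: P = 2 properties (say affinity and stability), Q = 2 latent GPs
W = np.array([[1.0, 0.5], [0.2, -1.0]])
K1 = np.array([[1.0, 0.5], [0.5, 1.0]])
K2 = np.array([[1.0, 0.1], [0.1, 1.0]])
cov = lmc_covariance(W, [K1, K2])
```

The off-diagonal blocks are where information is shared: a measurement of one property shifts the posterior for another in proportion to the corresponding entries of the coregionalization matrices.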

Experimental Protocol: Implementing a GP-BO Pipeline for CDRH3 Optimization

This protocol details the steps for implementing a combinatorial Bayesian optimization pipeline, specifically for designing antigen-specific CDRH3 sequences, based on the AntBO framework [28].

Materials and Reagents

Table 2: Key Research Reagent Solutions for Antibody Optimization

| Reagent / Resource | Function / Description | Example or Source |
|---|---|---|
| Antigen | The target molecule for antibody binding. | Purified recombinant protein. |
| Parent Antibody Sequence ( X_0 ) | The starting point for optimization, often a weak binder. | e.g., Bococizumab-IgG1 [20]. |
| Binding Affinity Oracle | The experimental assay used to measure binding strength. | Surface Plasmon Resonance (SPR) or Bio-Layer Interferometry (BLI). |
| Developability Assays | Suite of assays to assess stability, solubility, and aggregation propensity. | SEC-HPLC, DSF, ( k_D ) measurement [20]. |
| ProcessOptimizer Package | Python library for Bayesian optimization. | Version 0.9.4, built on scikit-optimize [20]. |
| IgFold | Software for rapid antibody structure prediction from sequence. | Used for generating structural features as model input [23]. |
| ESM-2 | Large protein language model. | Used to generate informative sequence embeddings [23]. |

Step-by-Step Procedure

Step 1: Problem Formulation and Initial Dataset Creation

- Define the design space: Focus on the CDRH3 loop. Define the sequence length and the allowable amino acids at each position.

- Establish the objective: Define the function ( f(\mathbf{x}) ) to be maximized. This could be a composite score based on binding affinity and a developability index.

- Generate initial data: Create a diverse set of CDRH3 sequences, for example, by generating random mutants of the parent sequence ( X_0 ) within a defined Hamming distance. The recommended initial dataset size is 13-20 sequences [28] [20].
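The initial-data step can be sketched as follows, assuming a fixed-length CDRH3 and the 20 standard amino acids at every position. The parent sequence shown is a made-up placeholder rather than a real antibody, and `random_mutants` is a hypothetical helper. The loop assumes that far more distinct mutants exist than are requested, which holds for typical CDRH3 lengths.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

def hamming(a, b):
    """Number of positions at which two equal-length sequences differ."""
    return sum(x != y for x, y in zip(a, b))

def random_mutants(parent, n_seqs, max_dist, seed=0):
    """Sample n_seqs unique mutants within Hamming distance max_dist of parent.

    Each mutant receives between 1 and max_dist substitutions, and every
    substitution differs from the parent residue, so the Hamming distance
    to the parent equals the number of substitutions made.
    """
    if not 1 <= max_dist <= len(parent):
        raise ValueError("max_dist must be between 1 and len(parent)")
    rng = random.Random(seed)
    mutants = set()
    while len(mutants) < n_seqs:
        n_mut = rng.randint(1, max_dist)
        positions = rng.sample(range(len(parent)), n_mut)
        seq = list(parent)
        for pos in positions:
            choices = [aa for aa in AMINO_ACIDS if aa != parent[pos]]
            seq[pos] = rng.choice(choices)
        mutants.add("".join(seq))
    return sorted(mutants)

# Hypothetical 12-residue parent CDRH3, 16 starting designs within distance 3
parent = "ARDYYGSSYFDY"
library = random_mutants(parent, n_seqs=16, max_dist=3)
```

Fixing the seed keeps the starting library reproducible across runs, which makes later comparisons between surrogate models or kernels easier to interpret.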

Step 2: Sequence Representation and Feature Engineering

Choose an appropriate numerical representation for the antibody sequences. The following are common approaches:

- One-Hot Encoding: Encode each amino acid in a sequence as a 20-dimensional binary vector.

- BLOSUM-62 Embedding: Encode each amino acid using its BLOSUM-62 substitution matrix row, which encapsulates evolutionary information.

- Protein Language Model Embeddings: Pass the sequence through a pre-trained model like ESM-2 and use the mean-pooled embeddings from the final layer as the feature vector [23].

- Structural Features: Use IgFold to predict the 3D structure of the antibody and extract features such as the flattened ( C_{\alpha} ) coordinates of the CDRH3 loop [23].
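Of the representations listed above, one-hot encoding is the simplest to sketch without external resources. The snippet below is a minimal illustration: the alphabet ordering and the name `one_hot` are assumptions, and the sequences are hypothetical fragments. BLOSUM-62, ESM-2, and IgFold features require the corresponding matrix or software and are not reproduced here.

```python
import numpy as np

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"          # assumed ordering of the alphabet
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}

def one_hot(seq):
    """Encode a sequence as a flattened vector of length len(seq) * 20."""
    x = np.zeros((len(seq), len(AMINO_ACIDS)))
    for pos, aa in enumerate(seq):
        x[pos, AA_INDEX[aa]] = 1.0            # one active entry per position
    return x.ravel()

# Two hypothetical 3-residue fragments that share two positions
x_a = one_hot("ARD")
x_b = one_hot("ARE")
shared_positions = float(x_a @ x_b)
```

The inner product of two such vectors counts matching positions, which is why overlap-style and Tanimoto kernels pair naturally with this encoding.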

Step 3: Surrogate Model Configuration and Training

- Select a kernel: Based on the chosen representation, select a corresponding kernel from Table 1. For example, use the ESM-M kernel for ESM-2 embeddings.

- Configure the Gaussian Process: Use the selected kernel and a zero-mean function. The hyperparameters ( \theta = (\sigma_f^2, \ell_1, \ldots, \ell_d, \sigma_n^2) ) (signal variance, length-scales, and noise variance) must be inferred from the data.

- Train the model: Optimize the hyperparameters by maximizing the marginal log-likelihood of the observed data: [ \log p(\mathbf{y} | \mathbf{X}, \theta) = -\frac{1}{2} \mathbf{y}^T (\mathbf{K}_{\theta} + \sigma_n^2\mathbf{I})^{-1} \mathbf{y} - \frac{1}{2} \log |\mathbf{K}_{\theta} + \sigma_n^2\mathbf{I}| - \frac{n}{2} \log 2\pi ] This is typically done using a gradient-based optimizer like L-BFGS [33] [34].
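The marginal log-likelihood in the training step can be evaluated with a single Cholesky factorization, as in the minimal sketch below. The function name and toy data are assumptions. For brevity, a coarse grid search over the noise variance stands in for the gradient-based optimizer mentioned above; it is not how the cited frameworks fit their hyperparameters.

```python
import numpy as np

def log_marginal_likelihood(K, y, noise_var):
    """log p(y | X, theta) for a zero-mean GP with kernel matrix K."""
    n = y.shape[0]
    L = np.linalg.cholesky(K + noise_var * np.eye(n))
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))   # (K + s2 I)^{-1} y
    data_fit = -0.5 * (y @ alpha)
    # log|A| = 2 * sum(log(diag(L))), so -0.5 * log|A| = -sum(log(diag(L)))
    complexity = -np.sum(np.log(np.diag(L)))
    constant = -0.5 * n * np.log(2.0 * np.pi)
    return float(data_fit + complexity + constant)

# Toy kernel matrix and centered observations (made-up numbers)
K = np.array([[1.0, 0.6, 0.2],
              [0.6, 1.0, 0.6],
              [0.2, 0.6, 1.0]])
y = np.array([0.5, 0.1, -0.6])

# Grid search over the noise variance as a stand-in for L-BFGS
noise_grid = [1e-3, 1e-2, 1e-1, 1.0]
best_noise = max(noise_grid, key=lambda s2: log_marginal_likelihood(K, y, s2))
```

The three terms map one-to-one onto the formula: a data-fit term, a complexity penalty from the log-determinant, and a normalization constant.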

Step 4: Bayesian Optimization Loop and Candidate Selection

- Define an acquisition function: The Expected Improvement (EI) is a common choice. For a surrogate model providing posterior mean ( \mu(\mathbf{x}) ) and variance ( \sigma^2(\mathbf{x}) ), EI is defined as: [ EI(\mathbf{x}) = (\mu(\mathbf{x}) - f(\mathbf{x}^+) - \xi)\Phi(Z) + \sigma(\mathbf{x})\phi(Z) ] where ( Z = \frac{\mu(\mathbf{x}) - f(\mathbf{x}^+) - \xi}{\sigma(\mathbf{x})} ), ( f(\mathbf{x}^+) ) is the best-observed value, ( \xi ) is a trade-off parameter, and ( \Phi ) and ( \phi ) are the standard normal CDF and PDF, respectively [28] [33].

- Propose new candidates: Find the sequence ( \mathbf{x} ) that maximizes the acquisition function. This is a combinatorial optimization problem over the CDRH3 sequence space. AntBO uses a trust region to restrict the search to sequences within a bounded Hamming distance from the current best performer [28].

- Iterate: The proposed candidate is synthesized, experimentally characterized (e.g., binding affinity measured), and the new data point is added to the training set. The GP surrogate is retrained, and the loop repeats until the experimental budget is exhausted or performance converges.
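The candidate-selection logic of this step can be sketched with the standard library. The code below implements the Expected Improvement formula given above and picks the best-scoring sequence inside a fixed-radius Hamming ball around the incumbent. This is a simplified stand-in for AntBO's trust region, whose radius handling and search procedure are not reproduced here. The `predict` callable, the candidate pool, and the toy predictions are hypothetical.

```python
import math

def expected_improvement(mu, sigma, best, xi=0.01):
    """EI for maximization, given posterior mean mu and standard deviation sigma."""
    improvement = mu - best - xi
    if sigma <= 0.0:
        return max(improvement, 0.0)          # no uncertainty left
    z = improvement / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return improvement * cdf + sigma * pdf

def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))

def select_candidate(candidates, predict, best_seq, best_value, radius, xi=0.01):
    """Return the EI maximizer among candidates within `radius` of best_seq.

    predict : callable mapping a sequence to (mu, sigma); a placeholder for
              the trained GP surrogate.
    """
    in_region = [s for s in candidates if 0 < hamming(s, best_seq) <= radius]
    if not in_region:
        return None
    return max(in_region,
               key=lambda s: expected_improvement(*predict(s), best_value, xi))

# Toy surrogate predictions for three hypothetical sequences
toy_posterior = {"ARDW": (0.9, 0.30), "ARKY": (1.0, 0.05), "GGGG": (2.0, 0.50)}
chosen = select_candidate(list(toy_posterior), toy_posterior.get,
                          best_seq="ARDY", best_value=1.0, radius=1)
```

In this toy case the sequence with the highest predicted mean lies outside the trust region and is never scored, and among the remaining two the more uncertain one wins, which shows the exploration side of the trade-off.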

The following diagram illustrates the complete Bayesian optimization workflow for antibody design.

Diagram 1: Bayesian Optimization Workflow for Antibody Design. The process iterates between experimental measurement and model-based candidate proposal.

Advanced Protocol: Integration with Generative Models (CloneBO)

For enhanced efficiency, the standard GP-BO can be integrated with a generative model prior, as in the CloneBO framework [35]. The supplemental protocol is as follows:

- Train a generative model: Train a large language model (e.g., CloneLM) on hundreds of thousands of clonal families of naturally evolving antibody sequences. This model learns the distribution ( p(X | \text{clone}) ) of mutations that lead to functional improvements in nature [35].

- Incorporate the prior: Use the generative model to build an informed prior ( p(f | X_0) ) for the Bayesian optimization process. This biases the search towards biologically plausible and fitter sequences.

- Condition on data with SMC: Employ a Twisted Sequential Monte Carlo (SMC) procedure to condition the generative proposals from CloneLM on the experimental measurements ( (X_{1:N}, Y_{1:N}) ), ensuring that proposed sequences are both biologically informed and likely to improve the target property [35].

Expected Outcomes and Performance Benchmarks

When implemented correctly, the GP-BO pipeline is highly data-efficient. The following table summarizes quantitative performance data from published studies.

Table 3: Benchmarking Performance of GP-Based Antibody Optimization

| Framework / Study | Optimization Target | Key Performance Metric | Result |
|---|---|---|---|
| AntBO [28] [32] | CDRH3 binding affinity | Number of oracle calls to outperform 6.9M experimental sequences | < 200 calls |
| AntBO [28] | CDRH3 binding affinity | Number of designs to find very high affinity binder | 38 designs |
| Formulation BO [20] | mAb formulation (3 properties) | Number of experiments to identify optimized conditions | 33 experiments |
| CloneBO [35] | Binding and stability (in silico) | Optimization efficiency vs. state-of-the-art | Substantial improvement |

Troubleshooting and Technical Notes

- Poor Model Fit: If the GP surrogate fails to accurately model the objective function, verify the sequence representation and kernel choice. Consider using a hybrid kernel or a more expressive representation like ESM-2 embeddings [23].

- Slow Optimization: The combinatorial optimization of the acquisition function can be slow. The use of a trust region, as in AntBO, dramatically reduces the search space and improves speed [28].

- Multi-objective Trade-offs: For problems with competing objectives (e.g., affinity vs. stability), a single composite objective might be insufficient. Implement a multi-output GP and use a multi-objective acquisition function like Expected Hypervolume Improvement [20].
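For the multi-objective case, the quantity underlying Expected Hypervolume Improvement can be illustrated in two dimensions. The sketch below computes the Pareto front, the dominated hypervolume, and the deterministic hypervolume gain from adding one candidate, with both objectives maximized. The full acquisition function averages this gain over the GP posterior, which is not shown. All function names, the reference point, and the scores are assumptions chosen for illustration.

```python
def pareto_front(points):
    """Non-dominated subset of 2-D points when both objectives are maximized."""
    front = []
    for p in points:
        dominated = any(q[0] >= p[0] and q[1] >= p[1] and q != p for q in points)
        if not dominated:
            front.append(p)
    return sorted(set(front))

def hypervolume_2d(points, ref):
    """Area dominated by the points and bounded below by the reference point."""
    front = [p for p in pareto_front(points) if p[0] > ref[0] and p[1] > ref[1]]
    # On a Pareto front, descending first objective means ascending second one
    front.sort(key=lambda p: p[0], reverse=True)
    area, prev_y = 0.0, ref[1]
    for x, y in front:
        area += (x - ref[0]) * (y - prev_y)   # horizontal strip added by this point
        prev_y = y
    return area

def hypervolume_improvement(candidate, points, ref):
    """Gain in dominated area if `candidate` were added to `points`."""
    return hypervolume_2d(points + [candidate], ref) - hypervolume_2d(points, ref)

# Made-up (affinity score, stability score) pairs for four measured designs
observed = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0), (1.5, 1.5)]
ref_point = (0.0, 0.0)
gain = hypervolume_improvement((2.5, 2.5), observed, ref_point)
```

A candidate that is dominated by an existing design yields zero gain, so this criterion rewards only designs that extend the trade-off frontier.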

The following diagram illustrates the core architecture of the AntBO system, highlighting the role of the Gaussian process and the combinatorial trust region.

Diagram 2: AntBO Combinatorial Optimization Architecture. The trust region focuses the search on sequences near the current best performer.

Antibody therapeutics represent the fastest-growing class of drugs, with applications spanning oncology, autoimmune diseases, and infectious diseases [36]. A fundamental challenge in developing these biologics lies in optimizing initial antibody candidates to achieve sufficient binding affinity and stability while maintaining developability properties. Traditional methods often struggle with the combinatorial vastness of sequence space, frequently failing to identify suitable candidates within practical experimental budgets [36].