Integrating Multi-Omic Data in Immunology: A Comprehensive Guide to MOFA+, Seurat, and Harmony Frameworks


Abstract

The complexity of the immune system, characterized by extraordinary cellular diversity and dynamic responses, demands analytical approaches that can holistically integrate multiple layers of molecular information. This article provides researchers, scientists, and drug development professionals with a comprehensive guide to three leading multi-omic integration frameworks: MOFA+, Seurat, and Harmony. We explore the foundational principles of these statistical and computational tools, detail their methodological application to immunological questions (from characterizing innate immune cells at CNS borders to profiling T-cell activation), and offer practical troubleshooting strategies for overcoming common bottlenecks related to data heterogeneity, computational scaling, and reproducibility. Finally, we present a comparative analysis of their performance against emerging methods and illustrate their utility through case studies in immunology and therapeutic development.

Understanding the Need for Multi-Omic Integration in Modern Immunology

Frequently Asked Questions (FAQs)

Q1: What are the main categories of data integration methods, and how do I choose? Data integration methods can be broadly divided into four categories, each with different strengths and resource requirements [1].

Table: Categories of Data Integration Methods

| Method Type | Key Principle | Examples | Best For |
|---|---|---|---|
| Linear Embedding Models | Uses singular value decomposition and local neighborhoods for correction [1]. | Harmony, Seurat, Scanorama [1] | Simple batch correction tasks [1]. |
| Deep Learning Approaches | Uses autoencoder networks, often conditioned on batch covariates [1]. | scVI, scANVI, scGen [1] | Complex data integration tasks [1]. |
| Graph-based Methods | Uses nearest-neighbor graphs, forcing connections between batches [1]. | BBKNN [1] | Fast runtime on smaller datasets [2]. |
| Global Models | Models batch effect as a consistent additive/multiplicative effect [1]. | ComBat [1] | - |

Q2: I'm getting a Harmony error in my Seurat v5 pipeline. What should I check?

A common error when using IntegrateLayers with HarmonyIntegration in Seurat v5 is: "Error in names(groups) <- "group" : attempt to set an attribute on NULL" [3]. This is often related to how cell identities are set before subsetting. To resolve this:

- Check Active Identities: Before subsetting your Seurat object, ensure that the active identities (Idents) are set correctly. The error can occur if you have changed the active identity from the default seurat_clusters to another grouping (like RNA_snn_res.0.3) and then attempt to subset and integrate [3].

- Verification Step: Re-run your subsetting and check the object's metadata to confirm that the intended clusters are present before proceeding to normalization and integration.

Q3: How does MOFA differ from standard PCA? MOFA is a statistically rigorous generalization of Principal Component Analysis (PCA) for multi-omics data [4]. While PCA reduces dimensionality for a single data matrix, MOFA infers a set of latent factors from multiple data matrices (omics layers) simultaneously. These factors capture the principal sources of variation, revealing which are shared across omics and which are specific to a single modality [4].

Q4: My Harmony algorithm isn't converging and shows a warning. What does this mean?

The warning "did not converge in 25 iterations" indicates that Harmony's iterative process has not stabilized [5]. The algorithm uses a soft k-means clustering approach and may require more iterations to find a stable solution where cell cluster assignments are no longer strongly dependent on dataset origin [5] [2]. You can typically increase the maximum number of Harmony iterations to give the algorithm more time to converge; this parameter is max.iter.harmony in older releases and max_iter in Harmony 1.0 and later.
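To make the convergence logic concrete, here is a minimal pure-Python sketch of soft k-means, the kind of clustering Harmony iterates. It is a simplified illustration, not Harmony's code: the function name and its sigma, max_iter, and tol arguments are hypothetical, and Harmony's diversity penalty (theta) is omitted. The loop ends either when the centroids stop moving or when the iteration cap is reached, which is the situation a non-convergence warning reports.

```python
import math


def soft_kmeans(points, centroids, sigma=0.1, max_iter=25, tol=1e-6):
    """Soft k-means on lists of coordinates.

    Returns (centroids, responsibilities, converged). Simplified sketch: it
    omits Harmony's diversity penalty (theta) and cosine normalization.
    """
    centroids = [list(c) for c in centroids]
    resp = []
    for _ in range(max_iter):
        # E-step: soft assignment proportional to exp(-squared distance / sigma)
        resp = []
        for p in points:
            d = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            d_min = min(d)  # shift by the minimum for numerical stability
            w = [math.exp(-(x - d_min) / sigma) for x in d]
            total_w = sum(w)
            resp.append([x / total_w for x in w])
        # M-step: each centroid becomes the responsibility-weighted mean
        shift = 0.0
        for k in range(len(centroids)):
            mass = sum(r[k] for r in resp)
            if mass == 0.0:
                continue  # no weight assigned to this cluster; keep old centroid
            new = [sum(r[k] * p[j] for r, p in zip(resp, points)) / mass
                   for j in range(len(points[0]))]
            shift = max(shift, max(abs(a - b) for a, b in zip(new, centroids[k])))
            centroids[k] = new
        if shift < tol:  # centroids stopped moving: converged
            return centroids, resp, True
    return centroids, resp, False  # iteration cap reached before convergence


points = [[0.0], [0.1], [5.0], [5.1]]
print(soft_kmeans(points, [[0.0], [5.0]])[2])              # converged
print(soft_kmeans(points, [[0.0], [5.0]], max_iter=1)[2])  # stopped too early
```

With max_iter=1 the same well-separated data stop before the centroids settle, which is why raising the iteration cap is the first thing to try.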

Troubleshooting Guides

Issue: Poor Mixing of Datasets After Integration

If cells still cluster by batch or dataset after integration instead of by biological cell type, consider the following steps:

- Verify Method Choice: Confirm you are using an appropriate method for your task's complexity. For complex integrations (large protocol differences, non-overlapping cell types), a deep learning method like scVI may be more effective than a linear embedding model [1].

- Check the Batch Covariate: The choice of "batch" covariate determines what variation is removed. Using a fine-grained covariate (e.g., individual samples) may remove meaningful biological signal, while a coarse covariate (e.g., sequencing platform) might leave technical artifacts [1].

- Inspect Input Data: Ensure your data preprocessing (normalization, highly variable feature selection) is consistent and appropriate across all batches.

Issue: Over-correction and Loss of Biological Variation

A key challenge is over-correction, where a method removes genuine biological variation along with the batch effect [1].

- Quantitative Evaluation: Use metrics like the k-nearest-neighbor Batch-Effect Test (kBET) to quantify batch mixing and metrics that measure the conservation of biological variation, which require ground-truth cell identity labels [1].

- Leverage Labels if Available: Some methods, like scANVI and scGen, can use cell identity labels to guide integration and prevent the removal of meaningful biological signal [1].
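kBET asks whether the batch composition of each cell's neighbourhood matches the global batch composition. The pure-Python sketch below is a simplified version under stated assumptions: exactly two batches, brute-force Euclidean neighbours, a fixed k, and a chi-square test with one degree of freedom. The function name is hypothetical; the published kBET R package handles more batches and chooses the neighbourhood size differently.

```python
import math


def kbet_rejection_rate(embedding, batches, k=5, alpha=0.05):
    """Simplified kBET for exactly two batches (chi-square test, 1 d.f.).

    For each cell, compares batch counts among its k nearest neighbours with
    the counts expected from the global batch frequencies and returns the
    fraction of cells whose neighbourhood test is rejected (0 = well mixed).
    """
    labels = sorted(set(batches))
    if len(labels) != 2:
        raise ValueError("this sketch handles exactly two batches")
    n = len(embedding)
    global_frac = [batches.count(b) / n for b in labels]
    rejected = 0
    for i, x in enumerate(embedding):
        # brute-force k nearest neighbours, excluding the cell itself
        dists = sorted(
            (sum((a - b) ** 2 for a, b in zip(x, y)), j)
            for j, y in enumerate(embedding) if j != i
        )
        neigh = [batches[j] for _, j in dists[:k]]
        stat = sum((neigh.count(b) - k * f) ** 2 / (k * f)
                   for b, f in zip(labels, global_frac))
        p_value = math.erfc(math.sqrt(stat / 2))  # chi-square survival, 1 d.f.
        if p_value < alpha:
            rejected += 1
    return rejected / n


mixed = [[float(i)] for i in range(20)]
mixed_batch = ["A" if i % 2 == 0 else "B" for i in range(20)]
separate = [[float(i)] for i in range(10)] + [[100.0 + i] for i in range(10)]
separate_batch = ["A"] * 10 + ["B"] * 10
print(kbet_rejection_rate(mixed, mixed_batch))        # low: batches interleaved
print(kbet_rejection_rate(separate, separate_batch))  # high: batches apart
```

A rejection rate near 0 indicates well-mixed neighbourhoods and a rate near 1 indicates batch-specific ones. Because kBET only measures mixing, pair it with a biological-conservation metric so that over-correction is not mistaken for success.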

Issue: Computational Limitations with Large Datasets

When working with hundreds of thousands of cells, some methods become computationally infeasible.

- Benchmark for Scaling: Harmony has been demonstrated to integrate up to 500,000 cells on a personal computer, requiring significantly less memory and time than other methods like Seurat's MultiCCA or MNN Correct [2].

- Consider Federated Learning: For extremely large or privacy-sensitive distributed data, "Federated Harmony" provides a framework to perform integration without sharing raw data, reducing computational strain on any single machine [6].

Experimental Protocols

Protocol 1: Basic Multi-omics Integration Workflow with MOFA2 (R)

This protocol outlines the steps for an unsupervised integration of multiple omics data types (e.g., mutations, RNA expression, DNA methylation) using the MOFA2 R package [7].

- Data Preparation: Format your multi-omics data into a MultiAssayExperiment object or a list of matrices, with features as rows and samples as columns. MOFA can handle missing data for samples not profiled with all omics [4].

- Model Training: Create a MOFA object with create_mofa, set the data, model, and training options with prepare_mofa, and train the model with run_mofa, which infers the latent factors.

- Downstream Analysis: Use the trained model for:

- Visualization: Plot the factor values for samples to identify continuous gradients or subgroups.

- Factor Interpretation: Examine the loadings to identify which features (e.g., genes, methylation sites) drive each factor.

- Annotation: Perform gene set enrichment analysis on the highly weighted features in relevant factors [7].
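The factor-interpretation and annotation steps can be sketched in a few lines: rank features by absolute loading (strong negative loadings matter as much as positive ones), then test whether a gene set is over-represented among the top features. This pure-Python example uses a hypergeometric over-representation test as a simple stand-in for full gene set enrichment analysis; it is not MOFA2's own enrichment routine, and the loadings and gene set are hypothetical.

```python
import math


def top_features(weights, n_top):
    """Return the n_top feature names with the largest absolute loading."""
    ranked = sorted(weights.items(), key=lambda kv: -abs(kv[1]))
    return [name for name, _ in ranked[:n_top]]


def hypergeom_enrichment_p(hits, gene_set, universe):
    """One-sided over-representation p-value, P(X >= overlap), hypergeometric null."""
    N = len(universe)                # all tested features
    K = len(gene_set & universe)     # gene-set members among them
    n = len(hits)                    # top-loading features drawn
    k = len(set(hits) & gene_set)    # observed overlap
    tail = sum(math.comb(K, i) * math.comb(N - K, n - i)
               for i in range(k, min(K, n) + 1))
    return tail / math.comb(N, n)


# Hypothetical loadings of one factor on six features
weights = {"G1": 2.0, "G2": -1.8, "G3": 0.1, "G4": 0.05, "G5": 1.5, "G6": 0.0}
hits = top_features(weights, 3)  # ['G1', 'G2', 'G5']
print(hypergeom_enrichment_p(hits, {"G1", "G2", "G5"}, set(weights)))  # 0.05
```

In practice, test many gene sets and correct the resulting p-values for multiple testing.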

Protocol 2: Single-Cell RNA-Seq Integration with Seurat and Harmony

This protocol details the integration of multiple scRNA-seq datasets to correct for batch effects using Seurat and Harmony [5] [8].

- Preprocessing: Create a Seurat object for each dataset and perform standard preprocessing (normalization, identification of highly variable features) individually [8].

- PCA: Merge the objects and run PCA on the merged dataset to obtain an initial low-dimensional embedding [5] [8].

- Harmony Integration: Run Harmony on the PCA embedding to remove batch-specific effects.

- Clustering and UMAP: Use the Harmony-corrected embedding for downstream clustering and UMAP visualization. Cells should now group by cell type rather than batch [2].

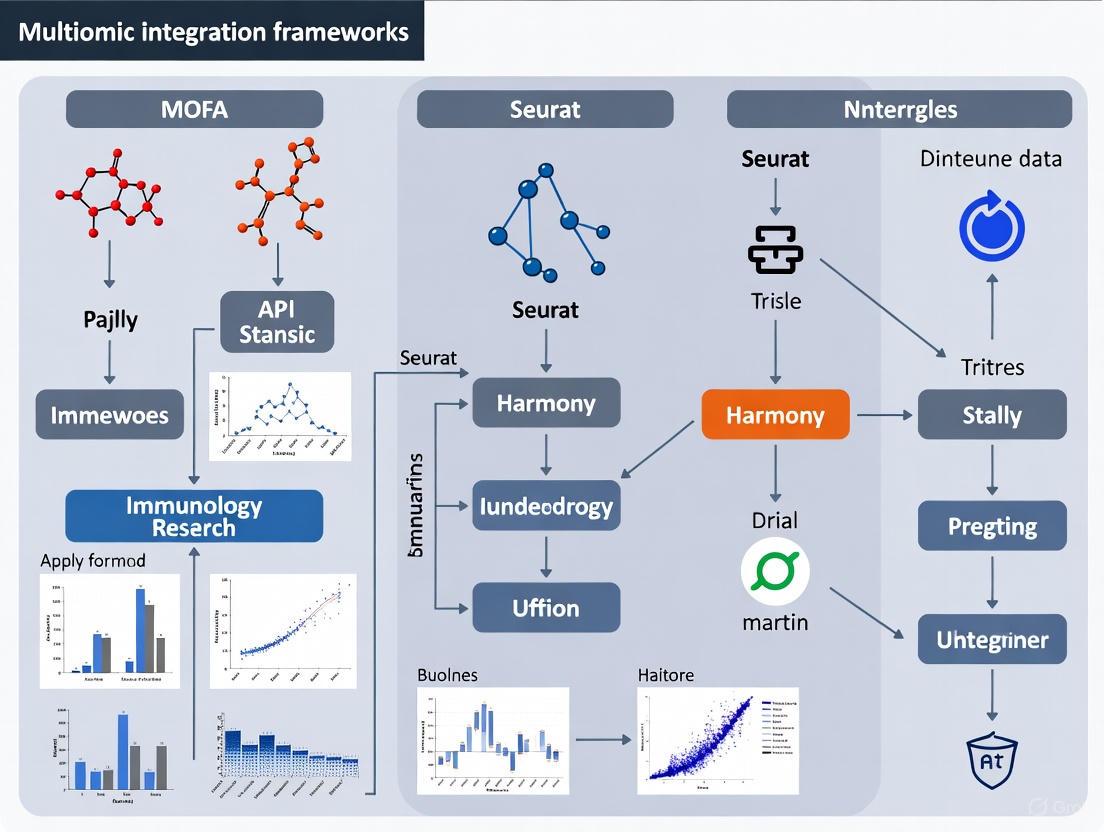

Diagram: MOFA2 Workflow for Multi-omics Integration.

Diagram: Single-Cell Integration with Seurat and Harmony.

Research Reagent Solutions

Table: Essential Materials for Multi-omics Integration Experiments

| Reagent / Resource | Function in Analysis | Example or Note |
|---|---|---|
| 10x Genomics Chromium | Platform for generating single-cell or single-nucleus RNA-seq and multiome (ATAC + GEX) data [2]. | Used in PBMC benchmarks for Harmony [2]. |
| Seurat Object | Primary data container in Seurat; holds count matrix, metadata, and analysis results [8]. | - |
| Chronic Lymphocytic Leukaemia (CLL) Data | A common multi-omics benchmark dataset featuring mutations, RNA, DNA methylation, and drug response [4]. | Used in the original MOFA application [7] [4]. |
| PBMC (Peripheral Blood Mononuclear Cells) | A standard biological system for testing and benchmarking integration methods due to well-defined cell types [2]. | Freely available from 10X Genomics [8]. |

Frequently Asked Questions (FAQs)

Q1: What are the key differences between MOFA+, Seurat, and Harmony for multi-omics integration?

A1: These tools employ distinct mathematical approaches for data integration. MOFA+ is a factor analysis model that identifies latent factors representing shared and specific sources of variation across multiple omics layers [9]. It uses a Bayesian framework with Automatic Relevance Determination priors to jointly model variation across sample groups and data modalities. Seurat employs an "anchor-based" integration workflow to find mutual nearest neighbors between datasets, enabling the creation of a shared embedding [10]. Harmony utilizes an iterative algorithm that projects cells into a shared embedding where cells group by cell type rather than dataset-specific conditions, using soft clustering and linear correction functions [2].

Q2: How do I choose between unsupervised (MOFA) and supervised (DIABLO) integration methods?

A2: The choice depends on your research question and whether you have predefined outcome variables. MOFA is ideal for exploratory analysis to discover inherent sources of variation without prior knowledge of sample groups [11]. DIABLO is preferable when you want to identify multi-omics patterns associated with specific clinical outcomes or predefined sample classes [11]. In practice, using both methods complementarily can provide comprehensive insights, as demonstrated in chronic kidney disease research where both approaches identified shared pathways despite different methodologies [11].

Q3: What are the minimum computational requirements for analyzing large-scale single-cell multi-omics datasets?

A3: Requirements vary by tool. Harmony is particularly efficient, capable of integrating ~10⁶ cells on a personal computer with minimal memory requirements (0.9GB for 30,000 cells to 7.2GB for 500,000 cells) [2]. MOFA+ implements stochastic variational inference enabling GPU acceleration, achieving up to 20-fold speed increases compared to conventional inference methods [9]. For very large datasets (>100,000 cells), Seurat and MOFA+ both offer scalable solutions, but may require high-performance computing resources for optimal performance [9] [10].

Q4: How can I validate that my multi-omics integration has preserved biological variation while removing technical artifacts?

A4: Use quantitative metrics like Local Inverse Simpson's Index (LISI) to assess integration quality [2]. Integration LISI (iLISI) measures dataset mixing, while cell-type LISI (cLISI) measures biological separation. Additionally, inspect known biological markers across integrated clusters to ensure they remain coherent. For factor models like MOFA+, examine the variance decomposition to verify that factors capture biologically meaningful patterns across modalities [9].
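The idea behind iLISI and cLISI can be shown with a simplified pure-Python calculation: for each cell, take its nearest neighbours and compute the inverse Simpson's index of their labels, i.e. the effective number of distinct labels. The published LISI weights neighbours with a perplexity-calibrated Gaussian kernel; this sketch assumes uniform weights over k neighbours, and the function name and toy data are hypothetical.

```python
from collections import Counter


def simple_lisi(embedding, labels, k=10):
    """Per-cell inverse Simpson's index of `labels` among the k nearest cells.

    Simplification: uniform weights over k neighbours (the cell itself
    included) instead of LISI's perplexity-calibrated Gaussian kernel.
    """
    scores = []
    for x in embedding:
        nearest = sorted(
            (sum((a - b) ** 2 for a, b in zip(x, y)), j)
            for j, y in enumerate(embedding)
        )[:k]
        counts = Counter(labels[j] for _, j in nearest)
        simpson = sum((c / k) ** 2 for c in counts.values())
        scores.append(1.0 / simpson)  # 1 = a single label; max = number of labels
    return scores


# Toy embedding: two cell types, each containing one cell from each batch
emb = [[0.0], [0.1], [10.0], [10.1]]
batch = ["A", "B", "A", "B"]
cell_type = ["T cell", "T cell", "B cell", "B cell"]
print(simple_lisi(emb, batch, k=2))      # iLISI: 2.0 everywhere (fully mixed)
print(simple_lisi(emb, cell_type, k=2))  # cLISI: 1.0 everywhere (types separate)
```

Computed on batch labels this gives iLISI (higher means better mixing, up to the number of batches); computed on cell-type labels it gives cLISI (values near 1 mean cell types stay separate).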

Q5: What strategies exist for integrating single-cell data with bulk omics measurements or spatial transcriptomics?

A5: Multiple approaches exist depending on data types. Harmony has demonstrated capabilities for cross-modality spatial integration [2]. MOFA+ can integrate single-cell modalities with bulk measurements when they share sample origins [9]. The emerging MEFISTO extension incorporates temporal or spatial dependencies in multi-omics integration [12]. For spatial transcriptomics integration with single-cell data, methods like Seurat's integration with spatial mapping enable cell-type localization in tissues [10].

Troubleshooting Guides

Issue 1: Poor Integration Quality Across Datasets

Symptoms: Cells cluster primarily by dataset origin rather than cell type; biological signals are obscured.

Solution:

- Preprocessing consistency: Ensure consistent normalization across all datasets

- Feature selection: Use highly variable features that are robust across batches

- Parameter optimization: Adjust the parameters that control integration strength, such as Harmony's theta and lambda (see Table 2).

Verification: Calculate LISI metrics after integration. Successful integration should show high iLISI (good dataset mixing) while keeping cLISI low, close to 1 (cell types remain separated) [2].

Issue 2: Failure to Identify Biologically Meaningful Factors in MOFA+

Symptoms: Factors explain technical variance but not biological processes; factors don't align with known biology.

Solution:

- Input normalization: Ensure proper normalization per modality (e.g., TF-IDF for chromatin data, log-normalization for RNA)

- Factor number selection: Use the evidence lower bound (ELBO) or automatic relevance determination to select appropriate factor numbers [9]

- Variance decomposition: Examine the variance explained by factors across modalities and groups to identify shared and specific signals

- Biological interpretation: Use known marker genes and pathway enrichment to annotate factors biologically

Advanced Tip: For temporal data, use MEFISTO which incorporates temporal smoothness constraints to improve factor interpretability [12].
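The TF-IDF normalization recommended above for chromatin data can be illustrated with a small pure-Python function. Implementations differ in details such as where the logarithm is applied and the scale factor, so treat this as one common variant under stated assumptions rather than the formula any particular package uses; the function name and toy counts are hypothetical.

```python
import math


def tfidf(counts, scale_factor=1e4):
    """TF-IDF for a cells x peaks count matrix given as a list of lists.

    Variant shown: log1p(TF * IDF * scale_factor), where
    TF  = peak count / total counts in that cell, and
    IDF = number of cells / number of cells in which the peak is detected.
    """
    n_cells, n_peaks = len(counts), len(counts[0])
    detected = [sum(1 for row in counts if row[j] > 0) for j in range(n_peaks)]
    idf = [n_cells / d if d else 0.0 for d in detected]
    out = []
    for row in counts:
        total = sum(row)
        out.append([math.log1p(v / total * idf[j] * scale_factor) if total else 0.0
                    for j, v in enumerate(row)])
    return out


# Peak 0 is open in both cells, peak 1 only in the second cell
m = tfidf([[1, 0], [1, 1]])
print([[round(v, 3) for v in row] for row in m])
```

Within the second cell, the peak detected in only one cell receives a larger value than the peak open in every cell, which is the intended down-weighting of ubiquitous peaks.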

Issue 3: Memory or Computational Limitations with Large Datasets

Symptoms: Analysis fails due to memory errors; excessive computation time.

Solution:

- Dataset size:

- Feature reduction:

- Pre-filter lowly abundant features

- Use highly variable features rather than all genes

- Computational resources:

- For MOFA+: Enable GPU acceleration when available [9]

- Increase memory allocation or use distributed computing
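A quick calculation shows why feature reduction matters. Assuming a dense matrix of 8-byte floating-point values (raw count matrices are usually stored sparse and need less) and an illustrative 20,000 genes, the memory needed for 500,000 cells drops sharply when moving to 2,000 highly variable genes or a 30-dimensional PCA embedding:

```python
def dense_matrix_gb(n_cells, n_features, bytes_per_value=8):
    """Memory in GB (1e9 bytes) of a dense cells x features float64 matrix."""
    return n_cells * n_features * bytes_per_value / 1e9


# Illustrative sizes: all genes vs 2,000 HVGs vs a 30-dimensional embedding
for label, n_features in [("all genes", 20_000), ("2,000 HVGs", 2_000), ("30 PCs", 30)]:
    print(f"{label}: {dense_matrix_gb(500_000, n_features):.2f} GB")
# all genes: 80.00 GB
# 2,000 HVGs: 8.00 GB
# 30 PCs: 0.12 GB
```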

Table 1: Computational Requirements Comparison

| Tool | Maximum Dataset Size | Memory Efficiency | GPU Support | Integration Approach |
|---|---|---|---|---|
| Harmony | ~1 million cells | High (7.2GB for 500k cells) | No | Iterative linear correction |
| MOFA+ | Hundreds of thousands of cells | Moderate | Yes (Stochastic VI) | Bayesian factor analysis |
| Seurat | Hundreds of thousands of cells | Moderate | Limited | Anchor-based integration |
| DIABLO | Moderate (~100s of samples) | Moderate | No | Multivariate supervised |

Issue 4: Inconsistent Cell Type Annotation Across Modalities

Symptoms: Different modalities suggest different cell-type assignments; conflicting marker expression.

Solution:

- Cross-modality validation:

- Use conserved marker detection (e.g., Seurat's FindConservedMarkers) [10]

- Transfer labels from well-annotated modalities to others

- Employ symmetric integration approaches that weight modalities equally

- Reference mapping:

- Map to established references like Azimuth [13]

- Use manual annotation based on canonical markers from literature

- Multi-level annotation:

- Implement hierarchical annotation (L1, L2) as used in OASIS atlas [13]

- Distinguish broad cell types from fine-grained states

Verification: Check expression of known marker genes across modalities in integrated space. Use cross-validation with held-out datasets.

Issue 5: Handling Missing Data or Unbalanced Modalities

Symptoms: Some samples missing certain modalities; uneven quality across data types.

Solution:

- MOFA+ handling: MOFA+ naturally handles missing modalities by learning from available data [9]

- Imputation approaches: Use cross-modality imputation (e.g., Seurat's bridge integration) when appropriate

- Weighting strategies: Adjust modality weights based on data quality and biological importance

- Subsetting: Analyze complete cases only for certain questions, then validate findings in full dataset

Experimental Protocols

Protocol 1: Basic Multi-Omics Integration with MOFA+

Application: Integrating scRNA-seq with scATAC-seq or DNA methylation data from the same cells [9].

Workflow:

- Input preparation: Create a MOFA+ object with multiple assays (views) and sample groups

- Data preprocessing: Normalize each modality appropriately (e.g., log+CPM for RNA, TF-IDF for ATAC)

- Model training: Set up and train the MOFA+ model with appropriate options:

- Specify number of factors (start with 5-15)

- Enable stochastic inference for large datasets

- Set sparsity options to encourage interpretability

- Model evaluation: Examine variance explained, ELBO convergence, and factor correlation

- Downstream analysis:

- Use factors for visualization (UMAP/t-SNE)

- Identify driving features per factor

- Perform gene set enrichment on factor weights
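The variance-explained check in the model-evaluation step can be reproduced by hand. The pure-Python sketch below assumes centered data and uses the per-view coefficient of determination, R2_k = 1 - sum((y - z_k w_k)^2) / sum(y^2), the definition used for MOFA-style variance decomposition; the function names, factor values, and weights are made up for illustration.

```python
def r2_per_factor(Y, Z, W):
    """Fraction of variance in one centered view explained by each factor.

    Y: samples x features, Z: samples x factors, W: features x factors.
    R2_k = 1 - sum((Y - z_k w_k^T)^2) / sum(Y^2)
    """
    total = sum(v * v for row in Y for v in row)
    r2 = []
    for k in range(len(Z[0])):
        resid = sum((Y[i][d] - Z[i][k] * W[d][k]) ** 2
                    for i in range(len(Y)) for d in range(len(Y[0])))
        r2.append(1.0 - resid / total)
    return r2


def reconstruct(Z, W):
    """Noise-free toy data Y = Z W^T."""
    return [[sum(z * w for z, w in zip(zi, wd)) for wd in W] for zi in Z]


Z = [[1, 0], [-1, 0], [0, 1], [0, -1]]  # 4 samples x 2 factors (toy values)
W_rna = [[1, 0], [2, 0]]                # RNA loads only on factor 1
W_atac = [[0.5, 1]]                     # ATAC loads on both factors
print(r2_per_factor(reconstruct(Z, W_rna), Z, W_rna))    # factor 1 explains RNA fully
print(r2_per_factor(reconstruct(Z, W_atac), Z, W_atac))  # about 0.2 and 0.8
```

Here factor 1 is shared (it explains all of the RNA view and about 20% of the ATAC view), while factor 2 is specific to ATAC. Factors that explain negligible variance in every view are candidates for removal.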

MOFA+ Analysis Workflow

Protocol 2: Multi-Group Integration with Harmony

Application: Integrating multiple scRNA-seq datasets across conditions, batches, or technologies [2].

Workflow:

- Initial processing: Normalize and run PCA on each dataset separately

- Integration: Apply Harmony to remove dataset-specific effects:

- Specify batch covariates (e.g., technology, donor)

- Adjust theta (diversity penalty) and lambda parameters

- Run iterative correction until convergence

- Visualization: Generate UMAP/t-SNE embeddings from Harmony coordinates

- Validation:

- Calculate LISI metrics to quantify integration quality

- Check preservation of known biological states

- Verify removal of technical artifacts
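To see what lambda does, consider Harmony's correction step: within each soft cluster, it fits a ridge regression of the embedding on batch indicators, leaving the intercept unpenalized, and subtracts the fitted batch terms. The pure-Python sketch below illustrates this for a single cluster that contains every cell; the function names are hypothetical and the code is a simplified illustration, not Harmony's implementation.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def ridge_batch_correct(embedding, batches, resp, lam=1.0):
    """Harmony-style linear correction for ONE soft cluster (sketch).

    Fits z ~ intercept + batch indicators with cell weights `resp` and a ridge
    penalty `lam` on the batch coefficients only, then subtracts each cell's
    weighted batch term. The intercept (shared biology) is left in place.
    """
    labels = sorted(set(batches))
    p = len(labels) + 1
    X = [[1.0] + [1.0 if b == lab else 0.0 for lab in labels] for b in batches]
    A = [[sum(r * x[a] * x[c] for x, r in zip(X, resp))
          + (lam if a == c and a > 0 else 0.0) for c in range(p)]
         for a in range(p)]
    corrected = [list(z) for z in embedding]
    for dim in range(len(embedding[0])):
        rhs = [sum(r * x[a] * z[dim] for x, r, z in zip(X, resp, embedding))
               for a in range(p)]
        beta = solve(A, rhs)
        for i, x in enumerate(X):
            batch_term = sum(beta[a] * x[a] for a in range(1, p))
            corrected[i][dim] -= resp[i] * batch_term
    return corrected


emb = [[0.0], [0.0], [4.0], [4.0]]   # batch A sits at 0, batch B at 4
batch = ["A", "A", "B", "B"]
resp = [1.0] * 4                      # a single cluster containing every cell
for lam in (0.01, 1.0, 100.0):
    out = ridge_batch_correct(emb, batch, resp, lam)
    print(lam, round(out[2][0] - out[0][0], 3))  # remaining B - A gap
```

The remaining gap grows with lam (roughly 0.02, 1.33, and 3.92 here; analytically 4λ/(2+λ) for this toy case), consistent with the guideline that a larger λ gives a more conservative correction.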

Protocol 3: Cross-Modality Integration with Seurat

Application: Mapping scRNA-seq data to spatial transcriptomics or integrating CITE-seq data [10] [2].

Workflow:

- Layer separation: Split the Seurat object's data into layers by condition or batch using the split function [10].

- Anchor identification and integration: In the Seurat v4 workflow, find integration anchors with FindIntegrationAnchors and integrate with IntegrateData; in Seurat v5, IntegrateLayers finds anchors and integrates the split layers in a single call.

- Joint analysis:

- Perform PCA on integrated data

- Run UMAP for visualization

- Identify conserved markers across conditions

- Differential analysis: Compare conditions within integrated cell types

Table 2: Key Parameters for Multi-Omics Integration Tools

| Tool | Critical Parameters | Default Values | Adjustment Guidelines |
|---|---|---|---|
| MOFA+ | Number of factors, Sparsity, Training iterations | Factors: 5-25 | Increase factors for complex systems; adjust sparsity for interpretability |
| Harmony | Theta (θ), Lambda (λ), Max iterations | θ=2, λ=1 | Increase θ for stronger batch correction; increase λ for more conservative correction that protects against overcorrection |
| Seurat | k.anchor, k.filter, k.score | k.anchor=5, k.filter=200, k.score=30 | Increase for datasets with rare cell types; decrease for better integration |
| DIABLO | Number of components, keepX parameters | Components: 2-5 | Optimize using cross-validation; adjust keepX for feature selection |

Protocol 4: Multi-Omics eQTL Mapping at Single-Cell Resolution

Application: Identifying cell-type specific genetic regulation using single-cell data with genotype information [13].

Workflow:

- Cell typing: Annotate cell types using reference mapping or manual annotation

- Pseudobulk creation: Aggregate expression by donor and cell type

- cis-eQTL mapping: Test variant-gene associations within 1Mb of TSS

- Cell-state mapping: Extend analysis to continuous cell states

- Cross-population comparison: Compare eQTL effects across ancestries
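The pseudobulk and cis-window steps are simple bookkeeping, sketched here in pure Python. Assumptions: raw counts are summed per donor and cell type (some pipelines average normalized expression instead), each gene has a single TSS, and the window is ±1 Mb. All names and toy values are hypothetical, and the association test itself is not shown.

```python
from collections import defaultdict


def pseudobulk(cells):
    """Sum counts per (donor, cell type).

    cells: iterable of dicts with keys 'donor', 'cell_type' and 'counts'
    (a gene -> count mapping). Returns {(donor, cell_type): {gene: total}}.
    """
    bulk = defaultdict(lambda: defaultdict(int))
    for cell in cells:
        key = (cell["donor"], cell["cell_type"])
        for gene, n in cell["counts"].items():
            bulk[key][gene] += n
    return {key: dict(genes) for key, genes in bulk.items()}


def cis_pairs(variants, genes, window=1_000_000):
    """Variant-gene pairs on the same chromosome within `window` bp of the TSS.

    variants: {variant_id: (chrom, position)}; genes: {gene: (chrom, tss)}.
    """
    return sorted(
        (v, g)
        for v, (v_chrom, v_pos) in variants.items()
        for g, (g_chrom, tss) in genes.items()
        if v_chrom == g_chrom and abs(v_pos - tss) <= window
    )


# Hypothetical toy input
cells = [
    {"donor": "D1", "cell_type": "CD4 T", "counts": {"GENE_A": 3, "GENE_B": 1}},
    {"donor": "D1", "cell_type": "CD4 T", "counts": {"GENE_A": 2}},
    {"donor": "D2", "cell_type": "B", "counts": {"GENE_C": 4}},
]
print(pseudobulk(cells))
variants = {"var1": ("chr1", 1_500_000), "var2": ("chr1", 3_000_000), "var3": ("chr2", 1_000_000)}
print(cis_pairs(variants, {"GENE_A": ("chr1", 1_000_000)}))  # [('var1', 'GENE_A')]
```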

Single-cell eQTL Mapping Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Multi-Omics Technologies and Their Applications

| Technology | Target Molecules | Key Applications in Immunology | Considerations |
|---|---|---|---|
| 10x Genomics Chromium | RNA, ATAC, protein (CITE-seq) | Immune cell profiling, receptor sequencing | Compatible with single-cell multiome kits |
| Olink Proteomics | 2,925 plasma proteins | Plasma protein quantification with high sensitivity | Requires only 1μL plasma per measurement |
| Single-cell bisulfite sequencing | DNA methylation | Epigenetic heterogeneity in immune cells | Can profile non-CpG methylation in neurons [9] |
| scNMT-seq | Chromatin accessibility (nucleosome occupancy), DNA methylation, transcriptome | Parallel multi-layer profiling from the same cells | Technically challenging; lower throughput |
| CITE-seq | RNA + surface proteins | Immunophenotyping with transcriptomics | ~200 proteins simultaneously with transcriptome |
| SPLiT-seq | RNA (low-cost) | High-throughput screening applications | Fixed cells; lower sequencing depth |
| CRISPR-based screening | Functional genomics | Identify regulators of immune functions | Pooled screens with single-cell readout |

Advanced Integration Strategies

Strategy 1: Leveraging Complementary Integration Approaches

Rationale: Different integration methods have complementary strengths. Using both unsupervised (MOFA) and supervised (DIABLO) approaches on the same dataset can provide more comprehensive insights [11].

Implementation:

- Run MOFA for unsupervised discovery of sources of variation

- Use DIABLO for supervised analysis with clinical outcomes

- Compare results to identify robust signals across methods

- Prioritize pathways and features identified by both approaches

Example: In CKD research, both MOFA and DIABLO identified complement and coagulation cascades, cytokine-cytokine receptor interaction, and JAK/STAT signaling pathways, despite their different mathematical foundations [11].

Strategy 2: Dynamic Genetic Regulation Analysis

Rationale: Genetic effects on gene expression can vary across cell types and states, requiring single-cell resolution to fully capture [13].

Implementation:

- Map eQTLs across finely resolved cell types (L1 and L2 annotations)

- Assess sharing of eQTL effects across related cell types

- Compare eQTL effects across populations (e.g., Japanese vs. European)

- Integrate with GWAS findings through colocalization analysis

Key Finding: Statistical power for eQTL discovery depends more on cell counts per sample than on total sample size, highlighting the importance of profiling enough cells per sample [13].

Strategy 3: Somatic Mutation Integration

Rationale: Somatic mutations in immune cells, such as mosaic chromosomal alterations (mCAs), loss of chromosome Y (LOY), and mitochondrial DNA heteroplasmy, can influence immune function and can be detected at single-cell resolution [13].

Implementation:

- Detect somatic mutations from WGS or SNP array data

- Project mutations onto single-cell transcriptomes

- Identify immune features associated with somatic mutations

- Assess clonal expansion patterns in disease contexts

Application: In COVID-19, somatic mutations in expanded B cell clones were assessed for reactivity against SARS-CoV-2 antigens [13].

Frequently Asked Questions

Q1: I encounter the error "argument 3 matches multiple formal arguments" when running RunHarmony in Seurat. What should I do?

This error often arises from a parameter naming conflict, frequently with the reduction parameter [14]. The Seurat wrapper for Harmony has many function arguments, and a partial argument name might match more than one formal argument.

- Solution: The most straightforward fix is to explicitly name your arguments, using the exact, full parameter names defined in the RunHarmony documentation for your installed version. Double-check that reduction is the correct parameter name for your Seurat and Harmony versions: recent Harmony releases name the input and output reductions reduction.use and reduction.save, so an abbreviated reduction argument can partially match both and trigger this error. In some cases, simply removing a conflicting argument like reduction has resolved the issue, though this is not recommended because the function may then use a default setting you did not intend [14].

Q2: After updating Seurat, I get many warnings about deprecated parameters like tau and block.size in Harmony. Will this affect my results?

These warnings indicate you are using old parameter names that are no longer supported in the updated version of Harmony or its Seurat integration [15]. Your analysis will likely still run, as the warnings state the deprecated parameters will be ignored.

- Solution: Update your code to use the new parameter names; the warnings often name the replacement. For example, tau and block.size are no longer passed directly to RunHarmony and are instead set through harmony_options() [15].

Q3: I cannot reproduce my old Harmony clustering results on the same data. The number and structure of clusters are different. What could be wrong?

Reproducibility issues can stem from several factors, especially across different computing environments [16].

- Solution:

- Set Random Seeds: Use set.seed() before running RunHarmony and any subsequent clustering steps (e.g., FindClusters, RunUMAP) to ensure stochastic processes are reproducible.

- Check Software Versions: Different versions of Seurat, Harmony, or even underlying R packages can alter results. Note the versions used in the original analysis. For instance, the default UMAP method in Seurat changed from a Python implementation to an R-native one, which can change visualization and downstream clustering [16].

- Processor Architecture: Evidence suggests that differences in processor chips (e.g., Intel vs. Apple M-series) can lead to floating-point arithmetic variations, affecting numerical results and thus clustering outcomes [16]. Try to run the analysis on the same architecture as the original.

- Parameter Consistency: Ensure all parameters (e.g., resolution for clustering, number of PCs, dims for RunUMAP and FindNeighbors) are identical to your original run.
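Two of the points above can be illustrated in a few lines of Python. Floating-point addition is not associative, so the same numbers summed in a different order, as different CPUs or math libraries may do, can differ in the last bits; such tiny differences can flip nearest-neighbour ties and propagate into clustering. Seeding, by contrast, makes pseudo-random steps repeatable within one environment (Python's random.seed plays the role of R's set.seed in this sketch).

```python
import random

# Same three numbers, different summation order
left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)
print(left, right, left == right)  # 0.6000000000000001 0.6 False

# Re-seeding reproduces the same pseudo-random draws
random.seed(42)
first = [random.random() for _ in range(3)]
random.seed(42)
second = [random.random() for _ in range(3)]
print(first == second)  # True
```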

Troubleshooting Guides

Problem: Harmony fails to converge or displays a "Quick-TRANSfer stage steps exceeded maximum" warning.

This warning relates to the internal k-means clustering algorithm and may indicate that the algorithm is struggling to find a stable solution [5] [15].

- Diagnosis: This is often a warning, not an error, and the algorithm may still produce usable results. However, it warrants attention.

- Solutions:

- Increase max_iter: Allow the algorithm more iterations to converge.

- Adjust Harmony Parameters: Parameters like theta (diversity clustering penalty) can influence convergence. The deprecated tau was related to this penalty, and its modern equivalent can be set via harmony_options() [15].

- Verify Input Data: Ensure your PCA input is appropriate and does not contain extreme outliers.

Problem: General challenges with multi-omics data integration.

The integration of disparate data types (e.g., transcriptomics, proteomics) is inherently complex [17] [18].

- Diagnosis: Common challenges include:

- Data Heterogeneity: Different omics layers have unique data structures, scales, noise profiles, and batch effects [18].

- Missing Values: Some features may be present in one data modality but absent in another [17].

- Lack of Standardization: No universal pre-processing protocols exist across all omics types [18].

- Solutions:

- Choose the Right Method: Select an integration framework suited to your data and question.

- Thorough Pre-processing: Normalize and scale each data type individually before integration.

- Utilize Supervised Integration: If you have a known outcome (e.g., disease state), methods like DIABLO can use this information to guide integration and feature selection [18].

Multi-Omics Integration Frameworks at a Glance

The following table compares three key integration tools, helping you select the right one for your research goals.

| Framework | Core Methodology | Primary Use Case | Integration Type | Key Feature |
|---|---|---|---|---|
| MOFA+ [18] | Unsupervised Bayesian factor analysis | Identifying hidden sources of variation across multiple omics datasets. | Vertical / Matched | Learns factors that can be shared or specific to data types. |
| Harmony [5] | Iterative clustering with integration | Removing dataset-specific effects for better joint clustering (e.g., batch correction). | Horizontal / Unmatched | Designed for single-cell RNA-seq data, often used within Seurat. |
| DIABLO [18] | Supervised multiblock PLS-DA | Classifying phenotypes using multi-omics data and selecting biomarkers. | Vertical / Matched | Uses phenotype labels to drive integration and feature selection. |

Experimental Workflow for Multi-Omics Integration

A generalized workflow for integrating matched multi-omics samples using a factor-based approach like MOFA+ is outlined below.

Diagram Title: MOFA+ Multi-Omics Integration Workflow

Methodology Details:

- Data Pre-processing: Each omics data matrix (e.g., from RNA-Seq, DNA methylation, proteomics) undergoes individual normalization and scaling to account for technical noise and different data distributions [18].

- Model Training: The MOFA+ model is trained in an unsupervised manner to decompose the multi-omics data into a set of latent factors. These factors represent the principal sources of variation across all datasets and samples [18].

- Variance Decomposition: The model quantifies the percentage of variance explained by each factor in each omics dataset. This helps identify factors that are shared across all modalities or specific to a single data type [18].

- Factor Interpretation: Researchers correlate the latent factors with sample metadata (e.g., clinical outcomes, cell type labels) or use gene set enrichment analysis to attach biological meaning to the discovered sources of variation [18].
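Correlating factors with sample metadata reduces to one correlation per factor. The pure-Python sketch below computes Pearson correlations (equivalent to the point-biserial correlation when the covariate is coded 0/1); the function names, factor values, and disease covariate are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def correlate_factors(Z, covariate):
    """Correlation of each latent factor (column of Z) with one sample covariate."""
    return [pearson([row[k] for row in Z], covariate) for k in range(len(Z[0]))]


Z = [[1.0, 0.3], [2.0, -0.1], [3.0, 0.2], [4.0, -0.4]]  # toy factor values, 4 samples
disease = [0, 0, 1, 1]                                  # hypothetical binary covariate
print([round(r, 3) for r in correlate_factors(Z, disease)])
```

Factor 1 tracks the covariate strongly while factor 2 does not; with real data, also report p-values and adjust for multiple testing across factors and covariates.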

The Scientist's Toolkit: Essential Research Reagents & Materials

| Item | Function in Multi-Omics Research |
|---|---|
| Single-Cell Multi-Omics Platform | Enables correlated measurements of genomics, transcriptomics, and epigenomics from the same cell, providing a matched data structure for vertical integration [19]. |
| High-Throughput Sequencing | Generates the foundational data for genomic (WGS), transcriptomic (RNA-seq), and epigenomic (ChIP-seq, ATAC-seq) analyses [19]. |
| Spatial Transcriptomics Platform | Provides RNA expression data within a morphological context, adding a spatial layer to multi-omics integration [19]. |
| Liquid Biopsy Assay | Allows non-invasive collection of multi-analyte biomarkers (e.g., cfDNA, RNA, proteins) from biofluids, crucial for clinical translation [19]. |

Technical Support Center

FAQs & Troubleshooting Guides

Q: My MOFA model fails to converge when integrating scRNA-seq and CITE-seq data. What could be the cause? A: Non-convergence often stems from improper data scaling or extreme outliers.

- Solution 1: Ensure each data modality is scaled to unit variance. For CITE-seq ADT data, use centered log ratio (CLR) transformation instead of log-normalization.

- Solution 2: Filter out low-quality cells and genes/features with excessive zeros before integration. Check for technical outliers using PCA on each view independently.
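The two transformations mentioned in these solutions can be sketched in pure Python. The CLR function uses a pseudocount-adjusted geometric mean, log1p(x / g) with g = exp(mean(log1p(x))); it can be applied across antibodies within a cell or across cells for each antibody, and toolkits expose this choice (Seurat, for example, through the margin argument of NormalizeData), so check which variant your pipeline uses. Function names and counts are hypothetical.

```python
import math


def clr(values):
    """Centered log-ratio transform with a pseudocount: log1p(x / g),
    where g = exp(mean(log1p(x))) is a pseudocount-adjusted geometric mean."""
    g = math.exp(sum(math.log1p(v) for v in values) / len(values))
    return [math.log1p(v / g) for v in values]


def scale_unit_variance(values):
    """Center a feature and scale it to unit (population) variance."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    return [(v - mean) / sd if sd > 0 else 0.0 for v in values]


adt_one_cell = [0, 10, 100, 1000]  # hypothetical ADT counts for four antibodies
print([round(v, 3) for v in clr(adt_one_cell)])
print([round(v, 3) for v in scale_unit_variance([1.0, 2.0, 3.0])])
```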

Q: After integrating datasets with Harmony, my T cell clusters are still batch-specific. How can I improve integration? A: This indicates strong batch effects that Harmony's default parameters cannot overcome.

- Solution 1: Increase the theta parameter, which controls the strength of batch correction. A higher theta (e.g., 3-5) applies more aggressive correction.

- Solution 2: Pre-filter your features more stringently. Use highly variable genes (HVGs) for transcriptomics and high-variance proteins for proteomics to give the algorithm a stronger signal.
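Highly variable feature selection can be sketched as ranking features by their variance across cells. The pure-Python example below ranks by the variance of log1p-transformed values; this is a deliberate simplification, since Seurat's default (vst) models the mean-variance relationship so that highly expressed genes are not favoured merely for being abundant. Names and data are hypothetical.

```python
import math


def top_variable_features(matrix, feature_names, n_top):
    """Rank features by the sample variance of log1p values across cells.

    matrix: cells x features list of lists of non-negative values (>= 2 cells).
    """
    n_cells = len(matrix)
    scored = []
    for j, name in enumerate(feature_names):
        col = [math.log1p(row[j]) for row in matrix]
        mean = sum(col) / n_cells
        var = sum((v - mean) ** 2 for v in col) / (n_cells - 1)
        scored.append((var, name))
    scored.sort(key=lambda t: -t[0])  # most variable first
    return [name for _, name in scored[:n_top]]


matrix = [[5, 0, 1], [5, 10, 1], [5, 0, 2], [5, 10, 1]]  # 4 cells x 3 features
names = ["flat", "switch", "low"]
print(top_variable_features(matrix, names, 2))  # ['switch', 'low']
```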

Q: I observe a poor correlation between transcriptomic and proteomic measurements for the same surface marker in my Seurat object. Why? A: This is a common biological and technical discrepancy.

- Solution 1 (Technical): Check for antibody non-specificity in your CITE-seq data. Compare to an isotype control or FMO (Fluorescence Minus One) control.

- Solution 2 (Biological): Account for post-transcriptional regulation and protein half-life. The mRNA level may not reflect the current protein abundance. Consider a time-course experiment.
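A quick way to quantify the discrepancy is a per-marker rank correlation between RNA and ADT values across cells. The sketch below computes Spearman's rho in pure Python, using average ranks for ties (common with sparse RNA counts); the per-cell values are hypothetical.

```python
def average_ranks(values):
    """1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman's rho as the Pearson correlation of average ranks.

    Undefined (division by zero) if either vector is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)

# Hypothetical values for one marker: sparse RNA counts vs. CLR-normalized ADT.
rna = [0, 0, 1, 0, 2, 3, 0, 5]
adt = [0.2, 1.1, 1.5, 0.9, 2.0, 2.4, 1.3, 2.8]
print(round(spearman(rna, adt), 3))
```

A rank correlation is used because it is insensitive to the very different scales and distributions of the two modalities.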

Q: My metabolomics data has many missing values, causing issues in multi-omic integration. How should I handle this? A: Metabolomics data is inherently sparse due to limits of detection.

- Solution 1: Apply a minimum detection filter. Remove metabolites that are missing in >80% of samples.

- Solution 2: For remaining missing values, use imputation methods appropriate for metabolomics, such as k-nearest neighbors (KNN) or half-minimum imputation (replacing NA with half the minimum value for that metabolite).
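Both solutions can be sketched together: drop metabolites whose missing fraction exceeds the threshold, then replace the remaining gaps with half of that metabolite's observed minimum. The metabolite names and values are hypothetical, and half-minimum imputation assumes values are missing because they fell below the detection limit.

```python
def filter_and_impute(matrix, max_missing_frac=0.8):
    """Filter sparse metabolites, then half-minimum impute the rest.

    matrix: dict mapping metabolite name -> list of per-sample values,
            with None marking a missing (below-detection) measurement.
    Metabolites missing in more than max_missing_frac of samples are dropped;
    remaining gaps are replaced with half of that metabolite's observed minimum.
    """
    result = {}
    for name, values in matrix.items():
        observed = [v for v in values if v is not None]
        missing_frac = 1 - len(observed) / len(values)
        if missing_frac > max_missing_frac or not observed:
            continue  # too sparse to keep
        half_min = min(observed) / 2
        result[name] = [half_min if v is None else v for v in values]
    return result

data = {
    "lactate": [4.0, None, 3.0, 5.0, None, 6.0],
    "rare_metabolite": [None, None, None, None, None, 2.0],  # 5/6 missing
}
print(filter_and_impute(data))
```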

Data Presentation

Table 1: Typical Single-Cell Multi-Omic Data Yields and QC Metrics

| Omics Layer | Assay | Typical Cells Post-QC | Median Features per Cell | Key QC Metric (Passing Threshold) |
|---|---|---|---|---|
| Genomics | scDNA-seq | 5,000 - 10,000 | 50,000 - 100,000 SNPs | Mean Read Depth > 20x |
| Epigenomics | scATAC-seq | 5,000 - 15,000 | 5,000 - 20,000 Peaks | TSS Enrichment Score > 8 |
| Transcriptomics | scRNA-seq (10x) | 8,000 - 15,000 | 2,000 - 5,000 Genes | Mitochondrial Read % < 20% |
| Proteomics | CITE-seq (100-plex) | 8,000 - 15,000 | 50 - 150 ADTs | Total ADT Counts > 1,000 |
| Metabolomics | scMetabolomics (MS) | 500 - 2,000 | 50 - 200 Metabolites | CV < 30% in QC Samples |

Experimental Protocols

Protocol 1: CITE-seq for Surface Protein and Transcriptome Co-Profiling

- Cell Preparation: Harvest and count cells. Aim for >90% viability.

- Antibody Staining: Incubate 1 million cells with a TotalSeq-B antibody cocktail (0.5-2 µg/mL per antibody) in 100 µL PBS + 0.04% BSA for 30 minutes on ice.

- Washing: Wash cells twice with 2 mL PBS + 0.04% BSA to remove unbound antibodies.

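- Cell Barcoding: Proceed immediately to single-cell partitioning on the 10x Genomics Chromium Controller. Match the antibody format to the kit chemistry: TotalSeq-B antibodies are designed for 10x 3' Feature Barcode kits, whereas the Single Cell 5' Reagent Kit v2 pairs with TotalSeq-C antibodies.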

- Library Construction: Generate cDNA, ADT (Antibody-Derived Tag), and, if samples were hashed, HTO (Hashtag Oligo) libraries according to the manufacturer's protocol. Pool libraries equimolarly for sequencing.

Protocol 2: Multi-Omic Integration with MOFA+ on Seurat Objects

- Data Preprocessing: Create a single Seurat object containing both an RNA and an ADT assay. Normalize RNA using `SCTransform` and ADT data using `CLR` normalization.

- Feature Selection: Identify and retain the top 3,000 highly variable genes and all high-quality ADT features.

- MOFA Object Creation: Use the `create_mofa_from_Seurat` function to combine the RNA and ADT assays into a single MOFA object.

- Model Training: Train the model with default options, except set `convergence_mode` to "slow" for more robust results.

- Downstream Analysis: Regress MOFA factors against cell metadata (e.g., disease status) to identify factors driving variation. Plot factor weights to see which genes/proteins define each factor.
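The "regress factors against metadata" step reduces, for a single covariate, to the R² of a simple linear regression of each factor on that covariate (equal to the squared Pearson correlation). The minimal sketch below uses hypothetical factor values and a 0/1-coded disease status; it illustrates the calculation rather than any MOFA2 helper function.

```python
def r_squared(factor, covariate):
    """R^2 of a simple linear regression of factor values on one covariate.

    For a single predictor this equals the squared Pearson correlation.
    A binary covariate (e.g., disease status coded 0/1) works directly.
    """
    n = len(factor)
    mf, mc = sum(factor) / n, sum(covariate) / n
    sxy = sum((f - mf) * (c - mc) for f, c in zip(factor, covariate))
    sxx = sum((c - mc) ** 2 for c in covariate)
    syy = sum((f - mf) ** 2 for f in factor)
    return sxy ** 2 / (sxx * syy)

# Hypothetical factor values for 6 cells and disease status (1 = patient).
factors = {
    "Factor1": [1.2, 0.9, 1.1, -1.0, -1.3, -0.8],
    "Factor2": [0.1, -0.2, 0.3, 0.2, -0.1, 0.0],
}
status = [1, 1, 1, 0, 0, 0]
for name, values in factors.items():
    print(name, round(r_squared(values, status), 3))
```

Here Factor1 separates patients from controls, while Factor2 is essentially unrelated to status.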

[Figure: Multi-Omic Integration Pathway]

[Figure: T Cell Activation Multi-Omic View]

The Scientist's Toolkit: Research Reagent Solutions

| Reagent / Material | Function in Multi-Omic Immunology |
|---|---|
| TotalSeq-B Antibodies | Antibody-derived tags (ADTs) for quantifying surface protein abundance alongside transcriptome in CITE-seq. |
| 10x Genomics Chromium | Microfluidic platform for partitioning single cells and barcoding nucleic acids (RNA, DNA, ATAC). |
| Tn5 Transposase | Enzyme for tagmenting accessible chromatin in scATAC-seq assays. |
| Hashtag Oligos (HTOs) | Sample-barcoding antibodies for multiplexing samples, reducing batch effects and costs. |
| Cell Hashing Antibodies | Antibodies conjugated to HTOs for pooling samples prior to scRNA-seq/CITE-seq. |
| MOFA+ R Package | Statistical framework for integrating multiple omics data sets to uncover latent factors of variation. |
| Harmony R Package | Algorithm for integrating single-cell data across multiple batches or conditions. |
| Seurat R Package | Comprehensive toolkit for single-cell genomics data analysis, including multi-omic integration. |

A Practical Workflow for Multi-Omic Data Integration and Analysis

Frequently Asked Questions (FAQs)

FAQ on Data Processing

Q: How should I normalize my data for MOFA+? Proper normalization is critical for the model to work correctly. For count-based data such as RNA-seq or ATAC-seq, it is strongly recommended to perform size factor normalization followed by variance stabilization. If this step is not done correctly, the model will learn a very strong Factor 1 that captures differences in total expression per sample, pushing more subtle sources of biological variation to subsequent factors [20].
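A minimal sketch of the idea, assuming simple library-size size factors (each cell's total counts divided by the median total) followed by `log1p` as a stand-in for variance stabilization. Real pipelines more often use DESeq2-style size factors with a variance-stabilizing transform, or SCTransform; this swaps in the simplest version for illustration.

```python
import math
from statistics import median

def normalize_counts(counts):
    """Library-size (size-factor) normalization followed by log1p.

    counts: list of cells, each a list of raw feature counts; every cell is
    assumed to have at least one count. Size factor = library size / median
    library size, so a typical cell keeps roughly its original scale.
    """
    lib_sizes = [sum(cell) for cell in counts]
    med = median(lib_sizes)
    size_factors = [s / med for s in lib_sizes]
    return [[math.log1p(c / sf) for c in cell]
            for cell, sf in zip(counts, size_factors)]

# Two hypothetical cells with the same composition but a 2x difference in depth.
print(normalize_counts([[1, 3], [2, 6]]))
```

After normalization the two cells are identical, which is exactly the depth difference that would otherwise dominate Factor 1.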

Q: Should I filter features before using MOFA+? Yes. It is strongly recommended that you filter for highly variable features (HVGs) per assay. However, an important caveat applies when doing multi-group inference: you must regress out the group effect before selecting HVGs to avoid bias [20].
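The caveat about regressing out the group effect can be illustrated with a small sketch: center each feature within each group, then rank features by the remaining variance. The data are hypothetical; feature 0 differs only between groups, so it looks highly variable unless the group means are removed first.

```python
def select_hvgs(X, groups, n_top):
    """Pick the n_top most variable features after removing group means.

    X: list of cells, each a list of (normalized) feature values.
    groups: group label per cell. Centering each feature within every group
    removes the group effect before variability is measured.
    """
    n_cells, n_features = len(X), len(X[0])
    # Accumulate per-group feature sums and cell counts.
    sums, counts = {}, {}
    for row, g in zip(X, groups):
        acc = sums.setdefault(g, [0.0] * n_features)
        for j, v in enumerate(row):
            acc[j] += v
        counts[g] = counts.get(g, 0) + 1
    means = {g: [s / counts[g] for s in acc] for g, acc in sums.items()}
    # Variance of each feature after centering it within its group.
    resid_var = [
        sum((row[j] - means[g][j]) ** 2 for row, g in zip(X, groups)) / n_cells
        for j in range(n_features)
    ]
    ranked = sorted(range(n_features), key=lambda j: resid_var[j], reverse=True)
    return ranked[:n_top]

X = [[0, 0, 1.0], [0, 2, 1.1], [0, 4, 1.0],   # group g1
     [5, 0, 1.0], [5, 2, 1.1], [5, 4, 1.0]]   # group g2
groups = ["g1"] * 3 + ["g2"] * 3
print(select_hvgs(X, groups, 1))      # group effect removed first
print(select_hvgs(X, ["all"] * 6, 1)) # group effect left in
```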

Q: How do I handle batch effects or other technical factors?

MOFA+ is designed to discover dominant sources of variation, whether biological or technical. If you have known, strong technical factors (e.g., batch effects), MOFA+ will likely dedicate initial factors to capturing this large variability. To prevent this, it is recommended to regress out these technical factors a priori using methods like linear models in limma. This allows MOFA+ to focus on discovering more subtle biological sources of variation [20].
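In R, `limma::removeBatchEffect()` is the usual route. The sketch below shows the underlying idea for one continuous technical covariate (hypothetical values, e.g., a per-sample technical score): fit the feature on the covariate by ordinary least squares and keep the residuals, adding the feature mean back. For a categorical batch the analogous step is subtracting per-batch means.

```python
def regress_out(feature, covariate):
    """Remove the linear effect of one covariate from one feature.

    Fits feature ~ a + b * covariate by ordinary least squares and returns the
    residuals with the feature mean added back, so the overall level of the
    feature is kept while the covariate-driven trend is removed.
    """
    n = len(feature)
    mx, mc = sum(feature) / n, sum(covariate) / n
    sxx = sum((c - mc) ** 2 for c in covariate)
    b = sum((x - mx) * (c - mc) for x, c in zip(feature, covariate)) / sxx
    return [x - b * (c - mc) for x, c in zip(feature, covariate)]

# A feature driven entirely by the technical covariate becomes flat.
print(regress_out([3, 5, 7, 9], [1, 2, 3, 4]))
```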

Q: My data modalities have different numbers of features. Does this matter? Yes. Larger data modalities (those with more features) tend to be overrepresented in the inferred factors. It is good practice to filter uninformative features to bring the different views within the same order of magnitude. If the size disparity is unavoidable, be aware that the model might miss small sources of variation present in the smaller dataset [20].

Q: Can MOFA+ handle missing values? Yes. MOFA+ simply ignores missing values in the likelihood calculation, and there is no hidden imputation step. Matrix factorization models like MOFA+ are known for their robustness to missing values [20].

FAQ on Model Training and Setup

Q: How does the multi-group framework work? The multi-group framework in MOFA+ is designed to compare the sources of variability that drive each pre-defined group (e.g., different experimental conditions, batches, or donors). Its goal is not to capture simple differential changes in mean levels between groups. To achieve this, the group effect is regressed out from the data before fitting the model, allowing you to identify which factors are shared across groups and which are specific to a single group [20].

Q: How do I define groups for my analysis? Group definition is a hypothesis-driven step motivated by your experimental design. There is no single "right" way, but the definition should align with your biological question. Crucially, remember that the multi-group framework aims to dissect variability, not to find factors that separate groups. If your aim is to find a factor that cleanly "separates" groups, you should not use the multi-group framework [20].

Q: How many factors should I learn? The optimal number of factors is context-dependent. It is influenced by the aim of your analysis, the dimensions of your assays, and the complexity of the data. As a general guideline, if the goal is to identify major biological sources of variation, considering the top 10-15 factors is typical. For tasks like imputation of missing values, where even minor sources of variation can be important, training a model with a larger number of factors is advisable [20] [21].

Q: What types of data can MOFA+ handle? MOFA+ can cope with three main types of data via different likelihoods:

- Continuous data: Modelled using a Gaussian likelihood.

- Binary data: Modelled using a Bernoulli likelihood.

- Count data: Modelled using a Poisson likelihood. A critical best practice is that if your data can be safely transformed to meet the assumptions of a Gaussian likelihood (e.g., normalizing and variance-stabilizing RNA-seq counts), this is always recommended. Non-Gaussian likelihoods require statistical approximations and are generally less accurate [20].
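The three likelihood families can be written as textbook log-densities, together with a helper that sums per-entry log-likelihoods while skipping missing entries, which is what "ignoring missing values in the likelihood" means in practice. This is an illustrative sketch, not MOFA+'s internal code; the Gaussian is parameterized by its precision (inverse variance).

```python
import math

def gaussian_ll(y, mean, precision):
    """log N(y | mean, 1/precision) for continuous data."""
    return 0.5 * math.log(precision / (2 * math.pi)) - 0.5 * precision * (y - mean) ** 2

def bernoulli_ll(y, p):
    """log Bernoulli(y | p) for binary data (y in {0, 1}, 0 < p < 1)."""
    return math.log(p) if y == 1 else math.log(1 - p)

def poisson_ll(y, rate):
    """log Poisson(y | rate) for non-negative integer counts."""
    return y * math.log(rate) - rate - math.lgamma(y + 1)

def total_ll(observations, params, loglik):
    """Sum per-entry log-likelihoods, skipping missing entries (None).

    Missing values contribute nothing to the total; nothing is imputed.
    """
    return sum(loglik(y, *p) for y, p in zip(observations, params) if y is not None)

# Binary view with one missing entry: only the two observed values count.
print(total_ll([1, None, 0], [(0.5,), (0.9,), (0.5,)], bernoulli_ll))
```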

FAQ on Interpretation and Downstream Analysis

Q: How do I interpret the factors? MOFA+ factors capture global sources of variability, ordinating cells along a one-dimensional axis centered at zero. The relative positioning of cells is what matters. Cells with different signs display opposite "effects" along the inferred axis, and a higher absolute value indicates a stronger effect. This interpretation is analogous to interpreting principal components in PCA [20].

Q: How do I interpret the weights? Weights indicate how strongly each feature relates to a factor, enabling biological interpretation. Features with weights near zero have little association with the factor. Features with large absolute weights are strongly associated. The sign of the weight indicates the direction of the effect: a positive weight means the feature has higher levels in cells with positive factor values, and vice versa [20].
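The sign rules follow from how a factor model reconstructs the data: each value is approximated by the sum over factors of (factor value x weight). A minimal sketch with one hypothetical factor, two cells, and three features:

```python
def reconstruct(Z, W):
    """Reconstruct data as Z @ W^T.

    Z[n][k]: value of factor k in cell n; W[d][k]: weight of feature d on
    factor k. Returns a cells x features matrix of sum_k Z[n][k] * W[d][k].
    """
    return [[sum(z_k * w_k for z_k, w_k in zip(z, w)) for w in W] for z in Z]

# One factor; cell A has a positive factor value, cell B a negative one.
Z = [[2.0], [-1.5]]
# Feature 0 has a positive weight, feature 1 a negative weight, feature 2 zero.
W = [[0.8], [-0.6], [0.0]]
print(reconstruct(Z, W))
```

The positively weighted feature is high in the cell with a positive factor value and low in the other; the negatively weighted feature shows the opposite pattern; the zero-weight feature is unaffected.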

Troubleshooting Common Experimental Issues

Model Fitting and Convergence Problems

Issue: The model converges very slowly or not at all.

- Potential Cause: The learning rate for the stochastic variational inference (SVI) may be set too high or too low.

- Solution: Adjust the stochastic inference options `learning_rate` and `forgetting_rate`, which set the initial step size and how quickly it decays across iterations. If the ELBO oscillates, lower the learning rate or increase the forgetting rate; if it improves only very slowly, a larger learning rate may help [9].
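Stochastic variational inference typically uses a Robbins-Monro style decaying step size. The sketch below shows that standard schedule and the blended update it drives; whether MOFA+ uses exactly this form is an assumption, and the names `learning_rate` and `forgetting_rate` are used for illustration, so check the option names in your installed MOFA2/mofapy2 version.

```python
def step_size(iteration, learning_rate, forgetting_rate):
    """Decaying SVI step size: rho_t = learning_rate * (1 + t) ** (-forgetting_rate).

    A larger forgetting_rate makes the step shrink faster over iterations.
    """
    return learning_rate * (1 + iteration) ** (-forgetting_rate)

def svi_update(old, noisy_estimate, rho):
    """Blend the current parameter with a mini-batch estimate using step rho."""
    return (1 - rho) * old + rho * noisy_estimate

# Hypothetical settings: compare the schedule for two forgetting rates.
for kappa in (0.25, 0.75):
    print(kappa, [round(step_size(t, 0.5, kappa), 3) for t in range(0, 50, 10)])
```

Large early steps move quickly toward a good region; the decay keeps late, noisy mini-batch estimates from making the ELBO oscillate.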

Issue: The model is not capturing known biological signal.

- Potential Cause 1: Overwhelming technical variation. As noted in the FAQs, strong technical effects like batch or library size can dominate the first factors.

- Solution: Revisit your data preprocessing. Ensure you have properly normalized and regressed out known technical covariates before training the model [20].

- Potential Cause 2: The number of factors (K) is too small.

- Solution: Increase the number of factors and use the model selection criteria (e.g., looking at the variance explained by additional factors) to choose an appropriate K [21].
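A minimal sketch of the variance-explained criterion for one view, assuming centered data and the per-factor definition R² = 1 - SS(Y - z_k w_k^T) / SS(Y), then keeping factors above a 1% threshold. The data are hypothetical: a strong factor plus a weak one that explains well under 1%.

```python
def variance_explained_per_factor(Y, Z, W):
    """Per-factor R^2 = 1 - SS(Y - z_k w_k^T) / SS(Y) for centered data Y.

    Y: cells x features; Z: cells x factors; W: features x factors.
    """
    ss_total = sum(v ** 2 for row in Y for v in row)
    r2 = []
    for k in range(len(Z[0])):
        ss_res = sum(
            (Y[n][d] - Z[n][k] * W[d][k]) ** 2
            for n in range(len(Y)) for d in range(len(Y[0]))
        )
        r2.append(1 - ss_res / ss_total)
    return r2

def factors_to_keep(r2, threshold=0.01):
    """Indices of factors explaining more than `threshold` of the variance."""
    return [k for k, v in enumerate(r2) if v > threshold]

Z = [[1, 1], [-1, 1], [1, -1], [-1, -1]]
W = [[2.0, 0.1], [2.0, 0.1]]
Y = [[sum(Z[n][k] * W[d][k] for k in range(2)) for d in range(2)] for n in range(4)]
r2 = variance_explained_per_factor(Y, Z, W)
print([round(v, 4) for v in r2], factors_to_keep(r2))
```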

Data Integration and Interpretation Challenges

Issue: One data modality is dominating the factors.

- Potential Cause: A large disparity in the number of features or the amount of intrinsic variability between modalities.

- Solution: Perform more aggressive feature filtering on the larger/more variable modality to balance its influence relative to the other modalities. The goal is to have all modalities contribute meaningfully to the latent space [20].

Issue: In multi-group mode, I cannot find any group-specific factors.

- Potential Cause: The groups may not have distinct internal structures, or the signal of group-specific variation may be weak compared to shared variation.

Experimental Protocols & Workflows

Standard Protocol for Multi-Group Single-Cell RNA-seq Integration

This protocol is adapted from the MOFA+ application on a time-course scRNA-seq dataset of mouse embryos [9].

1. Input Data Preparation:

- Data: 16,152 cells from mouse embryos at embryonic days E6.5, E7.0, and E7.25 (two biological replicates per stage).

- Group Definition: The six batches (two replicates per stage) were defined as distinct groups in the MOFA+ model.

2. Preprocessing and Normalization:

- Filtering: Cells and genes were filtered based on standard quality control metrics.

- Normalization: Size factor normalization and variance stabilization transformation were applied to the count data.

- Feature Selection: Highly variable genes were selected after regressing out the group effect.

3. Model Training:

- The MOFA+ model was trained using stochastic variational inference, which provided a ~20-fold speed increase on large datasets compared to standard variational inference.

- The model was run for a sufficient number of iterations until convergence of the Evidence Lower Bound (ELBO).

4. Downstream Analysis:

- Variance Decomposition: The model identified 7 factors explaining >1% of variance, collectively accounting for 35-55% of transcriptional variance per embryo.

- Factor Interpretation:

- Factors 1 and 2 were associated with extra-embryonic (ExE) cell types (marked by Ttr, Apoa1, Rhox5, Bex3).

- Factor 4 captured the transition of epiblast to nascent mesoderm (marked by Mesp1, Phlda2).

- Factor 3 was identified as a technical factor related to metabolic stress.

- Temporal Analysis: Inspection of factor activity over time showed that the variance explained by Factor 4 (mesoderm) increased from E6.5 to E7.25, consistent with developmental progression.

Protocol for Multi-Modal Single-Cell Data Integration

This protocol outlines the general workflow for integrating data from different molecular layers, such as RNA expression and DNA methylation [9] [4].

1. Data Input and Setup:

- Assemble your data matrices where features are aggregated into non-overlapping views (e.g., RNA expression, DNA methylation) and cells are aggregated into non-overlapping groups (e.g., different conditions or samples).

2. Model Configuration:

- Specify the appropriate likelihood for each data view (Gaussian for normalized continuous data, Bernoulli for binary data, etc.).

- Choose the number of factors. It is often practical to start with a slightly larger number and then prune factors that do not explain meaningful variation.

3. Model Inference:

- Use the GPU-accelerated training option for large datasets to significantly speed up computation [9].

4. Analysis of Results:

- Use the learned latent factors for a wide range of analyses: variance decomposition, inspection of feature weights, inference of trajectories, and clustering.

Table 1: Performance Comparison of MOFA+ and Other Integration Methods on a Single-Cell Multiome Dataset (69,249 BMMC cells) [22]

| Method | Running Time (Minutes) | Peak Memory Usage (GB) | Batch Correction Score (kBET) | Biological Conservation (ARI) |
|---|---|---|---|---|
| Smmit | <15 | 23.05 | Highest | Highest |
| Multigrate | ~167 | 217.29 | Intermediate | High |
| scVAEIT | >1690 | >230 | Intermediate | Intermediate |
| MOFA+ | Not Reported | Not Reported | Lower | Lower |
| MultiVI | Not Reported | Not Reported | Lower | Lower |

Table 2: Key Statistical Features of the MOFA+ Framework [9]

| Feature | Description |
|---|---|
| Core Model | Bayesian Group Factor Analysis |
| Inference | Stochastic Variational Inference (SVI) with GPU acceleration |
| Key Prior | Automatic Relevance Determination (ARD) for structured sparsity across views and groups |
| Handling of Missing Data | Ignored in the likelihood (no imputation) |
| Data Types | Supports Gaussian (continuous), Bernoulli (binary), and Poisson (count) likelihoods |

Research Reagent Solutions

Table 3: Essential Materials and Computational Tools for MOFA+ Analysis

| Item / Software | Function / Purpose |
|---|---|
| MOFA2 (R Package) | The primary R package for running MOFA+, including data input, model training, and comprehensive downstream analysis. |
| mofapy2 (Python Package) | The Python core that performs the model inference. Can be used independently for model training. |
| Seurat | A toolkit for single-cell genomics; often used in conjunction with MOFA for upstream filtering or downstream analysis like UMAP visualization and clustering [22]. |
| Harmony | An integration algorithm that can be used as an alternative or complement to MOFA+ for batch correction [22]. |
| Scanpy | A Python-based toolkit for analyzing single-cell gene expression data; can be used for preprocessing steps. |
| NVIDIA GPU with CuPy | Recommended hardware and software configuration to enable accelerated model training for large datasets [9] [20]. |
| OpenBLAS / Intel MKL | Optimized math libraries to speed up CPU-based linear algebra operations during model training [20]. |

[Figure: MOFA+ Core Workflow and Downstream Analysis]

[Figure: Multi-Group Integration Logic in MOFA+]

Leveraging Seurat v5 for Integrative Multimodal Analysis and Bridge Integration

Frequently Asked Questions

Q: What are the key integration methods available in Seurat v5 and how do I choose between them?

Seurat v5 provides streamlined access to multiple integration methods through the IntegrateLayers function. The available methods include Anchor-based CCA integration (CCAIntegration), Anchor-based RPCA integration (RPCAIntegration), Harmony (HarmonyIntegration), FastMNN (FastMNNIntegration), and scVI (scVIIntegration). RPCA integration is faster and more conservative than CCA, while scVI requires additional Python dependencies but can be particularly powerful for complex integration tasks. The choice depends on your data characteristics and computational resources [23].

Q: How does Seurat v5 handle multimodal data like CITE-seq with simultaneous RNA and protein measurements?

Seurat v5 stores multimodal data in separate assays within the same object. For CITE-seq data, you would typically have an RNA assay for transcriptomic measurements and an ADT assay for antibody-derived tags. The CreateAssay5Object function allows you to add additional modalities, and you can switch between assays using DefaultAssay() or specify modalities using assay keys in feature names (e.g., "adt_CD19" for protein data vs. "rna_CD19" for RNA data) [24].

Q: What should I do if my FeaturePlot color palette is only showing two bins in Seurat v5?

This appears to be a known issue in Seurat v5 where supplying a custom color vector may cause the data to be binned into only two categories corresponding to the minimal and maximal values. As a workaround, you can use the built-in color palettes like CustomPalette(), BlackAndWhite(), BlueAndRed(), or PurpleAndYellow() which are designed to work properly with the visualization functions [25] [26].

Q: How do I properly normalize different data modalities in Seurat?

Different modalities require different normalization approaches. For RNA data, standard log-normalization or SCTransform is appropriate. For ADT data from CITE-seq, use NormalizeData with normalization.method = "CLR" and margin = 2 to perform centered log-ratio normalization across cells. For count-based data like ATAC-seq, size factor normalization with variance stabilization is recommended [24] [20].

Q: What are the key considerations for successful multi-omic integration?

Proper normalization is critical to prevent any single modality from dominating the integration. Filter highly variable features per assay and ensure different modalities have comparable numbers of informative features. For multi-group inference, regress out group effects before selecting variable features. Larger data modalities tend to be overrepresented, so balancing informative features across modalities is important [20].

Troubleshooting Guides

Integration and Clustering Issues

Problem: Poor integration results with batch effects still visible after running IntegrateLayers

- Cause: Insufficient correction of technical variability or improper normalization

- Solutions:

- Ensure proper normalization of each modality separately before integration

- Check that you have regressed out known technical factors like batch effects prior to integration

- Try a different integration method (CCA generally corrects more strongly than the more conservative RPCA)

- Increase the number of variable features used for integration

- Verify that the data layers are properly split by batch before integration [20] [23]

Problem: Integration removes biological signal along with technical noise

- Cause: Over-correction during the integration process

- Solutions:

- Use the more conservative RPCA integration method instead of CCA

- Reduce the strength of correction through method-specific parameters (for example, a lower `theta` for Harmony)

- Include known biological covariates in the downstream analysis rather than relying solely on integration to preserve them

- Compare integrated results with unintegrated analysis to verify biological signals are maintained [23]

Multimodal Data Analysis Issues

Problem: Difficulty visualizing both RNA and protein markers in the same analysis

- Cause: Default assay setting may not match the modality you want to visualize

- Solutions:

- Use assay keys in feature names (e.g., "adt_CD3" for protein, "rna_CD3E" for RNA) to explicitly specify modalities

- Switch default assays using `DefaultAssay(object) <- "ADT"` or `DefaultAssay(object) <- "RNA"`

- Use the `FeaturePlot` function with specified assay keys to visualize both modalities side-by-side [24]

Problem: One data modality dominates the integrated analysis

- Cause: Imbalance in dimensionality or information content between modalities

- Solutions:

- Filter uninformative features from the larger modality to balance dimensions

- Apply more stringent variable feature selection to prevent overrepresentation

- Consider down-weighting the influence of the dominant modality in the integration parameters

- Ensure proper normalization specific to each data type [20]

Visualization Problems

Problem: Custom color palettes not working properly in FeaturePlot

- Cause: Changed behavior in Seurat v5 regarding color palette handling

- Solutions:

- Use built-in palettes like `BlueAndRed(k = 50)` or `CustomPalette(low = "white", high = "red", mid = NULL, k = 50)`

- Ensure the length of your color vector matches the expected binning structure

- Check the `keep.scale` parameter setting, as it affects color scaling

- Consider using the `cols` parameter with predefined palettes rather than custom vectors [25] [26]

Experimental Protocols

Multimodal Data Integration Workflow

Step-by-Step Protocol:

Data Loading and Initialization

- Load RNA and ADT count matrices using `read.csv()` or `Read10X()`

- Create the Seurat object: `object <- CreateSeuratObject(counts = rna_matrix)`

- Add additional assays using `CreateAssay5Object()` and `object[["ADT"]] <- adt_assay` [24]

Modality-Specific Normalization

- Normalize RNA with log-normalization (`NormalizeData()`) or `SCTransform()`, and ADT with `NormalizeData(object, assay = "ADT", normalization.method = "CLR", margin = 2)` [24]

Data Splitting and Preprocessing

- Split layers by batch: `object[["RNA"]] <- split(object[["RNA"]], f = object$batch)`

- Find variable features: `object <- FindVariableFeatures(object)`

- Scale data: `object <- ScaleData(object)`

- Run PCA: `object <- RunPCA(object, npcs = 30)` [23]

Integration Methods

- Choose an integration method based on data characteristics

- Execute integration: `object <- IntegrateLayers(object, method = HarmonyIntegration, orig.reduction = "pca", new.reduction = "integrated.harmony")`

- Available methods: CCAIntegration, RPCAIntegration, HarmonyIntegration, FastMNNIntegration, scVIIntegration [23]

Downstream Analysis

Bridge Integration Protocol

Bridge integration enables mapping between different modalities or technologies by using a multi-omic bridge dataset. This is particularly useful for mapping scATAC-seq data onto scRNA-seq references [27].

Reference Preparation

- Build a comprehensive reference from multi-omic data

- Annotate cell types using known markers

- Normalize and scale the reference dataset

Query Dataset Processing

- Process query data using standard workflow

- Ensure compatibility of feature space with reference

- Normalize using same parameters as reference

Bridge Mapping

- Use bridge cells measured in both modalities

- Transfer annotations from reference to query

- Validate mapping accuracy using known markers

Comparative Analysis of Integration Methods

Table 1: Comparison of Integration Methods in Seurat v5

| Method | Speed | Memory Usage | Best Use Case | Key Parameters | Installation Requirements |
|---|---|---|---|---|---|
| CCAIntegration | Medium | Medium | General purpose batch correction | k.anchor, k.filter | Base Seurat |
| RPCAIntegration | Fast | Low | Large datasets, conservative correction | k.anchor, k.filter | Base Seurat |
| HarmonyIntegration | Fast | Low | Multiple batch effects | theta, lambda | harmony package |
| FastMNNIntegration | Fast | Medium | scRNA-seq specific integration | k, d | batchelor package |
| scVIIntegration | Slow (GPU accelerated) | High | Complex multimodal data | n_layers, dropout_rate | reticulate, scvi-tools |

Research Reagent Solutions

Table 2: Essential Research Reagents and Computational Tools

| Reagent/Tool | Function | Application Context | Key Features |
|---|---|---|---|
| CITE-seq Antibodies | Cell surface protein quantification | Multimodal analysis with transcriptomics | DNA-barcoded, 11-500 plex panels |
| 10x Multiome Kit | Paired RNA+ATAC sequencing | Cellular transcriptome and chromatin accessibility | Simultaneous measurement from same cell |
| Cell Hashing | Sample multiplexing | Pooled analysis with demultiplexing | Hashtag oligos for sample identification |
| Seurat v5 | Single-cell analysis platform | Multimodal data integration | Assay layers, disk-backed data |
| MOFA2 | Multi-omics factor analysis | Unsupervised integration | Identifies latent factors across modalities |
| Harmony | Batch integration | Removing technical variability | Efficient, fast clustering integration |
| BPCells | Scalable computation | Large dataset handling | On-disk matrices, memory efficient |

[Figure: Troubleshooting Flowchart]

Pro Tips for Success

- Data Quality Control: Always examine unintegrated data first to understand baseline batch effects and biological signals [23]

- Iterative Approach: Try multiple integration methods and compare results using known biological markers [23]

- Memory Management: For large datasets (>100K cells), use Seurat v5's disk-backed capabilities with BPCells [27]

- Validation: Use known cell type markers and biological expectations to validate integration quality rather than relying solely on computational metrics

- Multi-group Framework: When using the multi-group functionality in MOFA, remember it aims to compare sources of variability between groups rather than find factors that separate groups [20]

Applying Harmony for Batch Correction and Scalable Data Integration

FAQs and Troubleshooting Guides

Core Concepts and Methodology

What is Harmony and what is its primary function in single-cell analysis? Harmony is an algorithm designed for the integration of single-cell genomics datasets. Its primary function is to project cells from multiple datasets, which may have technical differences (like different batches, donors, or technologies), into a shared embedding. In this shared space, cells group by their biological identity, such as cell type, rather than by dataset-specific conditions. This process is crucial for robust joint analysis of data from diverse sources [2].

How does the Harmony algorithm work technically? Harmony operates through an iterative process on a low-dimensional embedding of cells (like PCA) [2]:

- Clustering: It first groups cells into multi-dataset clusters using a soft k-means clustering that favors clusters containing cells from multiple datasets [2].

- Centroid Calculation: For each cluster, dataset-specific centroids are calculated [2].

- Correction Factor: A cluster-specific linear correction factor is computed based on these centroids [2].

- Cell-specific Correction: Each cell receives a cluster-weighted average of these correction factors and is adjusted. Since cells can belong to multiple clusters, each cell's correction factor is unique [2].

- Iteration: These steps repeat until cluster assignments stabilize, effectively removing dataset-specific variation [2].
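The loop above can be sketched in a few dozen lines. This is a deliberately simplified, hypothetical illustration, not the harmony package: it uses squared Euclidean distance (Harmony works on normalized embeddings), a diversity penalty written in the spirit of Harmony's as ((E + 1) / (O + 1)) ** theta, where O and E are the observed and expected batch weight per cluster, and plain centroid differences in place of Harmony's ridge-regression correction.

```python
import math

def harmony_sketch(Z, batches, init_centroids, theta=2.0, sigma=1.0,
                   n_rounds=5, n_kmeans=5):
    """Simplified Harmony-style integration (illustrative only).

    Z: cells x dims embedding (e.g., PCA coordinates). batches: one label per
    cell. init_centroids: K starting centroids. n_kmeans must be >= 1.
    """
    n, d, K = len(Z), len(Z[0]), len(init_centroids)
    labels = sorted(set(batches))
    frac = {b: batches.count(b) / n for b in labels}  # overall batch proportions
    Y = [list(c) for c in init_centroids]
    Z_corr = [list(z) for z in Z]
    for _ in range(n_rounds):
        for _ in range(n_kmeans):
            # 1. Soft k-means assignments (distances shifted by their minimum for stability).
            R = []
            for z in Z_corr:
                dist = [sum((a - c) ** 2 for a, c in zip(z, y)) for y in Y]
                m = min(dist)
                w = [math.exp(-(x - m) / sigma) for x in dist]
                s = sum(w)
                R.append([x / s for x in w])
            # 2. Diversity penalty: down-weight clusters where a cell's own batch
            #    is over-represented (observed O vs. expected E batch weight).
            O = {(k, b): 0.0 for k in range(K) for b in labels}
            for r, b in zip(R, batches):
                for k in range(K):
                    O[(k, b)] += r[k]
            size = [sum(r[k] for r in R) for k in range(K)]
            for i, b in enumerate(batches):
                w = [R[i][k] * ((size[k] * frac[b] + 1) / (O[(k, b)] + 1)) ** theta
                     for k in range(K)]
                s = sum(w)
                R[i] = [x / s for x in w]
            # 3. Centroids become R-weighted means of the current embedding.
            for k in range(K):
                tot = max(sum(r[k] for r in R), 1e-12)
                Y[k] = [sum(r[k] * z[j] for r, z in zip(R, Z_corr)) / tot for j in range(d)]
        # 4. Correction, always applied to the ORIGINAL embedding: each cell is shifted
        #    by its R-weighted (batch centroid - cluster centroid) offsets.
        Z_corr = [list(z) for z in Z]
        for k in range(K):
            wk = [r[k] for r in R]
            tot = max(sum(wk), 1e-12)
            mu_k = [sum(w * z[j] for w, z in zip(wk, Z)) / tot for j in range(d)]
            for b in labels:
                idx = [i for i in range(n) if batches[i] == b]
                tb = sum(wk[i] for i in idx)
                if tb <= 1e-12:
                    continue
                mu_kb = [sum(wk[i] * Z[i][j] for i in idx) / tb for j in range(d)]
                for i in idx:
                    for j in range(d):
                        Z_corr[i][j] -= wk[i] * (mu_kb[j] - mu_k[j])
    return Z_corr

# Two cell types (x near 0 and x near 10); batch 2 is shifted by +3 in y.
Z = [[0, 0], [0.5, 0], [0, 0.5], [10, 0], [10.5, 0], [10, 0.5],
     [0, 3], [0.5, 3], [0, 3.5], [10, 3], [10.5, 3], [10, 3.5]]
batches = [1] * 6 + [2] * 6
corrected = harmony_sketch(Z, batches, init_centroids=[[0, 0], [10, 0]])
```

After correction the two batches share the same mean position, while the two cell types remain about 10 units apart.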

What are the key quantitative metrics for assessing Harmony's integration performance? Two key metrics, often used together, are the Local Inverse Simpson's Index (LISI) and the Adjusted Rand Index (ARI) [6] [28] [2].

Table 1: Key Quantitative Metrics for Assessing Data Integration

| Metric Name | Acronym | What It Measures | Interpretation of Values |
|---|---|---|---|
| Integration LISI [2] | iLISI | The effective number of datasets represented in a cell's local neighborhood. | Higher values (closer to the max number of datasets) indicate better mixing. A value of 1 indicates no integration [2]. |
| Cell-type LISI [2] | cLISI | The effective number of cell types in a cell's local neighborhood. | Values near 1 indicate good separation of cell types (high accuracy). Higher values indicate cell types are mixed together [2]. |
| Adjusted Rand Index [28] | ARI | The chance-corrected similarity between two clusterings (e.g., clustering results from Harmony vs. original data). | A value of 1 indicates perfect agreement and values near 0 indicate chance-level agreement; slightly negative values are possible. |
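Both metrics can be sketched in pure Python. The LISI function here is a simplification: it uses uniform weights over each cell's k nearest neighbors (the cell itself included), whereas the published LISI weights neighbors with a perplexity-calibrated Gaussian kernel. Passing batch labels gives an iLISI-style score; passing cell-type labels gives a cLISI-style score. The coordinates are hypothetical.

```python
import math
from collections import Counter

def simple_lisi(coords, labels, k):
    """Inverse Simpson's index of labels among each cell's k nearest cells."""
    scores = []
    for c in coords:
        # Indices of the k closest cells (the cell itself is at distance 0).
        nearest = sorted(range(len(coords)), key=lambda j: math.dist(c, coords[j]))[:k]
        counts = Counter(labels[j] for j in nearest)
        simpson = sum((m / k) ** 2 for m in counts.values())
        scores.append(1 / simpson)
    return scores

def _pairs(x):
    """Number of unordered pairs among x items."""
    return x * (x - 1) / 2

def adjusted_rand_index(a, b):
    """ARI between two labelings (undefined if both are a single cluster)."""
    n = len(a)
    index = sum(_pairs(v) for v in Counter(zip(a, b)).values())
    sum_a = sum(_pairs(v) for v in Counter(a).values())
    sum_b = sum(_pairs(v) for v in Counter(b).values())
    expected = sum_a * sum_b / _pairs(n)
    max_index = (sum_a + sum_b) / 2
    return (index - expected) / (max_index - expected)

mixed = [(0, 0), (0, 0.1), (0.1, 0), (0.1, 0.1)]
separated = [(0, 0), (0, 0.1), (50, 50), (50, 50.1)]
print(simple_lisi(mixed, ["a", "b", "a", "b"], 4))      # well mixed
print(simple_lisi(separated, ["a", "a", "b", "b"], 2))  # not mixed
print(adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1]))  # worse than chance
```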

Implementation and Workflow

How do I run Harmony within a standard Seurat workflow?

In Seurat v5, you can run Harmony with a single line of code using the IntegrateLayers function [23]. The process involves two key changes to a standard workflow:

- Use `IntegrateLayers` with `method = HarmonyIntegration` on your Seurat object after normalization, variable feature identification, scaling, and PCA [23].

- In all downstream analyses, such as running UMAP or finding neighbors, use the Harmony embedding (the reduction named by `new.reduction`, e.g., `reduction = "harmony"`) instead of the standard PCA reduction [23].

What are the computational requirements for running Harmony? A significant advantage of Harmony is its computational efficiency. It is designed to be scalable and requires dramatically less memory than many other integration algorithms. Benchmark tests show that Harmony is capable of analyzing large datasets (on the order of 10^5 to 10^6 cells) on a personal computer, where other methods may fail due to memory constraints [2].

Can Harmony integrate over multiple technical covariates simultaneously? Yes. Harmony is flexible and can integrate over multiple covariates at once, such as different batches and different technology platforms. This is specified by providing a vector of column names from your metadata to the relevant parameter in the integration function [29].

Troubleshooting Common Issues

The datasets are not well mixed after running Harmony. What could be wrong?

First, verify that your data preprocessing steps (normalization, variable feature selection) were performed correctly. Ensure you are using the correct reduction (reduction = "harmony") in downstream steps like UMAP calculation. If the problem persists, it may indicate very strong batch effects. You can try adjusting Harmony's parameters, such as theta (which controls the degree of correction), or consider the anchor-based CCA integration available in Seurat, which corrects more strongly than the more conservative RPCA method [23].

How can I tell if I have overcorrected my data with Harmony? Overcorrection occurs when technical batch effects are removed so aggressively that real biological variation is also removed. Key signs of overcorrection include [30]:

- Cluster-specific markers comprise genes with widespread high expression (e.g., ribosomal genes).

- Significant overlap among markers from different clusters.

- Absence of expected canonical cell-type markers.

- A scarcity of differential expression hits in pathways known to be active in your samples.

My dataset is very large and distributed across multiple institutions. Can I still use Harmony? Yes. A novel method called Federated Harmony has been developed to address this exact challenge. It combines the Harmony algorithm with a federated learning framework. This allows multiple institutions to collaboratively integrate their single-cell data without sharing any raw data. Each institution performs local computations and shares only aggregated statistics with a central server, which coordinates the integration process. This preserves data privacy while maintaining integration performance comparable to the standard Harmony algorithm [6] [28].
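The privacy idea can be illustrated with one step that Harmony-style clustering needs: recomputing cluster centroids. In this hypothetical sketch (not the Federated Harmony implementation), each site shares only soft-assignment-weighted coordinate sums and total weights per cluster, and the server combines them into the same centroids it would get from pooled raw data.

```python
def local_cluster_stats(embeddings, R):
    """Per-site summary: R-weighted coordinate sums and total weight per cluster.

    embeddings: this site's cells (lists of floats); R: soft assignments
    (one list of K weights per cell). Only these aggregates leave the site.
    """
    K, d = len(R[0]), len(embeddings[0])
    sums = [[0.0] * d for _ in range(K)]
    weights = [0.0] * K
    for z, r in zip(embeddings, R):
        for k in range(K):
            weights[k] += r[k]
            for j in range(d):
                sums[k][j] += r[k] * z[j]
    return sums, weights

def server_centroids(site_stats):
    """Combine per-site aggregates into global cluster centroids.

    Assumes every cluster has non-zero total weight across sites.
    """
    K, d = len(site_stats[0][1]), len(site_stats[0][0][0])
    total_sums = [[0.0] * d for _ in range(K)]
    total_w = [0.0] * K
    for sums, weights in site_stats:
        for k in range(K):
            total_w[k] += weights[k]
            for j in range(d):
                total_sums[k][j] += sums[k][j]
    return [[s / total_w[k] for s in total_sums[k]] for k in range(K)]

site1 = local_cluster_stats([[0, 0], [2, 0]], [[1, 0], [1, 0]])
site2 = local_cluster_stats([[4, 0], [10, 10]], [[1, 0], [0, 1]])
print(server_centroids([site1, site2]))
```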

Advanced Applications

On what types of single-cell data has Harmony been successfully evaluated? Harmony and its derivatives have been validated on a variety of single-cell data types, demonstrating its versatility. Performance comparable to standard Harmony has been shown on [6] [28]:

- scRNA-seq: e.g., human peripheral blood mononuclear cell (PBMC) data.

- scATAC-seq: e.g., integrating chromatin accessibility data from different platforms.

- Spatial Transcriptomics: e.g., mouse brain tissue data from multiple batches.

Experimental Protocols and Workflows

Detailed Methodology: Benchmarking Harmony on Cell Line Data

This protocol is based on the original Harmony study, which used a controlled mixture of Jurkat and 293T cell lines to quantitatively assess integration performance [2].

1. Objective: To evaluate Harmony's integration (mixing of datasets) and accuracy (separation of cell types) using datasets where cell identity is known.

2. Datasets:

- Pure Jurkat cell dataset.

- Pure 293T cell dataset.

- 50:50 mixture dataset of Jurkat and 293T cells [2].

3. Workflow:

- Data Preprocessing: Apply a standard scRNA-seq pipeline including library normalization, log-transformation of counts, and scaling.

- Dimensionality Reduction: Perform PCA and retain the top principal components.

- Baseline Assessment: Visualize the PCA/UMAP and calculate baseline iLISI and cLISI metrics. Cells are expected to cluster primarily by dataset of origin.

- Harmony Integration: Run the Harmony algorithm on the PCA embeddings.

- Post-Integration Analysis:

- Visualize the Harmony-corrected embeddings using UMAP.

- Re-calculate iLISI and cLISI metrics on the integrated space.

- Compare the post-integration metrics with the baseline. Successful integration is indicated by a strong increase in iLISI (better mixing) while cLISI remains near 1 (cell types remain distinct) [2].
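Both metrics are local inverse Simpson's indices: for each cell, take its neighborhood and compute 1/Σp², where p are the proportions of each dataset (iLISI) or cell type (cLISI) among the neighbors. The sketch below is a simplified version that assumes uniform weights over the k nearest neighbors, whereas the original LISI uses perplexity-based Gaussian weights. The function name and the toy data (two cell types × two batches, with an artificial batch shift standing in for the uncorrected embedding and its removal standing in for an idealized correction) are hypothetical.

```python
import numpy as np


def simple_lisi(X, labels, k=30):
    """Simplified LISI: inverse Simpson's index of label proportions
    among each cell's k nearest neighbors (uniform weights, self included)."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    # Dense pairwise squared distances: fine for toy data, O(n^2) memory.
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    neighbors = np.argsort(d2, axis=1)[:, :k]
    scores = np.empty(len(X))
    for i, idx in enumerate(neighbors):
        _, counts = np.unique(labels[idx], return_counts=True)
        p = counts / counts.sum()
        scores[i] = 1.0 / np.sum(p ** 2)
    return scores


# Toy data: 2 cell types x 2 batches, 100 cells each, 5 dimensions.
rng = np.random.default_rng(1)
cell_type = np.repeat([0, 1, 0, 1], 100)
batch = np.repeat([0, 0, 1, 1], 100)
before = rng.normal(size=(400, 5))
before[:, 0] += 10 * cell_type   # biological separation
before[:, 1] += 10 * batch       # technical batch shift (uncorrected)
after = before.copy()
after[:, 1] -= 10 * batch        # idealized correction removes the shift

for name, emb in (("before", before), ("after", after)):
    ilisi = np.median(simple_lisi(emb, batch))
    clisi = np.median(simple_lisi(emb, cell_type))
    print(f"{name}: median iLISI={ilisi:.2f}, median cLISI={clisi:.2f}")
```

With two batches the best achievable iLISI is 2, so the pattern to look for matches the protocol: iLISI rising from about 1 toward 2 while cLISI stays near 1.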

Workflow Diagram: Federated Harmony for Distributed Data


Key Research Reagent Solutions

The following table lists essential materials and datasets used in the experiments cited in this guide.

Table 2: Essential Research Reagents and Datasets for Single-Cell Integration Studies

| Item Name | Type | Function in Experiment | Example Source / Citation |
|---|---|---|---|
| 10x Genomics Chromium Platform | Technology Platform | Generating single-cell gene expression data (e.g., 3' v1, 3' v2, 5' chemistries) for benchmarking [2]. | 10x Genomics [2] |
| Human PBMCs (Peripheral Blood Mononuclear Cells) | Biological Sample | A well-characterized, complex tissue used as a standard for testing integration algorithms on primary human cells [6] [28] [2]. | Multiple healthy donors [6] [28] |
| Jurkat & 293T Cell Lines | Biological Sample | Controlled, known cell lines used for rigorous benchmarking of integration accuracy and mixing [2]. | 10x Genomics Datasets [29] [2] |
| Mouse Brain Tissue (Spatial) | Biological Sample | Used to validate Harmony's performance on spatial transcriptomics data across different batches (FFPE, fixed, fresh) [6] [28]. | 10x Genomics [6] [28] |
| scATAC-seq Data | Dataset | Used to demonstrate Harmony's ability to integrate single-cell chromatin accessibility data from different technological platforms [6] [28]. | 10x Genomics Multiome & scATAC-seq [6] [28] |

Workflow Diagram: Standard Harmony Integration in Seurat


The Scientist's Toolkit: Essential Research Reagents & Materials

Table 1: Key Research Reagents and Experimental Materials

| Item Name | Function/Application | Specific Example from Case Study |
|---|---|---|
| Fluorescence-Activated Cell Sorting (FACS) | Enrichment of specific immune cell populations from complex tissue samples. | Isolation of CD45+ cells, and subsequently CD45+CD206+CD3−CD19−CD20− cells, from human CNS interface tissues [31]. |
| Single-Cell RNA Sequencing (scRNA-seq) | Profiling transcriptomes of individual cells to identify cell types and states. | Analysis of over 356,000 transcriptomes from 102 individuals to characterize immune cell diversity [31]. |
| Cellular Indexing of Transcriptomes & Epitopes by Sequencing (CITE-seq) | Simultaneous measurement of single-cell transcriptomes and surface protein expression. | Profiling of 8,161 cells with 134 surface markers to validate protein-level expression of identified markers like CD304 and CD169 [31]. |
| Time-of-Flight Mass Cytometry (CyTOF) | High-dimensional characterization of single cells based on metal-tagged antibodies. | Used alongside scRNA-seq for deeper validation and characterization of immune cell states [31]. |
| Spatial Transcriptomics | Mapping gene expression data within the context of tissue architecture. | High-resolution spatial transcriptome analysis of anatomically dissected glioblastoma samples [31]. |
| CD206 (MRC1) Antibody | Pan-marker for CNS-associated macrophages (CAMs); used for FACS and validation. | Critical for enriching CAMs for deeper molecular characterization via sorting [31]. |
| MOFA2 (Multi-Omics Factor Analysis v2) | Statistical framework for unsupervised integration of multiple data modalities. | Integration of scRNA-seq, CITE-seq, and mass cytometry data to disentangle shared and unique sources of variation [31] [9]. |

Experimental Protocols & Workflows

Core Experimental Workflow for Multiomic Immune Cell Profiling

The following diagram outlines the major experimental and computational steps employed in the case study to characterize the innate immune landscape at human CNS borders.

Diagram 1: Integrated multiomic profiling workflow for CNS immune cells.

Detailed Methodological Steps

- Sample Acquisition and Preparation: Human CNS border tissues—including the parenchyma (PC), perivascular space (PV), leptomeninges (LM), choroid plexus (CP), and dura mater (DM)—were collected. Tissues were processed into single-cell suspensions using appropriate enzymatic and mechanical dissociation protocols [31].

- Immune Cell Enrichment via FACS: Single-cell suspensions were stained with fluorescently labeled antibodies. The key populations sorted were:

- Broad immune cells: CD45+ cells.

- Targeted myeloid cells: CD45+CD206+CD3−CD19−CD20− cells to deeply characterize CAMs and related populations [31].

- Multiomic Data Generation:

- scRNA-seq: Sorted cells were processed using droplet-based platforms (e.g., 10x Genomics) and the high-sensitivity mCEL-Seq2 protocol for plate-based sorting to generate single-cell transcriptomes [31].

- CITE-seq: Cells were incubated with a panel of 134 antibodies tagged with DNA barcodes, allowing for simultaneous sequencing of transcriptomes and surface protein levels [31].

- Mass Cytometry (CyTOF): Cells were stained with metal-tagged antibodies and analyzed by mass spectrometry to provide high-dimensional protein-level validation [31].

- Spatial Transcriptomics: Fresh frozen tissue sections were processed on spatial transcriptomics platforms (e.g., Visium) to map gene expression within the anatomical context of CNS borders and glioblastoma samples [31].

- Data Integration with MOFA2:

- Data Input: The different data modalities (scRNA-seq, CITE-seq, mass cytometry) were set up as distinct "views" in MOFA2. If samples came from different conditions (e.g., fetal, adult, glioblastoma), these were defined as "groups" [9].

- Model Training: MOFA2 was run to infer a set of latent factors that capture the major axes of variation across and within the data modalities. The model's Automatic Relevance Determination (ARD) prior helps distinguish shared from modality-specific variation [9] [32].
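The views-by-groups design implies a simple shape contract: within a group, every view must describe the same cells (rows), and within a view, every group must share the same features (columns). The sketch below checks that contract on a nested list indexed as data[view][group] with cells in rows. To our understanding this mirrors the nested-list input that the mofapy2 Python package accepts, but the sketch does not call mofapy2, and the helper name, view names, and group names are hypothetical. Missing measurements are encoded as NaN rather than by dropping cells.

```python
import numpy as np


def check_views_groups(data, view_names, group_names):
    """Validate a data[view][group] layout (cells in rows, features in columns).

    Returns the number of cells per group and the number of features per view.
    """
    if len(data) != len(view_names):
        raise ValueError("one entry per view is required")
    for m, view in enumerate(data):
        if len(view) != len(group_names):
            raise ValueError(f"view {view_names[m]!r} must have one matrix per group")
        if len({mat.shape[1] for mat in view}) != 1:
            raise ValueError(f"view {view_names[m]!r}: groups disagree on feature count")
    n_cells = {}
    for g, group in enumerate(group_names):
        rows = {data[m][g].shape[0] for m in range(len(data))}
        if len(rows) != 1:
            raise ValueError(f"group {group!r}: views disagree on cell count")
        n_cells[group] = rows.pop()
    n_features = {name: data[m][0].shape[1] for m, name in enumerate(view_names)}
    return n_cells, n_features


rng = np.random.default_rng(0)
views = ["RNA", "protein"]
groups = ["fetal", "adult"]
cells = {"fetal": 40, "adult": 60}
features = {"RNA": 500, "protein": 30}
data = [[rng.normal(size=(cells[g], features[v])) for g in groups] for v in views]
data[1][0][:5, :] = np.nan  # e.g., protein not measured for 5 fetal cells
print(check_views_groups(data, views, groups))
```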

MOFA2 Integration & Analysis Guide

Conceptual Framework of MOFA2

MOFA2 is a Bayesian probabilistic framework that integrates multiple omics data types by inferring a small number of latent factors. The core assumption is that each observed data modality (view) is generated as a linear combination of these shared latent factors, weighted by view-specific feature weights, plus noise [32].

Diagram 2: MOFA2 models data as a function of latent factors and weights.
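In simplified notation (Gaussian noise and the ARD prior on the weights only; the full model includes further sparsity-inducing priors), the generative model for view m in group g can be written as below. Here N_g is the number of cells in group g, D_m the number of features in view m, and K the number of factors.

```latex
\begin{aligned}
\mathbf{Y}^{(m)}_{g} &= \mathbf{Z}_{g}\,\big(\mathbf{W}^{(m)}\big)^{\top} + \boldsymbol{\varepsilon}^{(m)}_{g},
&& \mathbf{Y}^{(m)}_{g} \in \mathbb{R}^{N_g \times D_m},\;
   \mathbf{Z}_{g} \in \mathbb{R}^{N_g \times K},\;
   \mathbf{W}^{(m)} \in \mathbb{R}^{D_m \times K} \\
\varepsilon^{(m)}_{g,nd} &\sim \mathcal{N}\big(0,\, 1/\tau^{(m)}_{d}\big),
&& w^{(m)}_{dk} \sim \mathcal{N}\big(0,\, 1/\alpha^{(m)}_{k}\big)
\end{aligned}
```

A large precision α_k^(m) shrinks all weights of factor k in view m toward zero, so that factor explains little variance in that view; a factor whose α stays small in every view is shared across modalities.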

MOFA2 Configuration and Application in the Case Study

Table 2: MOFA2 Setup for CNS Immune Cell Integration

| Aspect | Configuration in the Case Study | Technical Rationale |
|---|---|---|
| Data Views | scRNA-seq transcript counts, CITE-seq protein counts, Mass cytometry protein data. | Captures different molecular layers from the same or similar cell populations [31] [9]. |
| Groups (if used) | Potentially "Fetal", "Adult", "Glioblastoma". | Allows the model to identify sources of variability shared across conditions and those specific to a condition [9]. |
| Data Likelihoods | Gaussian for normalized log-counts. | Standard for normalized and transformed sequencing or protein data. Count-based raw data should not be input directly [20]. |
| Number of Factors | Determined empirically; the model in the study identified 7 key factors in a related analysis. | The model should be trained with enough factors to capture biological variation without overfitting [20]. |
| Key Outputs | Factors: Coordinate for each cell. Weights: Per-feature (gene/protein) importance for each factor. Variance Explained: Per-factor R² value for each data view. | Factors ordinate cells; weights allow biological interpretation; variance explained quantifies factor importance [20] [9]. |

Technical Support Center: Troubleshooting MOFA2 and Experimental Workflows

Frequently Asked Questions (FAQs)

Q1: What is the main biological insight gained by using MOFA2 in this study, compared to analyzing each omic layer separately? A1: MOFA2 successfully identified latent factors that represent coordinated biological programs across transcriptomic and proteomic data. For example, it helped delineate a spectrum of MHC-II-low to MHC-II-high CNS-associated macrophages (CAMs) and distinguish them from dendritic cells and monocyte-derived macrophages, revealing a more continuous and integrated view of myeloid cell states than single-omics analysis could provide [31].

Q2: How should I preprocess my data (e.g., scRNA-seq counts) before inputting it into MOFA2? A2: Proper normalization is critical.

- Normalization: Remove library size effects. For count-based data like RNA-seq, perform size factor normalization and variance-stabilizing transformation (e.g., log(CPM+1)). Do not input raw counts.

- Filtering: Filter for highly variable features (genes/proteins) per assay to reduce noise and computational load.

- Batch Effect Correction: If you have strong known technical batches, regress them out before running MOFA2 using a tool like limma. Otherwise, MOFA may capture this technical variation as a dominant factor, obscuring biological signals [20].
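A minimal NumPy sketch of the normalization and batch-removal steps, under stated assumptions: `counts` is a cells × genes matrix of raw counts, log(CPM+1) is the transformation, and batch removal fits a one-hot batch design by least squares and subtracts the fitted batch means. This is similar in spirit to limma's batch removal but is not a reimplementation of it, and the function names are hypothetical. Unlike a full limma design, this version does not protect biological covariates, so it is unsuitable when batch is confounded with condition.

```python
import numpy as np


def log_cpm(counts):
    """Library-size normalize raw counts (cells x genes) to CPM, then log(CPM + 1)."""
    counts = np.asarray(counts, dtype=float)
    library_size = counts.sum(axis=1, keepdims=True)
    return np.log1p(counts / library_size * 1e6)


def remove_batch_means(X, batch):
    """Subtract per-batch feature means (least-squares fit of a one-hot batch
    design) and add back the overall mean, so values stay on the original scale."""
    batch = np.asarray(batch)
    levels = np.unique(batch)
    design = (batch[:, None] == levels[None, :]).astype(float)  # cells x batches
    coef, *_ = np.linalg.lstsq(design, X, rcond=None)  # rows = per-batch means
    return X - design @ coef + X.mean(axis=0)


rng = np.random.default_rng(0)
counts = rng.poisson(5, size=(60, 200))
counts[30:, :50] += rng.poisson(10, size=(30, 50))  # hypothetical batch effect
batch = np.repeat(["run1", "run2"], 30)

X = log_cpm(counts)
X_corrected = remove_batch_means(X, batch)
```

After correction, every batch has the same per-feature mean, which is exactly the variation MOFA2 would otherwise be tempted to capture as a technical factor.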

Q3: How do I interpret the outputs of MOFA2: factors, weights, and variance explained? A3:

- Factors: These are continuous, low-dimensional representations for each cell. The relative positioning of cells along a factor (positive vs. negative value) is what matters, not the absolute value.

- Weights: These indicate how strongly each molecular feature (gene/protein) is associated with a factor. Features with large absolute weights are the main drivers of that factor. The sign indicates the direction of the effect.

- Variance Explained: This shows the percentage of total variance in a specific data modality that is captured by each factor. It helps you prioritize which factors are most important for your analysis [20].
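Given factors Z (cells × K) and one view's weights W (features × K), both interpretive quantities can be recomputed in a few lines. The sketch below assumes the view's data Y is centered per feature and uses R² = 1 − SS_res/SS_tot with SS_tot = ΣY², computed for the reconstruction from each factor alone and from all factors together; this is our reading of how variance explained is defined for MOFA-type models. The function names and simulated data are hypothetical; in practice Z and W would come from the trained model.

```python
import numpy as np


def variance_explained(Y, Z, W):
    """Per-factor and total R^2 for one view (Y centered per feature)."""
    ss_total = np.sum(Y ** 2)
    per_factor = np.array([
        1 - np.sum((Y - np.outer(Z[:, k], W[:, k])) ** 2) / ss_total
        for k in range(Z.shape[1])
    ])
    total = 1 - np.sum((Y - Z @ W.T) ** 2) / ss_total
    return per_factor, total


def top_features(W, feature_names, factor, n=5):
    """Features with the largest absolute weight on one factor, with signed weights."""
    order = np.argsort(-np.abs(W[:, factor]))[:n]
    return [(feature_names[i], float(W[i, factor])) for i in order]


rng = np.random.default_rng(0)
Z = rng.normal(size=(200, 2))
Z -= Z.mean(axis=0)
W = np.zeros((6, 2))
W[:, 0] = [0.5, 0.2, 0.1, -3.0, 0.3, 0.0]  # factor 0: driven by gene_3 (negative)
W[:, 1] = 0.1                              # factor 1: weak, diffuse
Y = Z @ W.T + 0.05 * rng.normal(size=(200, 6))
Y -= Y.mean(axis=0)
names = [f"gene_{i}" for i in range(6)]

r2_per_factor, r2_total = variance_explained(Y, Z, W)
print(np.round(r2_per_factor, 3), round(r2_total, 3))
print(top_features(W, names, factor=0, n=2))
```

The signed output illustrates the weight interpretation above: gene_3 dominates factor 0 with a negative weight, so cells with high factor 0 scores tend to have low gene_3 expression.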

Q4: My data modalities have very different numbers of features (e.g., 20,000 genes vs. 100 proteins). Will this bias the model? A4: Yes, larger data modalities can be overrepresented. It is good practice to filter uninformative features (e.g., by minimum variance) to bring the different views to a similar order of magnitude. If this is not possible, be aware that MOFA2 might miss small sources of variation present only in the smaller dataset [20].
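One simple way to follow this advice is to cap every view at the same number of highest-variance features. The sketch below assumes each view is a cells × features NumPy array after normalization; the 500-feature cap, the view names, and the helper names are arbitrary illustrations, and variance is only one possible filtering criterion.

```python
import numpy as np


def top_variance_features(X, n_keep):
    """Keep the n_keep columns with the highest variance, in original column order."""
    keep = np.sort(np.argsort(-X.var(axis=0))[:n_keep])
    return X[:, keep], keep


def balance_views(views, max_features=500):
    """Cap every view at max_features so no view dominates by size alone."""
    return {
        name: top_variance_features(X, min(max_features, X.shape[1]))[0]
        for name, X in views.items()
    }


rng = np.random.default_rng(0)
views = {
    "RNA": rng.normal(size=(50, 2000)) * rng.uniform(0.1, 2.0, size=2000),
    "protein": rng.normal(size=(50, 100)),
}
balanced = balance_views(views, max_features=500)
print({name: X.shape for name, X in balanced.items()})
```

Here the RNA view shrinks from 2,000 to 500 features while the 100-protein view is left intact, narrowing the size gap to a similar order of magnitude.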

Q5: Can I include known covariates (like patient age or sex) directly in the MOFA2 model? A5: The developers do not recommend it. Covariates are often discrete and may not reflect the underlying molecular biology. MOFA2 is designed to learn the latent factors in an unsupervised manner. It is better to learn the factors and then relate them to your biological covariates after the model has been trained [20].
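Relating factors to covariates after training can be as simple as correlating each factor with each covariate. The sketch below assumes a factor matrix Z (samples × K) already extracted from a trained model and covariates stored in a dict of numeric arrays, with binary covariates such as sex coded 0/1 (so the Pearson coefficient becomes a point-biserial correlation). The function name and simulated values are hypothetical.

```python
import numpy as np


def factor_covariate_correlations(Z, covariates):
    """Pearson correlation of every factor (column of Z) with every covariate."""
    results = {}
    for name, values in covariates.items():
        v = np.asarray(values, dtype=float)
        results[name] = np.array(
            [np.corrcoef(Z[:, k], v)[0, 1] for k in range(Z.shape[1])]
        )
    return results


rng = np.random.default_rng(0)
n = 200
age = rng.uniform(20, 80, size=n)
sex = rng.integers(0, 2, size=n)  # binary covariate coded 0/1
Z = np.column_stack([
    (age - age.mean()) / age.std() + 0.2 * rng.normal(size=n),  # factor 0 tracks age
    rng.normal(size=n),                                          # factor 1 unrelated
])
corr = factor_covariate_correlations(Z, {"age": age, "sex": sex})
for name, r in corr.items():
    print(name, np.round(r, 2))
```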

Troubleshooting Guides

Table 3: Common MOFA2 Errors and Solutions

| Problem | Possible Cause | Solution |
|---|---|---|
| Error: ModuleNotFoundError: No module named 'mofapy2' | The Python dependency mofapy2 is not installed, or R is pointing to the wrong Python installation. | 1. Restart R and try again. 2. Ensure mofapy2 is installed in your Python environment. 3. Explicitly tell R which Python to use: library(reticulate); use_python("/path/to/your/python") [33]. |